Photography notes: Difference between revisions

m (→On digital ISO) |

|||

| (24 intermediate revisions by the same user not shown) | |||

| Line 64: | Line 64: | ||

* Which can be seen as more sensitivity, | * Which can be seen as more sensitivity, | ||

: since it's just amplification, it increases the amount of signal as much as the noise | : since it's just amplification, it increases the amount of signal as much as the noise | ||

: ...but it's a ''little'' more interesting than that due to the pragmatics of photography | : ...but it's a ''little'' more interesting than that due to the pragmatics of photography (and some subtleties of electronics) | ||

* You generally want to set it as low as sensible for a situation, or leave it on auto | * You generally want to set it as low as sensible for a situation, or leave it on auto | ||

ISO in film | ISO in film indicates the grain size in photo rolls (see [https://en.wikipedia.org/wiki/Film_speed#ISO_5800 ISO 5800]). | ||

This amounts to: Smaller grains bring out finer detail but require more light to react for the same amount of image; larger grains are coarser but more sensitive. | |||

ISO in digital photography (see also ISO 12232) is different. It refers to the amplification used during the (at this stage still analog) readout of sensor rows - basically, | ISO in digital photography (see also ISO 12232) is different. | ||

It refers to the amplification used during the (at this stage still analog) readout of sensor rows - basically, what gain to use before feeding the signal to the ADC. | |||

...okay, but what does that do? What does it amount to in practice? | |||

| Line 553: | Line 556: | ||

--> | --> | ||

==Infrared== | ==Lens hoods== | ||

<!-- | |||

The plastic tube/crown looking things you stick on front of your lens. | |||

The main reason is to avoid strong light that is ''slightly'' out of frame from entering your lens. That light wouldn't hit the sensor directly, but would light up the side inside the lens, and reflect some of that to the sensor. | |||

The result of this is light pollution and creates a washed out look. | |||

This is distinct from lens flares. | |||

They can also help as basic physical (the bump-into-things sort) protection of the frontmost glass. | |||

Many are the simple tube shape. | |||

The funny-shaped ones are for wider-angle lenses, staying just out of shot for them but still giving some protection. | |||

(Consider that the camera gets a rectangular view on the world. This shape is what you get if you cut that out of a tube. | |||

Since that rectangle is wider in the left-right direction, that's where the shorter bits of these hoods go) | |||

--> | |||

=Random hints and factoids= | |||

=Infrared= | |||

{{stub}} | |||

==Infrared and film== | |||

==Infrared and digital sensors== | |||

The construction of bare optical camera sensors typically means their sensitivity runs off into UV on one end and into IR on the other. | |||

Note that with just how large the range of infrared is, that's only [[near-infrared]], and then only a smallish part of ''that''. | |||

For reference, our eyes see ~700nm (~red) to ~400nm (~violet) (what we call visible light), | |||

while {{imagesearch|(cmos OR ccd) infrared sensitivity|CCD and CMOS image sensors might see perhaps 1000nm to ~350nm}}. | |||

<!--(you could further extend this with scintillators) | |||

include all of infrared 1000000nm | |||

--> | |||

The amount and shape of that overall sensitivity varies, but tl;dr: they look slightly into near-IR (also slightly into UV-A on the other end). | |||

This is mostly a bug rather than a feature, so there is usually specifically a filter to remove that sensitivity. | |||

'''Changing that sensitivity''' | |||

Broadly speaking, there are ''two'' things we might call "infrared filters": IR-cut and IR-pass, which do exactly opposite things of each other. | |||

* '''IR-cut filters''' are the thing already mentioned above: on optical image sensors, these will remove most of that IR response | |||

:: because it turns out to be easier to add a separate infrared-cut filter than to design the sensor itself to be more selective{{verify}} | |||

:: usually a glass filter right on top of the camera image sensor, because you'd want it always and built in | |||

:: they look transparent, with a bluish tint from most angles (because they also remove a little visible red) | |||

:: cuts a good range ''above'' some point, or rather transition, often somewhere around 740...800nm | |||

:: Since it's a transition, bright near-IR might still be visible, particularly for closer wavelengths. For example, IR remote controls are often in the 840..940nm range, and send short but intense pulses, which tends to still be (barely) visible. But there aren't a lot of bright near-IR sources that aren't also bright in visible light, so this smaller leftover sensitivity just doesn't matter much. | |||

* '''IR-pass, visible-cut filters''' | |||

:: often look near-black | |||

:: cut everything ''below'' a transition, somewhere around 720..850nm range | |||

:: using these on a camera that has an IR-cut will give you very little signal (it's much like an audio highpass and lowpass set to about the same frequency - you'll have very little response left) | |||

:: but on a camera with IR sensitivity, this lets you view mostly IR ''without'' much of the optical | |||

Notes: | |||

<!-- | |||

* if you look for infrared photography images, the things with the stark contrast, that's mostly | |||

--> | |||

* DIY versus non-DIY NIR cameras | |||

image sensors are usually made for color, which means each pixel has its own color filter. Even if you remove the IR filter, each of these may also filter out some IR | |||

:: as such, even though you can modify many optical sensors to detect IR, they'll never do so ''quite'' as well as a specific-purpose one, but it's close enough for creative purposes | |||

:: There are also NIR cameras, which are functionally much like converted handhelds, but a little more refined than most DIYing, because they select sensors that look further into NIR {{verify}} | |||

* If your camera's IR-cut is a physically separate filter, rather than a coating, you can remove it to get back the sensitivity that the sensor itself has | |||

: doing so leaves you with something that looks ''mostly'' like regular optical, but stronger infrared sources will look like a pink-ish white. | |||

:: White-ish because IR above 800nm or so passes through all of the [[Bayer filter]] color filters similarly | |||

:: pink-ish mostly because infrared is next to red, so the red filter passes more of it, and red tends to dominate. | |||

::: This is why IR photographers may use a color filter to reduce visible red -- basically so that the red pixels pick up mostly IR, not visible-red-and-IR. | |||

:::: Which is just as false-color as before but looks a little more contrasty. | |||

:::: These images may then be color corrected to appear more neutral, which tends to come out as white-and-blueish | |||

* visible-and-IR mixes will look somewhat fuzzy, because IR has its own focal point, so in some situations you would want the visible-cut, IR-pass filter | |||

* You can't make a thermal camera with such DIYing - those sensors are significantly different. | |||

: Thermographic cameras are often sensitive to a larger range, like 14000nm to 1000nm, the point being that they focus more on [[mid-infrared]] than near-infrared | |||

In photography, base cameras and lens filters give you options like: | |||

: cutting IR and some red - similar to a regular blue filter | |||

: passing only IR | |||

: passing IR plus blue - happens to be useful for crop analysis{{verify}} | |||

: passing IR plus all visible | |||

: passing everything (including the little UV) | |||

For some DIY, like FTIR projects, | |||

note that there are ready-made solutions, such as the Raspberry's NoIR camera | |||

If you want to do this yourself, | |||

you may want to remove a webcam's IR-cut filter - and possibly put in an IR-pass filter. | |||

* In webcams the IR-cut tends to be a piece of glass in the screwable lens | |||

:: which may just be removable | |||

* For DSLRs the IR-cut is typically a layer on top of the sensor | |||

:: which can be much harder to deal with | |||

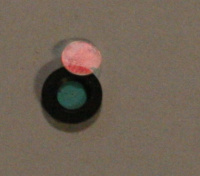

[[File:IR-cut from webcam.jpg|200px|thumb|right|two IR-cut filters from webcams. Looking through them looks blue, they reflect red.]] | |||

While in DSLRs the IR-cut is typically one of a few layers mounted on top of the sensor (so that not every lens has to have it), | |||

in webcams, the IR-cut filter may well be on the back of the lens assembly | |||

<!-- | <!-- | ||

You can get IR-pass filters as foil. | |||

Apparently floppy disks are a halfway decent DIY alternative, | |||

except they block a bunch of everything (including IR) and their transmission band seems fairly narrow{{verify}}. | |||

Indoor, a good source of IR can help (outdoor too but you need a ''bunch''). | |||

Lightbulbs are decent (actually give off about as much IR as visible light), CFLs not so much. | |||

Candles are also great IR sources. | |||

There are also constructions with a lot of IR LEDs. | |||

Of course, if a camera does proper IR cut, adding such an IR-pass,-visible-cut filter will have the net effect of blocking out almost everything, which means you'll see nothing, or very grainy images at best. | |||

In general, you can get better IR sensitivity by ''also'' taking out the IR-cut filter. | |||

Depending on the camera, this may be a pretty delicate operation, and tends to be fairly permanent. | |||

--> | |||

See also: | |||

* http://dpfwiw.com/ir.htm#ir_filters | |||

* https://kolarivision.com/articles/choosing-a-filter/ | |||

<!-- | |||

Can you see through clothes? | |||

Short answer no - being sensitive to NIR just means being sensitive to a different wavelength, | |||

: a wavelength that most clothes are opaque to | |||

: in fact a ''lot'' of materials work similarly enough in IR | |||

:: but may be a ''little'' more transparent or opaque, e.g. black cotton will look a little brighter in IR than in optical {{verify}} | |||

: some are more opaque in IR than optical (like glass), | |||

: some are ''moderately'' transparent to NIR (silicon, seemingly mostly synthetics and then primarily when thin[https://physics.stackexchange.com/questions/3750/why-is-a-plastic-bag-transparent-in-infrared-light]), | |||

:: | |||

A lot of security cameras are sensitive to NIR, because it means you have a choice of adding a visible floodlight OR an infrared floodlight. | |||

When sony had a kerfuffle about cameras that "had the technology to see through people's clothes", | |||

it's basically the same: usable without the IR-cut filter, and with an infrared light. | |||

The only real difference seems to be that you could turn that on in the day, which meant | |||

that for the select few synthetic clothes that were relatively transparent to NIR, | |||

Actually transparent basically doesn't happen, but there are some loosely woven, sheer, or very thin fabrics. | |||

And note that sheer fabrics are already more transparent in bright lighting - like a photocamera with a flash. | |||

So there were a number of cases you could get a usually-still-vague outline of something you couldn't see with your eyes. | |||

No, it did not make the world a nudist colony, not anywhere close. | |||

It's more of a case of "we can't have nice tools because someone was a perv and someone else a prude about it". | |||

--> | --> | ||

==Thermal camera== | |||

<!-- | <!-- | ||

{{stub}} | |||

Most thermal cameras (a.k.a. thermographic cameras, thermal imagers) are sensitive to roughly 1μm to 14 μm[https://en.wikipedia.org/wiki/Thermographic_camera] (mostly in [[mid-infrared]]), and the amount of heat emitted in that range correlates well to temperature -- given a few assumptions about emissivity. | |||

Note that while these are often called cameras, most are designed as functional tools responding to that range ''only'', and will not easily make pretty pictures - [https://www.smithsonianmag.com/science-nature/this-photographer-shoots-portraits-with-a-thermal-camera-1437109/ though you can]. | |||

The properties of objects in this range are a little different from your optical intuitions. | |||

[[Image:LesliesCube.png|thumb|right|300px|See also [https://en.wikipedia.org/wiki/Emissivity Wikipedia:Emissivity]]] | |||

The image on the right is a good example of what emissivity does to your readings: | |||

all surfaces of this aluminium are the same ''temperature'', | |||

but only the painted surfaces have high emissivity (>0.9) so will show up as almost the correct temperature. | |||

The two bare surfaces barely show up clearly above ambient, but nowhere near the actual temperature. | |||

The polished bare aluminium side acts like a good reflector in infrared as well as optical ranges; the matte bare aluminium side is a somewhat poorer reflector. | |||

So when you use one of these out there, | |||

remember it's only a good temperature sensor for non-reflective matte surfaces, | |||

but in particular metal will fool you, and glass might (glass is emissive, unless it has a low-E coating). | |||

Since you're likely to point these things at buildings: the emissivity of a lot of materials is somewhere around 0.8..0.9 [https://en.wikipedia.org/wiki/Emissivity#Emissivities_of_common_surfaces] so the often-default setting of 0.9 is a decent tradeoff. | |||

Assume glossy materials may be down in the 0.6 to 0.3 range, and down to almost zero is possible though not common. | |||

If you want to measure things like heatsinks, consider sticking on something like a bit of [[kapton tape]] - it's a decent tradeoff of | |||

indicating decently, | |||

won't burn even if it's pretty hot, and | |||

is removable without a mess. | |||

'''Sensor specs''' | |||

Classically these were order of 32x32, read out at less than 9fps, for hundreds of bucks, | |||

hundreds of pixels to a side and/or ~25fps only if you wanted to pay a lot more. | |||

Recent developments, and competition, have made price-performance recognizably better. | |||

'''A separate optical camera''' will give you better reference of ''what'' is hot. | |||

Depending on what you're doing this may barely be necessary, | |||

because when there is enough variation, you can usually recognize the shapes. | |||

Also, the closer you are, the more that the fact that this is a separate camera at an offset means it won't line up perfectly anyway. | |||

I've seen some people make a jig and put a regular camera next to it, for a separate, higher-quality image. | |||

--> | --> | ||

Latest revision as of 04:29, 4 February 2024

Flash

Digital

Digital raw formats

On digital ISO

tl;dr:

- higher ISO means more gain during readout of the sensor.

- Which can be seen as more sensitivity,

- since it's just amplification, it increases the amount of signal as much as the noise

- ...but it's a little more interesting than that due to the pragmatics of photography (and some subtleties of electronics)

- You generally want to set it as low as sensible for a situation, or leave it on auto

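ISO in film indicates film speed, that is, how sensitive the emulsion is to light, which in practice goes hand in hand with the grain size in photo rolls (see ISO 5800).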

This amounts to: Smaller grains bring out finer detail but require more light to react for the same amount of image; larger grains are coarser but more sensitive.

ISO in digital photography (see also ISO 12232) is different.

It refers to the amplification used during the (at this stage still analog) readout of sensor rows - basically, what gain to use before feeding the signal to the ADC.

...okay, but what does that do? What does it amount to in practice?

Given a sensor with an image currently in it, the only change it would really make to the readout is brightness, not signal-to-noise ratio, or quality in any other way.

In that sense, it has no direct effect on the amount of light accumulated in the sensor. However, since it is one of the physical parameters the camera chooses (alongside aperture and shutter time), it can choose to trade off one for another.

For example, in the dark, a camera on full auto is likely to choose a wide open aperture (for the most light), and then choose a higher ISO if that means the shutter time can be lowered to not introduce too much motion blur from shaking hands.
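As a rough sketch of that trade: assume output brightness is simply proportional to collected light times gain, and that ISO numbers scale linearly with gain. Both are simplifications, and `shutter_time_for_iso` is a made-up helper, not any camera's API. Under those assumptions, the shutter time a higher ISO buys you at a fixed aperture can be estimated like this:

```python
def shutter_time_for_iso(base_shutter_s: float, base_iso: float, new_iso: float) -> float:
    """Shutter time that gives the same output brightness at new_iso.

    Assumes the aperture stays fixed and that brightness is proportional
    to (shutter time * ISO), which is an idealisation of real cameras.
    """
    if base_shutter_s <= 0 or base_iso <= 0 or new_iso <= 0:
        raise ValueError("shutter time and ISO values must be positive")
    return base_shutter_s * base_iso / new_iso


if __name__ == "__main__":
    base = 1 / 15  # seconds at ISO 100: slow enough for hand shake to show
    for iso in (100, 400, 1600):
        t = shutter_time_for_iso(base, 100, iso)
        print(f"ISO {iso:>4}: about 1/{round(1 / t)} s")
```

Each doubling of ISO halves the shutter time needed, which is why auto modes reach for ISO once the aperture is already wide open.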

There are other such tradeoffs, e.g. controlled via modes -- for example, portrait mode tries to open the aperture so the background is blurred, sports mode aims for a short shutter time so you get minimal motion blur, but at the cost of noise, and more. But most of these are explained mostly in terms of the aperture/shutter tradeoff, and ISO choice is relatively unrelated, and can be explained as "as low as is sensible for the light level".

Still, you can play with it.

Note that the physical parameters are chosen with the sensor in mind - to not saturate its cells (over-exposure). (There are also other constraints, like avoiding underexposure and signal falling into the noise floor. In general the camera also tries to use much of the storage range it has, also to avoid unnecessary quantization, though this is less important.)

As such, when you force a high ISO (i.e. high gain) but leave everything else auto, you will effectively force a camera to choose a shorter shutter time and/or smaller aperture.

Which means less actual light being used to form the image, which implies lower signal-to-noise (because a noise floor is basically a constant). The noise is usually still relatively low, but the noise can become noticeable e.g. in lower-light conditions.

Similarly, when you force a low ISO, the camera must plan for more light coming in, often meaning a longer exposure time.

On a tripod this can mean nicely low-noise images, while in your hands it typically means shaky-hand blur.

In a practical sense: when you have a lot of light, somewhat low ISO gives an image with less noise.

When you have little light, high ISO lets you bring out what's there, with inevitable noise.

Leaving it on auto tends to do something sensible.
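To make the signal-to-noise argument above a little more concrete, here is a toy model. It assumes noise is photon shot noise plus a constant read-noise floor, all of it present before the gain stage. The read-noise value and the function names are illustrative assumptions, not measurements of any real sensor, and the model ignores the electronic subtleties mentioned earlier.

```python
import math


def snr(photoelectrons: float, read_noise_e: float = 3.0) -> float:
    """Signal-to-noise ratio of one pixel that collected `photoelectrons`.

    Noise = shot noise (sqrt of the signal) and a constant read-noise
    floor, added in quadrature. read_noise_e is an assumed value.
    """
    noise = math.sqrt(photoelectrons + read_noise_e ** 2)
    return photoelectrons / noise


def amplified(photoelectrons: float, gain: float, read_noise_e: float = 3.0):
    """Return (signal, noise) after gain; gain scales both equally."""
    noise = math.sqrt(photoelectrons + read_noise_e ** 2)
    return gain * photoelectrons, gain * noise


if __name__ == "__main__":
    # Same output brightness two ways: lots of light at low gain,
    # or a sixteenth of the light at sixteen times the gain.
    for electrons, gain in ((1600, 1), (100, 16)):
        signal, noise = amplified(electrons, gain)
        print(f"{electrons:>5} e-, gain {gain:>2}: "
              f"output {signal:.0f}, SNR {signal / noise:.1f}")
```

In this model the gain cancels out of the ratio, so the only thing that changes signal-to-noise is how much light was collected. That matches the point that forcing a high ISO costs quality indirectly, by making the camera collect less light.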

More technical details

On a sensor's dynamic range and bits

Lens hoods

Random hints and factoids

Infrared

Infrared and film

Infrared and digital sensors

The construction of bare optical camera sensors typically means their sensitivity runs off into UV on one end and into IR on the other.

Note that with just how large the range of infrared is, that's only near-infrared, and then only a smallish part of that.

For reference, our eyes see ~700nm (~red) to ~400nm (~violet) (what we call visible light), while CCD and CMOS image sensors might see perhaps 1000nm to ~350nm.

The amount and shape of that overall sensitivity varies, but tl;dr: they look slightly into near-IR (also slightly into UV-A on the other end).

This is mostly a bug rather than a feature, so there is usually specifically a filter to remove that sensitivity.

Changing that sensitivity

Broadly speaking, there are two things we might call "infrared filters": IR-cut and IR-pass, which do exactly opposite things of each other.

- IR-cut filters are the thing already mentioned above: on optical image sensors, these will remove most of that IR response

- because it turns out to be easier to add a separate infrared-cut filter than to design the sensor itself to be more selective(verify)

- usually a glass filter right on top of the camera image sensor, because you'd want it always and built in

- they look transparent, with a bluish tint from most angles (because they also remove a little visible red)

- cuts a good range above some point, or rather transition, often somewhere around 740...800nm

- Since it's a transition, bright near-IR might still be visible, particularly for closer wavelengths. For example, IR remote controls are often in the 840..940nm range, and send short but intense pulses, which tends to still be (barely) visible. But there aren't a lot of bright near-IR sources that aren't also bright in visible light, so this smaller leftover sensitivity just doesn't matter much.

- IR-pass, visible-cut filters

- often look near-black

- cut everything below a transition, somewhere around 720..850nm range

- using these on a camera that has an IR-cut will give you very little signal (it's much like an audio highpass and lowpass set to about the same frequency - you'll have very little response left)

- but on a camera with IR sensitivity, this lets you view mostly IR without much of the optical
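The highpass/lowpass comparison can be sketched numerically. The model below treats each filter as a smooth logistic transition. The transition points are picked from within the ranges quoted above, and the 20nm transition width is purely an assumption for illustration, since real filter curves vary by product.

```python
import math


def ir_cut(wavelength_nm: float, transition_nm: float = 760.0, width_nm: float = 20.0) -> float:
    """Fraction transmitted by a modelled IR-cut filter (passes below the transition)."""
    return 1.0 / (1.0 + math.exp((wavelength_nm - transition_nm) / width_nm))


def ir_pass(wavelength_nm: float, transition_nm: float = 780.0, width_nm: float = 20.0) -> float:
    """Fraction transmitted by a modelled IR-pass filter (passes above the transition)."""
    return 1.0 / (1.0 + math.exp(-(wavelength_nm - transition_nm) / width_nm))


def stacked(wavelength_nm: float) -> float:
    """Transmission when both filters sit in the same light path."""
    return ir_cut(wavelength_nm) * ir_pass(wavelength_nm)


if __name__ == "__main__":
    print("  nm  IR-cut  IR-pass  both")
    for nm in range(400, 1001, 100):
        print(f"{nm:>4}  {ir_cut(nm):6.3f}  {ir_pass(nm):7.3f}  {stacked(nm):5.3f}")
```

With these assumed curves each filter alone passes nearly everything on its own side, while the stacked pair only lets through a small sliver around the shared transition. That is the "very little signal" case of putting an IR-pass filter on a camera that still has its IR-cut.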

Notes:

- DIY versus non-DIY NIR cameras

image sensors are usually made for color, which means each pixel has its own color filter. Even if you remove the IR filter, each of these may also filter out some IR

- as such, even though you can modify many optical sensors to detect IR, they'll never do so quite as well as a specific-purpose one, but it's close enough for creative purposes

- There are also NIR cameras, which are functionally much like converted handhelds, but a little more refined than most DIYing, because they select sensors that look further into NIR (verify)

- If your camera's IR-cut is a physically separate filter, rather than a coating, you can remove it to get back the sensitivity that the sensor itself has

- doing so leaves you with something that looks mostly like regular optical, but stronger infrared sources will look like a pink-ish white.

- White-ish because IR above 800nm or so passes through all of the Bayer filter color filters similarly

- pink-ish mostly because infrared is next to red, so the red filter passes more of it, and red tends to dominate.

- This is why IR photographers may use a color filter to reduce visible red -- basically so that the red pixels pick up mostly IR, not visible-red-and-IR.

- Which is just as false-color as before but looks a little more contrasty.

- These images may then be color corrected to appear more neutral, which tends to come out as white-and-blueish


- visible-and-IR mixes will look somewhat fuzzy, because IR has its own focal point, so in some situations you would want the visible-cut, IR-pass filter

- You can't make a thermal camera with such DIYing - those sensors are significantly different.

- Thermographic cameras are often sensitive to a larger range, like 14000nm to 1000nm, the point being that they focus more on mid-infrared than near-infrared

In photography, base cameras and lens filters give you options like:

- cutting IR and some red - similar to a regular blue filter

- passing only IR

- passing IR plus blue - happens to be useful for crop analysis(verify)

- passing IR plus all visible

- passing everything (including the little UV)

For some DIY, like FTIR projects,

note that there are ready-made solutions, such as the Raspberry Pi NoIR camera

If you want to do this yourself,

you may want to remove a webcam's IR-cut filter - and possibly put in an IR-pass filter.

- In webcams the IR-cut tends to be a piece of glass in the screwable lens

- which may just be removable

- For DSLRs the IR-cut is typically a layer on top of the sensor

- which can be much harder to deal with

While in DSLRs the IR-cut is typically one of a few layers mounted on top of the sensor (so that not every lens has to have it), in webcams, the IR-cut filter may well be on the back of the lens assembly

See also: