Display types

=Few-element=

==Lighting==

===Nixie tubes===

[[Image:Nixie2.gif|thumb|right]]

<!--

Nixie tubes were some of the earliest outputs of computers.

They have been an inefficient solution since around the time of the transistor.

But they're still pretty.

[[Lightbulb_notes#Nixie_tubes]]

-->

<br style="clear:both"/>

===Eggcrate display===

<!--

An eggcrate display is a grid (often 5 by 7) of often-incandescent, often-smallish lightbulbs, named for the pattern of round cutouts that looks like an egg crate.

These were bright and primarily used in game shows, presumably because they would show up fine even in bright studio lighting.

Note that when showing $0123456789, not all bulb positions are necessary.

-->

==Mechanical==

===Mechanical counter===

https://en.wikipedia.org/wiki/Mechanical_counter

===Split-flap===

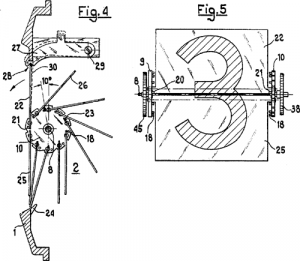

[[Image:Split-flap diagram.png|thumb|right]]

<!--

If you're over thirty or so, you'll have seen these at airports. There are a few remaining now, but only a few.

They're somehow satisfying to many, and that rustling sound is actually nice feedback on when you may want to look at the board again.

They are entirely mechanical, and only need to be moderately precise (well, assuming they only need ~36 or so characters).

https://www.youtube.com/watch?v=UAQJJAQSg_g

-->

https://en.wikipedia.org/wiki/Split-flap_display

<br style="clear:both"/>

===Vane display===

===Flip-disc===

https://en.wikipedia.org/wiki/Flip-disc_display

===Other flipping types===

<!--

-->

==LED segments==

===7-segment and others===

{{stub}}

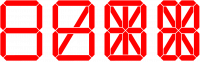

[[File:Segment displays.png|thumb|right|200px|7-segment, 9-segment, 14-segment, and 16-segment displays. Those meant for numbers will have a dot next to each digit (also common in general); those meant for time will have a colon in one position.]]

These are really just separate lights that happen to be arranged in a useful shape.

Most typically they are LEDs (with a common cathode or anode), though the same idea is sometimes implemented in other display types, notably electromechanical ones and sometimes VFDs.

Even the simplest 7-segment LED display involves a bunch of connections, so these are
* often driven multiplexed, so that only one digit is lit at a time,
* often driven via a controller that handles that multiplexing for you<!--
: which one depends on context, e.g. whether it's a BCD-style calculator or a microcontroller, and which interface is more convenient for you
:: if you're the DIY type who bought a board, you may be looking at things like the MAX7219 or MAX7221, TM1637 or TM1638, HT16K33, or the 74HC595 (a shift register)
-->
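To make the multiplexing idea concrete, here is a minimal Python sketch. It assumes each digit has 8 segment inputs (a-g plus a decimal point) shared between all digits, plus one select line per digit. It counts the lines needed with and without multiplexing and lists what one refresh cycle drives. `scan_cycle` and the other names are made up for illustration; real code would set GPIO pins (or leave this to one of the controller chips) instead of returning tuples.

```python
SEGMENT_LINES = 8  # a-g plus decimal point (assumption: one dp per digit)

def lines_direct(digits: int) -> int:
    """Every segment of every digit gets its own line (commons tied together)."""
    return digits * SEGMENT_LINES

def lines_multiplexed(digits: int) -> int:
    """Segment lines are shared; each digit only adds its own select line."""
    return SEGMENT_LINES + digits

def scan_cycle(patterns):
    """One refresh cycle: (digit index, select mask, segment byte) per time slice.

    Only one bit is set in the select mask at any moment, so only one digit
    is lit, showing its own pattern on the shared segment lines.
    """
    return [(i, 1 << i, pattern) for i, pattern in enumerate(patterns)]

def slice_ms(digits: int, refresh_hz: float) -> float:
    """How long each digit stays lit if the whole display refreshes at refresh_hz."""
    return 1000.0 / (digits * refresh_hz)

print(lines_direct(4), lines_multiplexed(4))   # 32 12
print(scan_cycle([0x06, 0x5B]))                # [(0, 1, 6), (1, 2, 91)]
print(slice_ms(4, 100))                        # 2.5
```

Since each digit is only lit a fraction of the time (a quarter, for four digits), the scan has to be fast enough not to flicker, and a multiplexed digit looks dimmer than the same LED lit continuously at the same current.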

Seven segments are the minimal and classical case: good enough to display numbers (and so e.g. times), but not really characters. Displays with more than seven segments are preferred for that.

https://en.wikipedia.org/wiki/Seven-segment_display
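The classic 7-segment encoding for digits, as a small Python sketch. It assumes the common convention of labeling the segments a to g clockwise from the top (g being the middle bar) and packing them into a byte as bit 0 = a up to bit 6 = g, with bit 7 as the decimal point; actual boards and driver chips may wire or order the bits differently. `render` draws a pattern as ASCII art, which also shows why letters like M or W have no good 7-segment form.

```python
# Bit 0 = segment a (top), 1 = b (top right), 2 = c (bottom right),
# 3 = d (bottom), 4 = e (bottom left), 5 = f (top left), 6 = g (middle),
# 7 = decimal point. This ordering is a common convention, not universal.
DIGITS = {
    "0": 0x3F, "1": 0x06, "2": 0x5B, "3": 0x4F, "4": 0x66,
    "5": 0x6D, "6": 0x7D, "7": 0x07, "8": 0x7F, "9": 0x6F,
}
DOT = 0x80

def encode(text: str) -> list:
    """Turn a string of digits (and dots) into one segment byte per digit."""
    out = []
    for ch in text:
        if ch == "." and out:
            out[-1] |= DOT          # a dot lights the previous digit's dp
        else:
            out.append(DIGITS[ch])
    return out

def render(code: int) -> str:
    """Draw one segment byte as three lines of ASCII art (dp not drawn)."""
    a, b, c, d, e, f, g = ((code >> bit) & 1 for bit in range(7))
    top = " " + ("_" if a else " ") + " "
    mid = ("|" if f else " ") + ("_" if g else " ") + ("|" if b else " ")
    bot = ("|" if e else " ") + ("_" if d else " ") + ("|" if c else " ")
    return "\n".join([top, mid, bot])

print([hex(c) for c in encode("12.5")])   # ['0x6', '0xdb', '0x6d']
print(render(DIGITS["2"]))
```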

==DIY==

===LCD character displays===

Character displays are basically those with predefined (and occasionally rewritable) fonts.

====Classical interface====

The more barebones interface is often a 16-pin row with a pinout like:
* Ground
* Vcc
* Contrast
: usually there's a (trim)pot from Vcc, or a resistor if it's fixed
* RS: Register Select (character or instruction)
: in instruction mode, it receives commands like 'clear display' and 'move cursor'
: in character mode, it receives the character codes to display
* RW: Read/Write
: tied to ground means write, which is usually the only thing you do
* EN: Enable, which acts as the clock for writes
* D0-D7: 8 data lines, though you can do most things over only 4 of them (D4-D7)
* Backlight Vcc
* Backlight GND

The minimal, write-only setup is:
* tie RW to ground
* connect RS, EN, D7, D6, D5, and D4 to digital outputs
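With that minimal wiring, each byte goes over D7-D4 as two nibbles, high nibble first, with a pulse on EN for each. A Python sketch that only computes the pin states, assuming the widespread HD44780-style controller (the text above doesn't name one) that is already initialized in 4-bit mode. The command values (0x01 for clear display, 0x80 OR'd with an address for 'set DDRAM address', the second line starting at 0x40 on typical 2-line modules) come from that controller family. A real driver would write these states to GPIO pins and wait the datasheet delays between them, which is not shown.

```python
from typing import List, Tuple

# One snapshot of the lines we drive: (RS, D7, D6, D5, D4, EN)
PinState = Tuple[int, int, int, int, int, int]

CLEAR_DISPLAY = 0x01    # instruction (RS=0)
SET_DDRAM_ADDR = 0x80   # instruction (RS=0), OR'd with the address

def nibble_states(nibble: int, rs: int) -> List[PinState]:
    """Put a nibble on D7-D4, then pulse EN; the controller latches on EN's falling edge."""
    d7, d6, d5, d4 = ((nibble >> bit) & 1 for bit in (3, 2, 1, 0))
    data = (rs, d7, d6, d5, d4)
    return [data + (0,), data + (1,), data + (0,)]

def byte_states(value: int, rs: int) -> List[PinState]:
    """4-bit mode: high nibble first, then low nibble."""
    return nibble_states(value >> 4, rs) + nibble_states(value & 0x0F, rs)

def cursor_command(col: int, row: int) -> int:
    """'Set DDRAM address' for a typical 2-line module (row 1 starts at 0x40)."""
    return SET_DDRAM_ADDR | (col + (0x00, 0x40)[row])

states = byte_states(ord("A"), rs=1)   # 'A' is 0x41 -> nibbles 0x4, then 0x1
for s in states:
    print(s)
print(hex(cursor_command(0, 1)))        # 0xc0
```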

====I2C and other====

<!--

Basically the above wrapped in a controller you can address via I2C or SPI (which then usually speaks that older parallel interface).

Sometimes this is an entirely separate board bolted onto the classical interface.

For DIY, you may prefer these just because there's less wiring hassle.

-->

===Matrix displays===

===(near-)monochrome===

====SSD1306====

OLED, 128x64, monochrome (1 bit per pixel)

https://cdn-shop.adafruit.com/datasheets/SSD1306.pdf
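The SSD1306 datasheet describes its display RAM as 128x64 bits split into 8 'pages' of 8 rows, where each byte is a vertical strip of 8 pixels with the least significant bit at the top. A minimal Python sketch of a framebuffer in that layout (the kind of buffer a library keeps in memory and then sends over I2C or SPI); the function names are made up for illustration, and sending the buffer is not shown.

```python
WIDTH, HEIGHT = 128, 64
PAGES = HEIGHT // 8          # 8 pages of 8 pixel rows each

def new_buffer() -> bytearray:
    """1 bit per pixel: 128 * 64 / 8 = 1024 bytes."""
    return bytearray(WIDTH * PAGES)

def set_pixel(buf: bytearray, x: int, y: int, on: bool = True) -> None:
    """Set or clear one pixel, assuming the page layout described above."""
    if not (0 <= x < WIDTH and 0 <= y < HEIGHT):
        return                       # clipping off-screen pixels is this sketch's choice
    index = (y // 8) * WIDTH + x     # which byte: page, then column
    mask = 1 << (y % 8)              # which bit: LSB is the page's top row
    if on:
        buf[index] |= mask
    else:
        buf[index] &= ~mask & 0xFF

buf = new_buffer()
set_pixel(buf, 0, 0)
set_pixel(buf, 5, 9)                 # page 1, bit 1
print(len(buf), buf[0], buf[WIDTH + 5])   # 1024 1 2
```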

====SH1107====

OLED

https://datasheetspdf.com/pdf-file/1481276/SINOWEALTH/SH1107/1

===Small LCD/TFTs / OLEDs===

{{stub}}

Small as in on the order of an inch or two (because the controllers are designed for a limited resolution?{{verify}}).

{{zzz|Note that, like with monitors, marketers really don't mind if you confuse LED-backlit LCD with OLED,
and some of the eBay and AliExpress sellers of the world will happily 'accidentally'
call any small screen OLED if it means they sell more.

This is made more confusing by the fact that there are
* few-color OLEDs (2 to 8 colors or so, great for high contrast but ''only'' high contrast),
* [[high color]] OLEDs (65K),
...so you sometimes need to dig into the tech specs to see the difference between high color LCD and high color OLED.
}}

<!--
[[Image:OLED.jpg|thumb|300px|right|Monochrome OLED]]
[[Image:OLED.jpg|thumb|300px|right|High color OLED]]
[[Image:Not OLED.jpg|thumb|400px|right|Not OLED (clearly backlit)]]
-->

When all pixels are off, OLEDs give zero light pollution (unlike most LCDs), which can be nice in the dark.

Small OLEDs seem to come in smaller sizes than small LCDs, so they are great as compact indicators.

'''Can it do video or not?'''

If it ''does'' speak e.g. MIPI, it's basically just a monitor, probably capable of decent-speed updates, but the things you ''can'' connect it to will also be moderately powerful (on the scale of microcontroller to mini-PC), e.g. a Raspberry Pi.

But the displays listed below don't take PC video connections.

Instead, they have their own controller that holds the pixel state one way or the other, and you talk to it through something more command-like, so you can update a moderate number of pixels via an interface that is much less fast or complex.

You might get reasonable results over SPI / I2C for e.g. basic user interfaces and gauges.

By the time you try to display video, you have to think about your design more,
largely because the number of pixels to update times the frames per second has to fit through the communication link (and within the display's capabilities).
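As a rough sketch of that arithmetic in Python: raw bits per frame is width x height x bits per pixel, and the best-case frame rate is the link's bit rate divided by that. The 40 MHz SPI clock and 400 kHz I2C rate below are example figures (check what your hardware actually does), and the `efficiency` factor is a hypothetical knob standing in for protocol overhead (command bytes, I2C addressing and acknowledge bits, gaps between transfers), which this sketch does not model in detail.

```python
def frame_bits(width: int, height: int, bits_per_pixel: int) -> int:
    """Raw pixel data for one full-screen update."""
    return width * height * bits_per_pixel

def required_bitrate(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Bits per second needed to push full frames at the given rate."""
    return frame_bits(width, height, bits_per_pixel) * fps

def max_fps(link_bps: float, width: int, height: int, bits_per_pixel: int,
            efficiency: float = 1.0) -> float:
    """Best-case full-frame rate; efficiency < 1 stands in for protocol overhead."""
    return link_bps * efficiency / frame_bits(width, height, bits_per_pixel)

# 240x320 at 16 bits per pixel, 30 fps
print(required_bitrate(240, 320, 16, 30) / 1e6)   # 36.864 (Mbit/s)
# ...versus an example 40 MHz SPI clock, ignoring all overhead
print(round(max_fps(40e6, 240, 320, 16), 1))      # 32.6
# 128x64 monochrome over 400 kHz I2C, ignoring all overhead
print(round(max_fps(400e3, 128, 64, 1), 1))       # 48.8
```

Updating only the part of the screen that changed reduces the required bit rate proportionally.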

There is a semi-standard parallel interface that might make video-speed things feasible.

This interface is faster than the SPI/I2C option, though not always ''that'' much, depending on hardware details.

Even if the specs of the screen can do it in theory, you also have to have the video ready to send.

If you're running it from an RP2040 or ESP32, don't expect to run libav/ffmpeg.

Say, something like the {{imagesearch|tinycircuits tinytv|TinyTV}} runs a 216x135 65K-color display from an [[RP2040]].

Also note that such hardware won't be decoding and rescaling arbitrary video files; it will use specifically pre-converted video.

In your choices, also consider libraries. Libraries like [https://github.com/Bodmer/TFT_eSPI TFT_eSPI] have a compatibility list you will care about.

====Interfaces====

{{stub}}

<!--
* 4-line SPI
* 3-line SPI ([[half duplex]], basically)
* I2C
* 6800-series parallel
* 8080-series parallel

The last two are 8-bit parallel interfaces. ''In theory'' these can be several times faster,
though in practice you may instead be limited by the display's controller
or by your own ability to push data out that fast, and the difference may not even be a factor of two
(and note that [[bit-banging]] that parallel bus may take a lot more CPU than dedicated SPI hardware would).

(The numbers aren't about capability; they seem to purely reference the Intel versus Motorola origins of their specs{{verify}}.)

They are apparently very similar, the main differences being in the read/write and enable lines, and in some timing.
: If they support both, 8080 seems preferable, in part because some only support that?{{verify}}

There are others that aren't quite ''generic'' high-speed monitor interfaces,
but are still too fast for slower hardware (e.g. CSI, MDDI).

https://forum.arduino.cc/t/is-arduino-6800-series-or-8080-series/201241/2
-->

====ST7735====

LCD, 132x162@16bits RGB
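'16 bits RGB' (the '65K colors' of other listings, since 2^16 = 65536) is usually the RGB565 format: 5 bits red, 6 green, 5 blue. A Python sketch of packing 8-bit-per-channel colors into that, assuming the controller takes each 16-bit value high byte first over SPI, which is typical for these controllers but worth checking in the datasheet and in your library.

```python
def rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit R, G, B into 16 bits: RRRRRGGG GGGBBBBB (low bits dropped)."""
    return ((r & 0xF8) << 8) | ((g & 0xFC) << 3) | (b >> 3)

def to_wire(color: int) -> bytes:
    """Two bytes, high byte first (assumption; check the controller's datasheet)."""
    return bytes([color >> 8, color & 0xFF])

for name, rgb in [("white", (255, 255, 255)), ("red", (255, 0, 0)),
                  ("green", (0, 255, 0)), ("blue", (0, 0, 255))]:
    print(name, hex(rgb565(*rgb)), to_wire(rgb565(*rgb)).hex())
```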

<!--
* SPI interface (or parallel)
* 396 source lines (so 132*RGB) and 162 gate lines
* display data RAM of 132 x 162 x 18 bits
* 2.7~3.3V {{verify}}

Boards that expose SPI will roughly have:
: GND: ground
: VCC: 3.3V-5.0V
: SCL: SPI clock line
: SDA: SPI data line
: RES: reset
: D/C: data/command selection
: CS: chip select
: BLK: backlight control (often can be left floating, presumably pulled up/down)

Lua / NodeMCU:
* [https://nodemcu.readthedocs.io/en/release/modules/ucg/ ucg]
* [https://nodemcu.readthedocs.io/en/release/modules/u8g2/ u8g2]
* https://github.com/AoiSaya/FlashAir-SlibST7735

Arduino libraries:
* https://github.com/adafruit/Adafruit-ST7735-Library
* https://github.com/adafruit/Adafruit-GFX-Library

These libraries may hardcode some of the pins (particularly the SPI ones), and this will vary between libraries.

'''ucg notes'''

Fonts that exist: https://github.com/marcelstoer/nodemcu-custom-build/issues/22

Fonts that you have: for k,v in pairs(ucg) do print(k,v) end

http://blog.unixbigot.id.au/2016/09/using-st7735-lcd-screen-with-nodemcu.html
-->

====ST7789====

LCD, 240x320@16bits RGB

https://www.waveshare.com/w/upload/a/ae/ST7789_Datasheet.pdf
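Controllers like this are driven with command bytes (D/C low) followed by parameter or pixel bytes (D/C high). As far as I know, the ST7735, ST7789 and ILI9341 all use the MIPI DCS-style commands CASET (0x2A), RASET (0x2B) and RAMWR (0x2C) to set a rectangular window and then stream pixels into it; check the datasheet for your exact chip. A Python sketch that only builds the byte sequence, with a (dc, bytes) tuple format made up for illustration; actually sending it (SPI writes while toggling D/C and CS) is left to your platform or library.

```python
CASET, RASET, RAMWR = 0x2A, 0x2B, 0x2C   # column set, row set, memory write

def be16(value: int) -> list:
    """16-bit value as [high byte, low byte]."""
    return [value >> 8, value & 0xFF]

def window_sequence(x0: int, y0: int, x1: int, y1: int, pixels: bytes) -> list:
    """(dc, bytes) pairs: dc=0 means command, dc=1 means data.

    x1 and y1 are inclusive end coordinates, which is how these commands count.
    """
    return [
        (0, [CASET]), (1, be16(x0) + be16(x1)),
        (0, [RASET]), (1, be16(y0) + be16(y1)),
        (0, [RAMWR]), (1, list(pixels)),
    ]

# A full 240x320 window, here followed by just one example RGB565 pixel
for dc, data in window_sequence(0, 0, 239, 319, b"\xf8\x00"):
    print(dc, [hex(b) for b in data])
```

Small ST7735 modules often need a fixed column/row offset added to these coordinates, because the visible panel is smaller than the controller's 132x162 RAM; libraries usually handle that per board variant.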

====SSD1331====

OLED, 96x64@16bits RGB

https://cdn-shop.adafruit.com/datasheets/SSD1331_1.2.pdf

====SSD1309====

OLED, 128x64, single color?

https://www.hpinfotech.ro/SSD1309.pdf

====SSD1351====

OLED, 65K color

https://newhavendisplay.com/content/app_notes/SSD1351.pdf

====HX8352C====

LCD


<!--
240(RGB)x480, 16-bit
-->

https://www.ramtex.dk/display-controller-driver/rgb/hx8352.htm

====HX8357C====

====R61581====

<!--
240x320
-->

====ILI9163====

LCD, 162x132@16-bit RGB

http://www.hpinfotech.ro/ILI9163.pdf

====ILI9341====

<!--
240RGBx320, 16-bit
-->

https://cdn-shop.adafruit.com/datasheets/ILI9341.pdf

====ILI9486====

LCD, 480x320@16-bit RGB

https://www.hpinfotech.ro/ILI9486.pdf

====ILI9488====

LCD

<!--
320(RGB) x 480
-->

https://www.hpinfotech.ro/ILI9488.pdf

====PCF8833====

LCD, 132x132@16-bit RGB

https://www.olimex.com/Products/Modules/LCD/MOD-LCD6610/resources/PCF8833.pdf

====SEPS225====

LCD

https://vfdclock.jimdofree.com/app/download/7279155568/SEPS225.pdf

====RM68140====

LCD

<!--
320 RGB x 480
-->

https://www.melt.com.ru/docs/RM68140_datasheet_V0.3_20120605.pdf

====GC9A01====

LCD, 65K colors, SPI

Seems to often be used on round displays{{verify}}

https://www.buydisplay.com/download/ic/GC9A01A.pdf

[[Category:Computer]]

[[Category:Hardware]]

===Epaper===

====SSD1619====

https://cursedhardware.github.io/epd-driver-ic/SSD1619A.pdf

<!--
====UC8151====

https://www.orientdisplay.com/wp-content/uploads/2022/09/UC8151C.pdf
-->

=Many-element - TV and monitor notes (and a little film)=

==Backlit flat-panel displays==

<!--
We may call them LCD, but that was an early generation.

LCD and TFT and various other acronyms are all the same idea, with different refinements on how exactly the pixels work.

There are roughly two parts of such monitors you can care about: how the backlight works, and how the pixels work.

But almost all of them come down to:
* pixels that block more or less light
* bright lights put behind those
: in practice, they are on the side, and there is some trickery to try to reflect that as uniformly as possible

There are a lot of acronyms, pointing to different aspects:
: TN and IPS are more about the crystals (and you mostly care about that if you care about viewing angle),
: TFT is more about the electronics, but the two aren't really separable,
: and then there are a lot of experiments (with their own acronyms) that

https://en.wikipedia.org/wiki/TFT_LCD

TFT, UFB, TFD, STN
-->

===CCFL or LED backlight===

<!--
Both refer to a global backlight.

It's only self-lit things like OLED that do without.

CCFLs, Cold-Cathode Fluorescent Lamps, are a variant of [[fluorescent lighting]] that (surprise) runs a lot colder than some other designs.

CCFL backlights tend to pulse at 100+ Hz{{verify}}, though because they necessarily use phosphors, and those can easily be made slow, it may be a ''relatively'' steady pulsing.

They are also high-voltage devices.

LED backlights are often either
* [[PWM]]'d at kHz speeds{{verify}}, or
* current-limited{{verify}},
both of which are smoother.
-->

https://nl.wikipedia.org/wiki/CCFL

==Self-lit==

===OLED===

{{stub}}

While OLED is also a thing in lighting, OLED ''usually'' comes up in the context of OLED displays.

It is mainly contrasted with backlit displays (because it is hard to get those to block all light).

OLED pixels that are off just emit no light at all. So the blacks are blacker, and you could go brighter at the same time.

There are some other technical details why they tend to look a little crisper.

Viewing angles are also better, ''roughly'' because the light source is closer to the surface.

OLEDs are organic LEDs, which in itself is partly just a practical production detail; they are really just LEDs.

{{comment|(...though you can get fancy in the production process, e.g. pricy see-through displays are often OLED with substrate trickery{{verify}})}}

PMOLED versus AMOLED makes no difference to the light emission,
just to the way we address the pixels (Passive Matrix, Active Matrix).

AMOLED can have somewhat lower power, higher speed, and more options along that scale{{verify}},
all of which makes them interesting for mobile uses. It also scales better to larger monitors.

POLED (and confusingly, pOLED is a trademark) uses a polymer instead of glass,
so is less likely to break, but has other potential issues.

<!--
'''Confusion'''

"Isn't LED screen the same as OLED?"

No.

Marketers will be happy if you confuse "we used a LED backlight instead of a CCFL" (which we've been doing for ''ages'')
with "one of those new hip crisp OLED thingies", while not technically lying,
so they may be fuzzy about what they mean by "LED display".

You'll know when you have an OLED monitor, because it will cost ten times as much: a thousand USD/EUR, more at TV sizes.

The cost-benefit for people without a bunch of disposable income isn't really there.

"I heard all phones use OLED now?"

Fancier, pricier ones do, yes.

Cheaper ones do not, because the display alone might cost on the order of a hundred bucks.{{verify}}
-->

===QLED===

<!--
It's quantum, so it's buzzword compatible. How is it quantum? Who knows!

It may surprise you that this is LCD-style, not OLED-style,
but it is brighter than most LCD-style displays,
and they're still working on details like decent contrast.

Quantum Dot LCD https://en.wikipedia.org/wiki/Quantum_dot_display
-->

==On image persistence / burn-in==

<!--
CRTs continuously illuminating the same pixels would somewhat-literally cook their phosphors a little,
leading to fairly-literal image burn-in.

Other displays will have similar effects, but it may not be ''literal'' burn-in, so we're calling it image persistence or image retention now.

'''LCD and TFT''' have no ''literal'' burn-in, but the crystals may still settle into a preferred state.
: there is limited alleviation for this

'''Plasma''' still has burn-in.

'''OLED''' seems to as well, though it's subtler.

Liquid crystals (LCD, TFT, etc.) have a persisting-image effect because
of the behaviour of liquid crystals when held in the same state ''almost always''.

You can roughly describe this as having a preferred state they won't easily relax out of, but there are a few distinct causes, different sensitivity to this in different types of panels, and different potential fixes.

Also, last time I checked this wasn't ''thoroughly'' studied.

Unplugging power (/ turning it off) for hours (or days, or sometimes even seconds) may help, and may not.

A screensaver with white, or strong moving colors, or noise, may help.

There are TVs that do something like this, like jostling the entire image over time, doing a blink at startup and/or periodically, or scanning a single dot with black and white (you probably won't notice).

https://en.wikipedia.org/wiki/Image_persistence

http://www.jscreenfix.com/

http://gribble.org/lcdfix/

{{search|statictv screensaver}}
-->

==VFD==

<gallery mode="packed" style="float:right" heights="200px">
VFD.jpg|larger segments
VFD-dots.jpg|dot matrix VFD
</gallery>

[[Vacuum Fluorescent Display]]s are vacuum tubes applied in a specific way; see [[Lightbulb_notes#VFDs]] for more details.

<br style="clear:both"/>

<!--

==Capabilities==

===Resolution===

A TFT screen has a fixed number of pixels, and therefore a native resolution. Lower resolutions (and sometimes higher ones) can be displayed, but are interpolated, so they will not be as sharp. Most people use the native resolution.

This may also be important for gamers, who may not want to be forced to a higher resolution (for crispness) than their graphics card can handle in terms of speed.

For:
* 17": 1280x1024 is usual (1280x768 for widescreen)
* 19": 1280x1024 (1440x900 for widescreen)
* 20": 1600x1200 (1680x1050 for widescreen)
* 21": likely 1600x1200 (1920x1200 for widescreen)

Note that some screens are 4:3 (the classic computer and TV ratio), some 5:4 (e.g. 1280x1024), some 16:9 or 16:10 (widescreen), but often not ''exactly'' that, pixelwise; many opt for some multiple that is easier to handle digitally.

===Refresh===

Refresh rates as they existed in CRT monitors do not directly apply; there is no line scanning going on anymore.

Pixels are continuously lit, which is why TFTs don't seem to flicker like CRTs do. Still, they react only so fast to changes in the intensity they should display, which limits the number of pixel changes you will actually see per second.

Longer response times mean moving images are blurred, and you may see ghosting of bright images. Older TFT/LCDs did something on the order of 20ms (roughly 50fps), which is not really acceptable for gaming.

However, the millisecond measure is nontrivial. The direct meaning of the number has been slaughtered, primarily by number-boast-happy PR departments.

The number

More exactly, there are various things you can be measuring. It's a little like speaker ratings (watt RMS, watt 'in regular use', PMPO) in that a rating may refer to unrealistic exaggerations as well as to strict and real measures.

The argument is that even when a pixel may take 20ms to go from fully off to fully on, not everyone is using their monitor to induce epileptic attacks; usually the pixel is done faster, going from some grey to some other grey. If you play the DOOM3 dark-room-fest, you may well see the change from that dark green to that dark blue happen in 8ms (not that that's in any way easy to measure).

But a game with sharp contrasts may see slower, somewhat blurry changes.

8ms is fairly usual these days. Pricier screens will do 4ms or even 2ms, which is nicer for gaming.

===Video noise===

===Contrast===

The ratio between the weakest and strongest brightness it can display. 350:1 is somewhat minimal, 400:1 and 500:1 are fairly usual, 600:1 and 800:1 are nice and crisp.

===Brightness===

The amount of light emitted, basically the strength of the backlight. Not horribly interesting unless you like it to be bright even in a well-lit room.

300 cd/m2 is fairly usual.

There are details like brightness uniformity: in some monitors, the edges are noticeably darker when the screen is bright, which may be annoying. Some monitors have stranger shapes to their lighting.

Only reviews will reveal this.

===Color reproduction===

The range of colors a monitor can reproduce is interesting for photography buffs. The curve of how each color is reproduced is also a little different for every monitor, and for some may be noticeably different from others.

This becomes relevant when you want a two-monitor deal; it may be hard to get a CRT and a TFT to show the same color, just as it may be hard to get two different TFTs from the same manufacturer consistent. If you want perfection in that respect, get two of the same, though spending a while twiddling with per-channel gamma correction will usually get decent results.

==Convenience==

===Viewing angle===

The viewing angle is a slightly magical figure. It's probably well defined in a test, but its meaning is a little elusive.

Basically it indicates at which angle the discoloration starts being noticeable. Note that the brightness is almost immediately a little off, so no TFT is brilliant for showing photos to the whole room. The viewing angle is mostly interesting for those that have occasional over-the-shoulder watchers, or rather watchers from other chairs and such.

The angle is given either from a perpendicular line (e.g. 75°) or as a total angle (e.g. 150°).

As noted, the figure is a little magical. If it says 178° the colors will be as good as they'll be from any angle, but frankly, for lone home use, even the smallest angle you can find tends to be perfectly fine.

===Reflectivity===

While there is no formal measure for this, you may want to look at getting something that isn't reflective. If you're in an office near a window, this is probably about as important to easily seeing your screen as its brightness is.

It seems that many glare filters will reduce your color fidelity, though.

-->

==Some theory - on reproduction==

====Reproduction that flashes====

{{stub}}

'''Mechanical film projectors''' flash individual film frames while that film is being held entirely still, then advance the film to the next frame (while no light is coming out) and repeat.

(See e.g. [https://www.youtube.com/watch?v=CsounOrVR7Q this], and note that the film is advanced so quickly that you don't even see it move. Separately, if you slow playback down, you can also see that it flashes ''twice'' before it advances the film; we'll get to why.)

This requires a shutter, i.e. not letting through ''any'' light for a moderate part of the time (specifically while it's advancing the film).

We are counting on our eyes to sort of ignore that.

One significant design concept very relevant to this type of reproduction is the [https://en.wikipedia.org/wiki/Flicker_fusion_threshold '''flicker fusion threshold'''], the "frequency at which intermittent light stimulus appears to be steady light" to our eyes, because separately from the actual image being shown, having it appear steady is, you know, nice.

Research shows that this varies somewhat with conditions, but in most practical conditions for showing people images, it's somewhere between 50Hz and 90Hz.

Since people are sensitive to flicker to varying degrees, and flicker can lead to eyestrain and headaches,
we aim towards the high end of that range whenever that is not hard to do.

In fact, we did so even with film. While film is 24fps and was initially shown as 24Hz flashes, movie projectors soon introduced two-blade and then three-blade shutters, showing each image two or three times before advancing. So while they still only show 24 distinct images per second, they flash each one two or three times, for a ''regular'' 48Hz or 72Hz flicker.

No more detail, but a bunch less eyestrain.
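The projector arithmetic above, as a tiny Python sketch: the number of distinct images stays at 24 per second, while the flash rate is that times the number of flashes per frame (one per shutter blade). The 60Hz threshold in the example is just one value picked from the 50Hz to 90Hz range mentioned above, not a standard.

```python
def flash_rate_hz(frames_per_second: int, flashes_per_frame: int) -> int:
    """How often light actually reaches the screen per second."""
    return frames_per_second * flashes_per_frame

def above_threshold(rate_hz: float, threshold_hz: float) -> bool:
    """Whether a flash rate is at or above a chosen flicker fusion threshold."""
    return rate_hz >= threshold_hz

FPS = 24
THRESHOLD = 60   # example value from the 50-90Hz range, not a standard
for blades in (1, 2, 3):
    rate = flash_rate_hz(FPS, blades)
    print(blades, rate, above_threshold(rate, THRESHOLD))
# 1 24 False
# 2 48 False
# 3 72 True
```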

As to what is actually being shown, an arguably even more basic constraint is the rate of new images that we accept as '''fluid movement'''.
: Anything under 10fps looks jerky and stilted
:: or at least like a ''choice''.
:: western ''and'' eastern animation was rarely higher than 12fps, or 8 or 6 for the simpler/cheaper productions
: around 20fps we start readily accepting it as continuous movement,
: above 30 or 40fps it looks smooth,
: and above that it keeps on looking a little better yet, with quickly diminishing returns

'''So why 24?'''

Film's 24 was not universal at the time, and has no strong significance then or now.

It's just that when a standard was needed, 24 was a chosen balance between various aspects: it's enough for fluid movement and relatively few scenes need higher, film stock is expensive, and there needed to be a standard for projection (adaptable or even multiple projectors would be too expensive for most cinemas).

The reason we ''still'' use 24fps ''today'' is more multifaceted, and doesn't really have a one-sentence answer.

But part of it is that making movies go faster is not always well received.

It seems that we came to associate 24fps with the feel of movies, while 50/60fps feels like shaky-cam home movies made by dad's camcorder (when those were still a thing), or like sports broadcasts (which used those rates even though it reduced detail), with their tense, immediate, real-world associations.

So higher framerates, while technically better, also became associated with a specific aesthetic. That may work well for action movies, yet less so for others.

There is an argument that 24fps's sluggishness puts us more at ease, reminds us that it isn't real, and seems associated with storytelling, a dreamlike state, memory recall.

Even if we can't put our finger on why, such senses persist.

<!--

'''When more both is and isn't better'''

And you can argue that cinematic language evolved not only with the technical limitations, but also with the limitations of how much new information you can show at all.

In some ways, 24fps feels like a subtly stylized type of video from the framerate alone,
and most directors will like this because most movies benefit from that.

Exceptions include some fast-paced movies, but even they benefit from feeling more distinct from ''other'' movies still doing that stylized thing.

At the same time, a lot of this seems like a learned association that will blur and may go away over time.

Some changes will make things ''better'' (consider that the [[3-2 pulldown]] necessary to put 24fps movies on TV in 60Hz countries made pans look ''worse'').

You can get away with even less in certain aesthetic styles: simpler cartoons may update at 8fps or 6fps, and we're conditioned enough that in that cartoon context, 12fps looks ''fancy''. But more is generally taken to be worse. It may be objectively smoother, but there are so many animation choices (comparable to cinematic language) that you basically ''have'' to throw out at high framerates, or accept that it will look ''really'' jarring when it switches between them. It ''cannot'' look like classical animation/anime, for better ''and'' worse. And that's assuming it was ''made'' this way. Automatic resolution upscaling usually implies filters, and those are effectively a stylistic change that was not intended and that you can barely control. Automatic frame interpolation generally does ''terribly'' on anime because it was trained on photographic images instead. The more stylized the animation, the worse it will look interpolated, never mind that it will deal poorly with intentional animation effects like [https://en.wikipedia.org/wiki/Squash_and_stretch stretch and squash] and in particular [https://en.wikipedia.org/wiki/Smear_frame smear frames].

-->

====CRT screens====

{{stub}}

'''Also flashing'''

CRT monitors do something ''vaguely'' similar to movie projectors, in that they light up an image so-many times a second.

But where with film you light up the entire frame at once {{comment|(maybe with some time with the shutter coming in and out, ignore that for now)}},
a CRT lights up one spot at a time: there is a beam constantly being dragged line by line across the screen (look at [https://youtu.be/3BJU2drrtCM?t=137 slow motion footage like this]).

The phosphor will have a softish onset and retain light for a while,
and while slow motion tends to exaggerate that a little (it looks like a single line),
it's still visible for much less than 1/60th of a second.

The largest reason that these pulsing phosphors don't look like harsh blinking is our persistence of vision
(you could say our eyes' framerate sucks, though that is a poor description of our eyes' actual mechanics), combined with the fact that it's relatively bright.

<!--

Analog TVs were almost always 50Hz or 60Hz (depending on country).

(Separately, broadcast would often only show 25 or 30 new image frames per second, depending on the type of content; read up on [[interlacing]] and [[three-two pull down]].)

Most CRT ''monitors'', unlike TVs, can be told to refresh at different rates.

There's a classical 60Hz mode that was easy to support, but people often preferred 72Hz or 75Hz or 85Hz or higher modes because they reduced eyestrain.

And yes, moving from one of those faster-rate monitors to a 60Hz monitor was ''really'' noticeable.

Because even when we accept it as smooth enough, it still blinked, and we still perceive it as such.

'''How do pixels get sent?'''

-->

====Flatscreens==== | |||

{{stub}} | |||

Flatscreens do not reproduce an image by blinking it at us.

While in film and in CRTs the mechanism that lights up the screen is the same mechanism as the one that shows you the image,

in LCD-style flatscreens, the image updates and the lighting are different mechanisms.

Basically, there's one overall light behind the pixely part of the screen, and each screen pixel blocks some amount of that light.

That global backlight tends to be lit ''fairly'' continuously.

Sure, there is variation in backlights, and some will still give you a little more eye strain than others.

CCFL backlight phosphors seem intentionally made to decay slowly,

so even if the panel is a mere 100Hz, that CCFL ''ought'' to look much less blinky than e.g. a CRT at 100Hz.

LED backlights are often [[PWM]]'d at kHz speeds{{verify}}, or current-limited{{verify}}, which are both smoother.

Even with a high speed camera you may not see the backlight flicker (see [https://youtu.be/3BJU2drrtCM?t=267 this part of the same slow motion video] {{comment|(note how the backlight appears constant even when the pixel update is crawling by)}}) until you get really fast and specific.

So the difference between, say, a 60fps and a 240fps monitor isn't in the lighting; it's how fast the light-blocking pixels in front of that constant backlight change.

A 60fps monitor changes its pixels every ~16.7ms (1/60 sec), a 240fps one every ~4.2ms (1/240 sec). The light just stays on.
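The per-frame interval is just the reciprocal of the rate. A trivial sketch (the function name is made up for illustration):

```python
def frame_interval_ms(rate_hz: float) -> float:
    """Time between successive pixel updates at a given refresh rate, in milliseconds."""
    return 1000.0 / rate_hz

for hz in (30, 60, 120, 240):
    print(f"{hz:>3} Hz: a new frame every {frame_interval_ms(hz):.2f} ms")
```

On a flatscreen this is only how often the pixels get new values; the backlight stays on regardless.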

As such, while a CRT at 30Hz would look very blinky and be hard on the eyes,

a flatscreen at 30fps updates looks choppy, but doesn't cause blinky eyestrain.

<!--

'''Footnotes to backlight'''

Note also that dimming a PWM'd backlight screen will effectively change the flicker a little.

At high PWM speeds this should not matter perceptibly, though.

On regular screens the dimming is usually fixed; laptop screens may change it based on battery status as well as ambient light.

There are a few other reasons why both can flicker a little more than that suggests, but only mildly so.

People who are more sensitive to eyestrain and related headaches will want to know the details of the backlight, because the slowest LED PWM will still annoy you, and you're looking for faster PWM or current-limited backlights.

But this is often not specced at all, so whether any particular monitor is better or worse for eyestrain is hard to tell in advance.

-->

=====On updating pixels=====

<!--

To recap, '''in TVs and CRT monitors''', there is a narrow stream of electrons steered across the screen, making small bits of phosphor glow, one line at a time {{comment|(the line nature is not the only way to use a CRT, see e.g. [https://en.wikipedia.org/wiki/Vector_monitor vector monitors], and CRT oscilloscopes are also interesting, but it's a good way to do a generic display)}}.

So yes, a TV or CRT monitor is essentially updated one pixel at a time, which happens so fast you would need a ''very'' fast camera

to notice it; see e.g. [https://youtu.be/3BJU2drrtCM?t=52].

This means that there needs to be something that controls the left-to-right and top-to-bottom steering[https://youtu.be/l4UgZBs7ZGo?t=307]. And because you're really just bending the beam back and forth, there are also times at which that bending shouldn't be visible. That is solved by just not emitting electrons during those times, which are called the blanking intervals. Without a horizontal blanking interval between lines, you would see nearly-horizontal lines as the beam gets dragged back for the next line; without a vertical blanking interval between frames, you would see a diagonal line while it gets dragged back to the start of the next frame.

In CRT monitors, '''hsync''' and '''vsync''' are the names of the signals that help that movement happen (they do not control it directly).

CRTs were driven relatively directly from the graphics card, in the sense that the value beamed onto a pixel is the value that is on the wire ''at that very moment''.

It would be hard and expensive to do any buffering, and there would be no reason to (the phosphor's short-term persistence is a buffer of sorts).

So there needs to be a precisely timed stream of pixel data that is passed to the phosphors,

and you spend most of the interval drawing pixels (all of it minus the blanking parts).}}
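To put numbers on "most of the interval is spent drawing pixels", here is a sketch using the commonly published 640x480 @ 60Hz VGA timing. That timing has 800 pixel clocks per line (640 visible plus horizontal blanking and hsync), 525 lines per frame (480 visible plus vertical blanking and vsync), and a 25.175MHz pixel clock. The class and its names are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class VideoTiming:
    """One display mode: visible area plus blanking, given as totals per line and per frame."""
    active_w: int          # visible pixels per line
    total_w: int           # pixel clocks per line, including horizontal blanking and hsync
    active_h: int          # visible lines per frame
    total_h: int           # lines per frame, including vertical blanking and vsync
    pixel_clock_hz: float  # how many pixel values go out on the wire per second

    def refresh_hz(self) -> float:
        # one frame takes total_w * total_h pixel clocks
        return self.pixel_clock_hz / (self.total_w * self.total_h)

    def line_time_us(self) -> float:
        # time for one full line, blanking included
        return self.total_w / self.pixel_clock_hz * 1e6

    def active_fraction(self) -> float:
        # share of the frame time during which visible pixel values are on the wire
        return (self.active_w * self.active_h) / (self.total_w * self.total_h)

vga = VideoTiming(640, 800, 480, 525, 25.175e6)
print(f"refresh {vga.refresh_hz():.2f} Hz")
print(f"line    {vga.line_time_us():.2f} us")
print(f"active  {vga.active_fraction():.0%} of the time")
```

With these numbers the refresh comes out at about 59.94Hz rather than exactly 60. Roughly 73% of the time is spent sending visible pixels; the rest is blanking.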

'''How are CRT monitors different from CRT TVs?'''

In TVs, redraw speeds were basically set in stone, as were some decoding details.

The drawing was still synchronized from the signal, but the speed was basically fixed, as that made things easier.

Color TV added some extra details, but a good deal worked the same way.

Early game consoles/computers just generated a TV signal, so that you could use the TV you already had.

After that, CRT monitors started out as adapted CRT TVs.

Yet monitors were not tied to the broadcast format,

so it didn't take long at all before the speed at which things are drawn was configurable.

By the nineties it wasn't too unusual to drive a CRT monitor at 56, 60, 72, 75, perhaps 85, and sometimes 90{{verify}}, 100, or 120Hz.

We also grew an increasing number of resolutions that the monitor should be capable of displaying.

Or rather, resolution-refresh combinations. Detecting and dealing with those is a topic in and of itself.

Yet at the CRT level, they were all driven much the same way:

synchronization timing to let the monitor know when and how fast to sweep the beams around,

and a stream of pixels passed through as they arrive on the wires.

So a bunch of the TV mechanism lived on into CRT monitors, and even into the flatscreen era.

That means that at, say, 60fps, roughly 16.7 milliseconds per frame,

''most'' of those 16.7ms are spent moving values onto the wire and onto the screen.
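A rough sketch of what that means per line. If lines go out at a constant rate, the time at which a given row is sent is just its share of the frame time. This assumes the visible rows come first and the blanking sits at the end, which is a simplification. The 1125-total-lines figure is the common 1080p60 signal timing, used here only as an example, and the function name is hypothetical.

```python
def line_start_ms(row: int, total_lines: int, refresh_hz: float) -> float:
    """Approximate time after the start of a frame at which visible row `row` is sent.
    Assumes lines go out at a constant rate and visible rows come before the blanking."""
    frame_ms = 1000.0 / refresh_hz
    return row / total_lines * frame_ms

# 1080 visible lines out of 1125 total lines per frame
for row in (0, 540, 1079):
    print(row, round(line_start_ms(row, 1125, 60.0), 2))
```

So the bottom of the screen gets its new values most of a frame interval after the top. That is the same order of magnitude as the ~14ms top-to-bottom figure measured further down this page, though a real panel need not follow the signal timing exactly.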

'''How are flatscreens different from CRTs?'''

The physical means of display is completely different.

There is a constant backlight, and from the point of view of a single LCD pixel,

the crystal's blocking-or-not state will sit around until asked to change.

And yet the sending-pixels part is still much the same.

Consider that a ''single'' frame is millions of numbers (e.g. 1920 * 1080 * 3 colors ~= 6 million).

For PCs with color this was never much under a million.

Regardless of how many colors,

actually transferring that many individual values will take some time.
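The arithmetic behind "millions of numbers", as a small sketch. It counts only visible color values, with no blanking and no link encoding, and the function name is made up for illustration.

```python
def values_per_second(width: int, height: int, channels: int, refresh_hz: int) -> int:
    """Individual color values to move per second for uncompressed video (no blanking counted)."""
    return width * height * channels * refresh_hz

per_frame = 1920 * 1080 * 3
print(f"{per_frame:,} values per frame")   # 6,220,800 values per frame
print(f"{values_per_second(1920, 1080, 3, 60):,} values per second at 60Hz")
```

At 8 bits per value that is roughly 3 Gbit/s of pixel data alone, before any blanking or encoding overhead.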

The hsync and vsync signals still exist,

though LCDs are often a little more forgiving, apparently keeping a short memory and allowing some slack in syncing [https://youtu.be/muuhgrige5Q?t=425].

Does a monitor have a framebuffer and then update everything at once?

It ''could'' be designed that way if there were a point, but there rarely is.

It would only make things more expensive for no reason.

When the only thing we are required to do is to finish drawing one image before the next starts,

then we can spend most of that time sending pixels,

and the screen, while it has more flexibility in ''how'' exactly, can spend most of the refresh interval updating pixels.

...basically as in the CRT days.

And when we were using VGA on flatscreens, that was what we were doing.

Panels even tend to update a line at a time.

Why?

: the ability to update arbitrary individual pixels would require a lot more wiring; per-line addressing is already a good amount of wires (there is a similar tradeoff in camera image sensor readout, but in the other direction)

: LCDs need to be refreshed somewhat like DRAM: liquid crystal doesn't like being held at a constant voltage, so monitors apply the pixel voltage in alternating polarities each frame. (This is not something you really need to worry about; [http://www.techmind.org/lcd/index.html#inversion it needs an ''extremely'' specific image to see].)

Scanout itself basically refers to the readout and transfer of the framebuffer on the PC side.

'''Scanout lag''' can refer to

: the per-line update

: the lag in pixel change, GtG stuff

You can find some high speed footage of a monitor updating, e.g. [https://blurbusters.com/understanding-display-scanout-lag-with-high-speed-video here],

which illustrates terms like [[GtG]] (and why they both are and are not a sales trick):

Even if a line's values are updated in well under a millisecond, the pixels may need ~5ms to settle on their new color,

and seen at high speeds, this looks like a range of the screen is a blur between the old and new image.
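A back-of-the-envelope sketch of how wide that blurred band is. It assumes a linear top-to-bottom sweep and one fixed settle time for every pixel; real transitions are curves and depend on the colors involved. The sweep time of about 14ms is the figure measured further down this page for one 60Hz monitor, and the function name is made up for illustration.

```python
def transition_band_fraction(settle_ms: float, sweep_ms: float) -> float:
    """Fraction of the screen height that is still mid-transition at one instant, assuming rows
    are updated in a linear top-to-bottom sweep taking `sweep_ms` and each pixel needs
    `settle_ms` to reach its new value. Capped at the whole screen."""
    return min(1.0, settle_ms / sweep_ms)

# the ~5ms settle figure from above, and a ~14ms top-to-bottom sweep
print(f"{transition_band_fraction(5, 14):.0%} of the screen height is in transition")
```

Under those assumptions, about a third of the screen height is somewhere between the old and new image at any moment during the sweep.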

'''Would it not be technically possible to update all these millions of pixels at the same time?'''

In theory yes, but even if that didn't imply an insane amount of wires (it does), it may not be worth it.

Transferring millions of numbers takes time, meaning that to update everything at once you need to wait until you've stored all of it,

and you've gained nothing in terms of speed.

You're arguably losing speed, because instead of updating lines as the data comes in, you're choosing to wait until you have it all.

You would also need a link fast enough to move a whole frame several times faster than the refresh interval, which then sits idle most of the time.

That too is possible, but would only drive up cost.

The per-line tradeoff described above makes much more sense, for multiple reasons.

Do things ''more or less'' live,

but do them roughly one line at a time. That way we can do it ''sooner'',

it requires less storage,

and we can spread it over dozens to hundreds of lines to the actual panel.
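A sketch of the "sooner" part. It assumes a frame's data arrives evenly over `transfer_ms`, and it optimistically assumes that a fully buffered frame could be applied to the whole panel instantly. The function name is hypothetical.

```python
def row_ready_ms(row: int, rows: int, transfer_ms: float, buffer_whole_frame: bool) -> float:
    """When a row can be shown, given the frame's data arrives evenly over `transfer_ms`.
    Per-line: as soon as that row's data is in. Whole frame: only once everything is in."""
    arrived = (row + 1) / rows * transfer_ms   # time the last value of this row has arrived
    return transfer_ms if buffer_whole_frame else arrived

rows, transfer = 1080, 16.0
per_line = [row_ready_ms(r, rows, transfer, False) for r in range(rows)]
whole = [row_ready_ms(r, rows, transfer, True) for r in range(rows)]
print(f"per-line:    average row shown after {sum(per_line) / rows:.2f} ms, storage ~1 line")
print(f"whole frame: average row shown after {sum(whole) / rows:.2f} ms, storage ~{rows} lines")
```

The last row is shown at the same time either way. Every other row is shown earlier with per-line updates, and the storage needed is a line instead of a frame.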

'''If you can address lines at a time, could you do partial updates this way?'''

Yes. And that exists.

But it turns out there are few cases where this tradeoff is actually useful.

And now you also need some way to keep track of what parts (not) to update,

which tends to mean either a very simple UI, or an extra framebuffer.

e.g. Memory LCD is somewhat like e-paper, but it's 1-bit, and mostly gets used in status displays{{verify}}.

Around games and TVs it is rare that less than the whole screen changes.

Some browser/office things are mostly static, except when you scroll.

}}
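A sketch of the "extra framebuffer" way of tracking what to update: keep the previous frame and compare it row by row. This is a hypothetical illustration; real partial-update displays and their protocols differ in the details.

```python
def changed_rows(prev: list[list[int]], curr: list[list[int]]) -> list[int]:
    """Indices of rows that differ between two framebuffers (lists of rows of pixel values).
    Having `prev` around at all is the extra-framebuffer cost."""
    return [y for y, (a, b) in enumerate(zip(prev, curr)) if a != b]

prev = [[0] * 8 for _ in range(6)]
curr = [row[:] for row in prev]
curr[2][5] = 1   # e.g. a blinking text cursor two rows tall
curr[3][5] = 1
print(changed_rows(prev, curr))   # [2, 3]
```

Only rows 2 and 3 would need to be sent. The comparison itself and the stored copy are the overhead that is only worth it when most of the screen is static.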

''' 'What to draw' influenced this development too'''

When the earliest gaming consoles in particular were made, RAM was expensive.

A graphics chip (and sometimes just the CPU) could draw something as RAM-light as sprites, if it was aware of which position on the screen it was drawing right now.

It has to be said there were some ''very'' clever ways those were abused over time, but at the same time, this was also why the earliest gaming had limits like 'how many sprites could be on screen at once, and maybe not on the same horizontal lines without things going weird'.

PCs in particular soon switched to framebuffers, meaning "a screenful of pixels on the PC side that you draw into", and the graphics card got dedicated hardware for sending that onto a wire (a [https://en.wikipedia.org/wiki/RAMDAC RAMDAC], basically 'hardware that you point at a framebuffer and that spits out voltages, one for each pixel color at a time'). This meant we could draw anything, meant we had more time to do the actual drawing, and made higher resolutions a little more practical (if initially still limited by RAM cost). In fact, VGA as a connector carries very little more than hsync, vsync, and "the current pixel's r,g,b", mostly just handled by the RAMDAC.

======Screen tearing and vsync======

'''When to draw'''

So, the graphics card has the job of sending 'start new frame' and the stream of pixels.

You ''could'' just draw into the framebuffer whenever,

but if that bears no relation to when the graphics card sends new frames,

it would happen ''very easily'' that you are drawing into the framebuffer while the video card is sending it to the screen,

and it would show a half-drawn image.

While in theory a program could learn when it is safe to draw (between frames), it turns out that's relatively little of all the time you have.

One of the simplest ways around that is '''double buffering''':

: have one image that the graphics card is currently showing,

: have one hidden next one that you are drawing to,

: tell the graphics card to switch to the other when you are done.

This also means the program doesn't really need to learn this hardware schedule at all:

whenever you are done drawing, you tell the graphics card to flip to the other one.
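A minimal model of the double buffering described above. It is a toy: real graphics APIs do the flip in hardware or the driver, and the class and method names here are made up for illustration.

```python
class DoubleBuffer:
    """The 'display' only ever reads the front buffer; the program only ever draws into the back buffer."""

    def __init__(self, size: int):
        self.front = [0] * size   # what scanout reads from
        self.back = [0] * size    # what the program draws into

    def draw(self, value: int) -> None:
        # drawing happens entirely in the hidden buffer, however long it takes
        for i in range(len(self.back)):
            self.back[i] = value

    def flip(self) -> None:
        # swapping which buffer is which is cheap: no pixel data is copied
        self.front, self.back = self.back, self.front

    def scanout(self) -> list[int]:
        return list(self.front)

db = DoubleBuffer(4)
db.draw(7)
print(db.scanout())   # [0, 0, 0, 0]  not flipped yet, so nothing half-drawn is visible
db.flip()
print(db.scanout())   # [7, 7, 7, 7]
```

Nothing here says ''when'' to call `flip()`. Choosing that moment is what the vsync setting is about.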

How do we keep it in step? That varies, actually, due to some other practical details.

Roughly speaking,

: vsync on means "wait to flip the buffers until you are between frames"

: vsync off means "show new content as soon as possible, I don't care about tearing"

In the latter case, you will often see parts of two different images. They will now always be ''completely drawn'' images,

and they will usually resemble each other,

but on fast moving things you will just about see, for a split second, that there was a cut point.

If the timing of drawing and monitor refresh is unrelated, this will be at an unpredictable position.

This is arguably a feature, because if this happens regularly (and it would), it happens at different positions all the time,

which is less visible than if the positions are very near each other (then the cut would seem to slowly move).
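A sketch of where the cut lands, assuming vsync off, double buffering, and a perfectly steady render rate. That is a simplification: real render times jitter, which scatters the cut further. Blanking is ignored, and the function name is made up for illustration.

```python
import math
from fractions import Fraction

def tear_rows(render_fps: int, refresh_hz: int, lines: int, flips: int) -> list[int]:
    """Screen row at which each buffer flip lands. Blanking is ignored: the whole refresh
    period is mapped onto `lines` rows. Exact fractions avoid rounding surprises."""
    period = Fraction(1, refresh_hz)
    rows = []
    for k in range(1, flips + 1):
        t = Fraction(k, render_fps)            # time of the k-th flip, in seconds
        phase = (t % period) / period          # how far into the current refresh that is (0..1)
        rows.append(math.floor(phase * lines))
    return rows

print(tear_rows(75, 60, 1080, 5))   # [864, 648, 432, 216, 0]: a fifth of the screen per flip
print(tear_rows(61, 60, 1080, 5))   # [1062, 1044, 1026, 1009, 991]: creeps slowly upward
```

When the two rates are close, the cut moves only a few rows per flip. That is the slowly moving, and therefore more noticeable, tear line.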

Gamers may see vsync as a framerate cap, but that's mainly just because it would be entirely pointless to render more frames than you can show

(unless it's winter and you are using your GPU as a heater).

----

'''Does that mean it's updating pixels while you're watching, rather than all at once?'''

Yes.

Due to the framerate alone it's still fast enough that you wouldn't notice.

As that slow motion video linked above points out, you don't even notice that your phone screen is probably updating sideways.

Note that while with VGA the pixel update timing is fixed by the PC,

in the digital era we are a little less tied.

In theory, we could send the new image ''much'' faster than the speed at which the monitor can update itself,

but in practice, you don't really gain anything by doing so.

'''Does that mean it takes time from the first pixel change to the last pixel change within a frame? Like, over multiple microseconds?'''

Yes. Except it's over multiple ''milliseconds''.

Just how many milliseconds varies with how exactly the panel works, but AFAICT not by a lot.

I just used some phototransistors to measure that one of my (HDMI) monitors at 60Hz takes approximately 14ms to get from the top to the bottom.

----

There would be little point to keeping a copy of an entire screen, since we're reading from a framebuffer just as before.

It would in fact be simpler and more immediate to just tell the LCD controller where that framebuffer is, and what format it is in.

Note that while this is a different mechanism, it has almost no visible effect on tearing.

(On-screen displays seem like they draw on top of a framebuffer, particularly when they are transparent, but this can be done on the fly.)

https://www.nxp.com/docs/en/application-note/AN3606.pdf

'''Are flatscreen TVs any different?'''

Depends.

Frequently yes, because a lot are sold with some extra Fancy Processing&trade;, like interpolation in resolution and/or time,

which by the nature of these features ''must'' have some amount of framebuffer.

This means the TV could roughly be seen as a PC with a capture card: it keeps image data around, then does some processing before it

gets sent to the display (which happens to physically be in the same bit of plastic).

And that tends to add a few to a few dozen milliseconds.

Which doesn't matter to roughly ''anything'' in TV broadcast. There was a time at which different houses would cheer at different times for the same sports goal, but ''both'' were much further away from the actual event than from each other. It just doesn't matter.

So if a TV has a "gaming mode", that usually means "disable that processing".

'''Are there other ways of updating?'''

Yes. Phones have been both gearhead country and battery-motivated territory for a while,

so they have also started going 120Hz or whatnot.

At the same time, they may figure out that you're staring at static text,

so they may refresh the framebuffer and/or screen ''much'' less often.

'''Is OLED different?'''

No and yes.

And it seems it's not so much different ''because'' it's OLED specifically,

but because these new things coincided with a time of new design goals and new experiments{{verify}}.

https://www.youtube.com/watch?v=n2Qj40zuZQ4

-->

=====On pixel response time and blur=====

<!--

So, each pixel cannot change its state instantly. It takes on the order of a few milliseconds.

There are various metrics, like

* GtG (Gray To Gray)

* BwB (black-white-black)

* WbW (white-black-white)

None of these are standardized, vendors measure them differently, and you can assume they are as optimistic as possible on the specs page, so these are not very comparable.

This also implies that a given framerate will look slightly different on different monitors, because pixels having to change more than they can manage in time looks like blur.

: this matters more to high contrast (argument for BwB, WbW)

: but most of most images isn't high contrast (argument for GtG)

One way to work around this, on a technical level, is a higher framerate.

''Display'' framerate, that is{{verify}}.

On paper this works, but it is much less studied, and it is less clear to what degree this is just the current sales trick versus to what degree it actually looks better.

In fact, GtG seems by nature a sales trick. Yes, there's a point to it because the response is a curve, but at the same time it's a figure that is easily half of the other two, that you can slap on your box.

It's also mentioned because it's one possible factor in tracking.

-->

====Vsync====

<!--

What vsync adds

As the above notes, we push pixels to the framebuffer as fast as we can, and a monitor just shows what it gets (at what is almost always a fixed framerate, i.e. a fixed schedule).

''Roughly'' speaking, every ~16.7ms (at 60Hz) the video card signals it's sending a new frame, and sends whatever data is in its framebuffer at the moment it sends it.

And it has no inherent reason to care about whatever else is happening.

So if you changed that framebuffer while it was being sent out, then from the perspective of entire frames, it didn't send full, individual ones.

: It is possible that it sent part of the previous and part of a next frame.

: It is possible that it sent something that looks wrong because it wasn't fully done drawing (this further depends a little on ''how'' you are drawing)

It's the lowest-latency way to get things on screen, but can look incorrect.

There are two common ingredients to the solution:

* vsync in the sense of waiting until we are between frames

:: The above describes the situation without vsync, or with vsync off. This is the most immediate, "whenever you want" / "as fast as possible" way.

:: '''With vsync on''', the switch to a new image to send from is closely tied to when we tell/know the monitor is between frames.

:: It usually amounts to the software ''waiting'' until the graphics card is between frames. That doesn't necessarily solve all the issues, though. In theory you could do all your drawing in that in-between time, but it's not a lot of time.

* double buffering

:: means we have two framebuffers: one that is currently being sent to the screen, and one we are currently drawing to

:: and at some time we tell the GPU to switch between the two

Double buffering with vsync off means ''switch as soon as we're done drawing''.

It solves the incomplete-drawing problem, and is fast in the sense that it doesn't otherwise wait to get things on screen.

But we still allow that switch to happen while the monitor's pixels are being sent out, so it can switch images in the middle of a frame.

If those software flips are at a time unrelated to hardware, and/or images were very similar anyway (slow moving game, non-moving office document),

then this discontinuity is ''usually'' barely visible, because either the frames tend to look very similar,

or it happens at random positions on the screen, or both.

It is only when this split-of-sorts happens in a similar place each time (fast movement, a regular and related update schedule, which can come from rendering a lot faster than the monitor) that it is consistent enough to notice. We call it '''screen tearing''', because it looks something like a torn photo taped back together without care to align it. Screen tearing is mostly visible with video and with games, and mostly with horizontal motion (because most updates happen line by line).

It's still absolutely there in more boring PC use, but it's just less visible because what you are showing is a lot more static.

Also the direction matters: I put one of my monitors vertical, and there the effect is visible when I scroll webpages, because it works out as a broadly visible skew, more noticeable than taller/shorter letters that I'm not reading at that moment anyway.

So the most proper choice is vsync on ''and'' '''double buffering'''. With this combination:

* we have two framebuffers that we flip between

* we flip them when the video card is between frames

'''vsync is also rate limiting. And in an integer-division way.'''

Another benefit of vsync on is that we don't try to render faster than necessary.

60Hz screen? We will be sending out 60 images per second.

...well, ''up to'' 60. Because we wait for a new frame, that means a very regular schedule that we have no immediate control over.

At 60Hz, you need to finish a new frame within 16.67ms (1 second / 60).

Did you take 16.8ms? Then you missed it, and the previous frame will stay on screen for another 16.67ms.

For example, at 60Hz, a frame that is

: on time is shown 16.7ms after the start (60fps)

: 1 frame late has to come 33.3ms after the start instead, which you could say is momentarily 30fps (60/2)

: 2 frames late is 50ms later, for 20fps (60/3)

: 3 frames late is 66.7ms later, for 15fps (60/4)

: ...and so on, but we're already in "looks terrible" territory.
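A minimal sketch of that integer division. It assumes the simple case where every frame takes the same render time and the next frame is only started once the previous one was shown. The function name is hypothetical.

```python
import math

def vsync_display_fps(render_ms: float, refresh_hz: float) -> float:
    """Effective displayed rate with vsync on, if every frame takes `render_ms` to render and
    the next frame is only started after the previous one was shown."""
    interval_ms = 1000.0 / refresh_hz
    intervals = max(1, math.ceil(render_ms / interval_ms))   # refreshes each frame occupies
    return refresh_hz / intervals

for ms in (10.0, 16.0, 16.8, 25.0, 40.0, 60.0):
    print(f"{ms:5.1f} ms render, {vsync_display_fps(ms, 60):.0f} fps shown")
```

A render time of exactly 16.67ms sits right on the boundary, where floating-point rounding could push it either way, so the examples avoid it.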

So assuming things are a little late, do we get locked into 30fps instead?

Well, no. Or rather, that depends on whether you are CPU-bound or GPU-bound.

If the GPU is a little too slow to get each frame in on time, then chances are it's closer to 30fps.

If the GPU is fast enough but the CPU is only occasionally late, you tend to get a mix of 60fps and 30fps frames instead.

The difference exists in part because the GPU not having enough time is a ''relatively'' fixed problem,

because

it mostly just has one job (of relatively slowly varying complexity),

and drawing the next frame is often timed from the last,

while

the CPU being late is often more variable,

but even if it weren't and it's on its own rate,

And even that varies with the way the API works.

: OpenGL and D3D11: the GPU won't start rendering until vsync releases a buffer to render ''into'' {{verify}}

: Vulkan and D3D12: such backpressure has to be done explicitly and differently {{verify}}

(Side note: a game's FPS counter indicating only the interval of the last frame would update too quickly to read, so FPS counters tend to show a recent average.)

vsync means updates only happen at multiples of that basic time interval,

meaning immediate framerates that are integer divisions of the native rate.

To games, vsync is sort of bad, in that if you ''structurally'' take more than 16.7ms while aiming for 60Hz,

you will be running at a solid 30fps, not something in between 30 and 60.

When you ''could'' be rendering at 50fps and showing most of that information,

there are games where that immediacy is worth it regardless of the tearing.

For gamers, vsync is only worth it if you can keep the render solidly at the monitor rate.

Notes:

: vsync is not the same mechanism as double buffering, but it is certainly related

'''Related things'''

Pointing a camera at a screen gives the same issue, because that camera will often be running at a different rate.

Actually, the closer the two rates are, the slower the tearing will seem to move, which is actually more visible.

This was more visible on CRTs, because the falloff of the phosphor brightness (a bunch of it within 1-2ms) is more visible the shorter the camera's exposure time is. There are new reasons that make this visible in other situations, like [[rolling shutter]].

Screen capture, relevant in these game-streaming days, may be affected by vsync in the same way.

It's basically a ''third'' party looking at the same frame data, so another thing to ''independently'' have such tearing and/or slowdown issues.

====Vsync====

Intuitively, vsync tells the GPU to wait until refresh.

This is slightly imprecise, in a way that matters later.

What it's really doing is telling the GPU to hold off flipping buffers until the next refresh.

In theory, we could still be rendering several times more frames,

and only putting out some of them to the monitor.

But there is typically no good reason to do so,

so enabling vsync usually comes with reducing the render rate to the refresh rate.

Similarly, disabling vsync does not ''necessarily'' uncap your framerate, because developers may

have other reasons to throttle frames.

'''Vsync when the frames come too fast''' is a fairly easy decision,

because we only tell the GPU to go no faster than the screen's refresh rate.

The idea being that there's no point in producing things to draw faster than we can show them.

It just means more heat drawing things we mostly don't see.

In this case, with vsync off you may see things ''slightly'' earlier,

but it can be somewhat visually distracting, and the difference in timing may not be enough to matter.

Unless you're doing competition, you may value aesthetics more than reaction.

'''Vsync when the frames come in slower than the monitor refresh rate''' is another matter.

Vsync still means the same thing: "GPU, wait to display an image until monitor refresh time".

But note that slower-than-refresh rendering means we are typically too late for a refresh,

so this will make it wait even longer.

Say we have a 60Hz screen (16.7ms interval) and 50fps rendering (20ms interval):

* if you start a new frame render after the previous one got displayed, you will miss every second refresh, and be displaying at 30fps.

* if you render independently, then we will only occasionally be late, and leave a frame on screen for two intervals rather than one.

You can work this out on paper, but it comes down to each frame being shown for a whole number of refresh intervals, so the immediate framerate is always an integer divisor of the refresh rate.
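The "render independently" case as a sketch. It assumes the renderer never has to wait for the display (e.g. enough buffers), and that each finished frame is shown at the first refresh at or after it completes, with a frame finishing exactly on a refresh counting as on time. The function name is hypothetical.

```python
import math
from fractions import Fraction

def hold_counts(render_fps: int, refresh_hz: int, frames: int) -> list[int]:
    """How many refresh intervals each frame stays on screen, for a renderer that finishes
    frame k at exactly k / render_fps seconds. Exact fractions avoid rounding issues."""
    # refresh index at which each finished frame is first shown
    shown_at = [math.ceil(Fraction(k, render_fps) * refresh_hz) for k in range(1, frames + 2)]
    return [b - a for a, b in zip(shown_at, shown_at[1:])]

print(hold_counts(50, 60, 10))   # [1, 1, 1, 1, 2, 1, 1, 1, 1, 2]
```

The average is still 50 frames per second, but every fifth frame stays up twice as long. That unevenness is the stutter people notice.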

So if rendering dips a little below 60fps, it's now displaying at 30fps (and usually only that. Yes, the next divisors are 20, 15, 12, 10, but below 30fps people will whine ''regardless'' of vsync. Also, the differences are smaller there). This is one decent reason gamers dislike vsync, and one decent reason to aim for 60fps and more: even if you don't get it, your life will be predictably okay.

And depending on how much lower it is, the sluggishness may be more visible than a little screen tearing.

This also seems like an unnecessary slowdown.

Say a game is aiming at 60fps and usually ''almost'' managing. You might prefer vsync off.

Say

TODO: read [https://hardforum.com/threads/how-vsync-works-and-why-people-loathe-it.928593/]

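'''Adaptive VSync'''

If the fps is higher than the refresh rate, VSync is enabled;

if the fps is lower than the monitor refresh rate, it's disabled.

This avoids the drop to an integer division of the refresh rate when rendering falls a little short, at the cost of tearing in exactly that case.

'''NVidia Fast Sync''' / '''AMD Enhanced Sync'''

Roughly Adaptive VSync with triple buffering added: when rendering faster than the refresh rate, the GPU keeps rendering into extra buffers, and at each refresh the most recently completed frame is picked, so there is no tearing and the renderer doesn't have to wait.

Useful when you have more than enough GPU power.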
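A simplified model of "render freely, show the newest completed frame at each refresh". It is not either vendor's actual implementation, and the function name is made up for illustration.

```python
import math
from fractions import Fraction

def frames_shown(render_fps: int, refresh_hz: int, refreshes: int) -> list[int]:
    """At each refresh, the number of the newest fully rendered frame, where frame k finishes
    at k / render_fps seconds (0 means nothing finished yet). Frames that finish between two
    refreshes but are superseded before the next one are simply never shown."""
    shown = []
    for r in range(1, refreshes + 1):
        t = Fraction(r, refresh_hz)                  # time of the r-th refresh
        shown.append(math.floor(t * render_fps))     # frames completed by then
    return shown

print(frames_shown(200, 60, 6))   # [3, 6, 10, 13, 16, 20]: the frames in between are dropped
```

The screen still changes at most 60 times a second. What you gain is that the image shown is at most one render interval old, instead of waiting in a queue.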

Note that with vsync on, whenever you are late for a frame at the monitor's framerate, you really just leave the previous image on for an extra interval. Or two.

This is why it divides the framerate by whole numbers. If on a 60Hz monitor an image stays on for one refresh it's 60fps, for two it's 30fps, for three it's 20fps, for four it's 15fps, etc.

When with vsync on that FPS counter says something like 41fps, it really means "the average over the last bunch of frames was a mix of frames shown for 16.7ms (60fps) and frames shown for 33.3ms (30fps)" (and maybe occasionally longer).
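Working backwards from such an average, as a sketch. It assumes every frame was held for either one or two refresh intervals and nothing longer. The function name is hypothetical.

```python
def mix_for_average(avg_fps: float, refresh_hz: float = 60.0) -> float:
    """Fraction of frames held for two refresh intervals (rather than one), given the average
    fps shown, assuming every frame was held for exactly one or two intervals."""
    # average interval = (1 + p) / refresh_hz, so avg_fps = refresh_hz / (1 + p)
    return refresh_hz / avg_fps - 1.0

print(f"{mix_for_average(41):.0%} of frames were held for two intervals")
```

So a 41fps reading on a 60Hz screen means roughly half the frames were late by one interval.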

'''Could it get any more complex?'''

Oh, absolutely!

'''I didn-'''

...you see, the GPU is great at independently drawing complex things from relatively simple descriptions, but something still needs to tell it to do that.

And a game will have a bunch of, well, game logic, and often physics that needs to be calculated before we're down to purely ''visual'' calculations.

Point is that with that involved, you can now also be CPU-limited. Maybe the GPU can ''draw'' what it gets at 200fps, but the CPU can only figure out new things to draw at around 40fps. With vsync on, that means a mix of 60 and 30fps, as just mentioned. And your GPU won't get very warm, because it's idling most of the time.

Games try to avoid this, but [some people take that as a challenge].

-->

====Adaptive sync==== | |||

<!-- | |||

{{stub}} | |||

For context, most monitors keep a regular schedule (and set it themselves{{verify}}). | |||

Nvidia G-sync and AMD Freesync treats frame length them as varying. | |||

Basically, the GPU sending a new frame will make the monitor start updating it as it comes in, | |||

rather than on the next planned interval. | |||

Manufacturer marketing as well as reviewers seem very willing to imply | |||

: vague "increases input lag dramatically" and the way they say it is often just ''wrong'', or | |||

: "do you want to solve tearing ''without'' the framerate cap that vsync implies" and it's a pity that's pointing out the ''wrong'' issue about vsync, | |||

: "G-Sync has better quality than FreeSync", whatever that means | |||

A more honest way of putting it might be | |||

"when frames come in slower than the monitor's maximum, | |||

we go to the actual render rate, without tearing" | |||

...rather than the integer-division ''below'' the actual render rate (if you have vsync on) | |||

or sort of the actual render rate but with tearing (with vsync on). | |||

There's absolutely value to that, but dramatic? Not very. | |||

Note that doing this requires both a GPU and monitor that support this. | |||

Which so far, for monitors, is a gamer niche. | |||

And the two mentioned buzzwords are not compatible with each other. | |||

For some reason, most articles don't get much further than | |||

"yeah G-sync is fancier and more expensive and freesync is cheaper and good enough (unless you fall under 30fps which you want to avoid anyway)" | |||

...and tend to skip ''why'' (possibly related that the explanation behind stuttering is often a confused ''mess''). | |||

Note that while it can show frames as soon as your GPU has them, it doesn't go beyond a maximum rate.

The PC can instruct the monitor to start a new frame whenever it wants. The monitor's refresh rate is therefore effectively its ''maximum'', and it will frequently go slower.

In particular, this avoids the 'unnecessary further drop when frames come in slower than the monitor refresh rate'.

FreeSync uses DisplayPort's existing Adaptive-Sync (DP 1.2a?) instead of something proprietary like G-Sync.

It also works over HDMI where that supports VRR, which was officially added in HDMI 2.1 (2017), though there were supporting devices before then.

Any monitor maker can therefore choose to support it without extra proprietary hardware. As a result, FreeSync monitors tend to be cheaper than G-Sync ones, but there is also more variation in what a given FreeSync implementation actually gives you.

For example, these monitors may have a relatively narrow range in which they are adaptive.

: Below that minimum, ''both'' will have trouble (though apparently it is more visible with FreeSync?)

G-Sync apparently won't go below 30Hz, which seems to mean the typical vsync-style integer reduction below that.
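The difference between "integer division below the actual render rate" and "just the render rate" can be sketched as two small functions. This is a simplified model with stated assumptions. The first function models classic double-buffered vsync with a steady render rate, where each frame occupies a whole number of refreshes. The second models an adaptive-sync display that follows the render rate inside a hypothetical min_hz..max_hz range. Behaviour below that range differs between implementations, so the sketch returns None there rather than guessing.

```python
import math
from typing import Optional


def vsync_rate(render_fps: float, refresh_hz: float) -> float:
    """Displayed rate with classic double-buffered vsync and a steady render rate.

    Each frame has to wait for a refresh, so it is held for a whole number of
    refreshes, and the displayed rate drops to refresh_hz / 1, / 2, / 3, ...
    """
    refreshes_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / refreshes_per_frame


def adaptive_rate(render_fps: float, min_hz: float, max_hz: float) -> Optional[float]:
    """Displayed rate on a variable-refresh display (toy model)."""
    if render_fps >= max_hz:
        return max_hz  # the advertised refresh rate is now the maximum
    if render_fps >= min_hz:
        return render_fps  # the display simply follows the render rate
    return None  # below the adaptive range: implementation-specific, not modelled


if __name__ == "__main__":
    # A 60Hz display, with a hypothetical 30-60Hz adaptive range
    for fps in (75, 59, 45, 25):
        print(fps, vsync_rate(fps, 60), adaptive_rate(fps, 30, 60))
```

For example, a steady 59fps on a 60Hz display comes out as 30fps with vsync, while an adaptive display within its range would simply show 59.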

-->

===On perceiving===

====The framerate of our eyes====

<!--

This is actually one of the hardest things to start with, because biology makes this rather interesting.

It is hard to quantify, or to summarize at all - there's a ''lot'' of research out there.

The human flicker fusion threshold, often quoted as 'around 40Hz', actually varies with a bunch of things - the average brightness, the amount of difference in brightness, the place on your retina, fatigue, the color/frequency, the chemistry of your retina, and more.

It varies even with what you're trying to see, because it also differs between rods and cones. For rods (low-light sensitivity, more numerous but lower resolution), it seems to ''start'' dropping above ~15Hz (~65ms). For cones (color sensitivity, higher resolution), it drops above ~60Hz (~17ms). Peripheral vision also tends to react to flicker faster, but less precisely.

Also, while each cone might be limited to the order of 75fps{{verify}} (which seems to be an overall biochemical limit), we have an absolute ''ton'' of them. Together they amount to more, though it's hard to say how much exactly.

Take all of those together, and you can estimate it with a curve that drops off gradually, at a point and rate that vary with context.

For example, when staring at a monitor

: it is primarily the center of vision that applies (slower than peripheral)

: it's fairly bright, so you're on the faster end.

Can you perceive things that are shorter in time?

When you flash a momentary image at a person, they can tell you something about that image once it's longer than 15ms or so[citation needed]. Not everyone sees that, but some do. In itself, that seems to argue that going over ~70fps (one frame per ~15ms is ~67fps) has little to no added benefit for parsing frame-unique information.

In theory you can go much further, though not necessarily in ways that matter.

Like camera sensors, our eyes effectively collect energy. Unlike cameras, we do not have shutters, so all energy gets counted, no matter when it came in.

As such, an extremely bright nanosecond flash, a moderate 1ms flash, and a dim 30ms flash are perceived as more or less the same.[citation needed]

While interesting, this doesn't tell us much. You ''can't'' take this and say our eyes are 1Gfps or 1000fps or 30fps.

The only thing it really suggests is that the integration time is fairly slow - variable, actually, which is why light level is a factor.
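The "bright short flash versus dim longer flash" equivalence is usually described as Bloch's law. Below some critical duration, what the eye registers depends roughly on intensity × duration. The sketch below assumes a critical duration of 100ms, which is a round number chosen for illustration; in reality it varies with conditions, light level among them. The intensity units are arbitrary.

```python
def matching_intensity(ref_intensity: float, ref_ms: float, flash_ms: float,
                       critical_ms: float = 100.0) -> float:
    """Intensity a flash of flash_ms needs to look like a reference flash.

    Bloch's law (temporal summation): for flashes shorter than the critical
    duration, intensity * duration is what counts, so this solves
    flash_intensity * flash_ms == ref_intensity * ref_ms.
    critical_ms is an assumed value, not a measured constant.
    """
    if max(ref_ms, flash_ms) > critical_ms:
        raise ValueError("Bloch's law only approximates flashes shorter than the critical duration")
    return ref_intensity * ref_ms / flash_ms


if __name__ == "__main__":
    # A dim 30ms flash (intensity 1, arbitrary units) as the reference
    for ms in (30.0, 1.0, 1e-6):  # 30ms, 1ms, 1ns
        print(f"{ms}ms flash needs intensity {matching_intensity(1.0, 30.0, ms):g}")
```

So in this model the 1ms flash needs 30 times the intensity of the 30ms one, and a 1ns flash 30 million times.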

So generally, it seems that returns diminish quickly between 30 and 60fps. It's not that we can't perceive higher rates. It's that everything you need to produce them is worth it less and less.
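One way to see the diminishing returns is to look at frame time rather than frame rate. Each doubling of the frame rate saves only half as many milliseconds per frame as the previous doubling did. The helper names below are just for illustration.

```python
def frame_time_ms(fps: float) -> float:
    """Duration of one frame in milliseconds."""
    return 1000.0 / fps


def ms_saved(from_fps: float, to_fps: float) -> float:
    """How much shorter each frame gets when going from from_fps to to_fps."""
    return frame_time_ms(from_fps) - frame_time_ms(to_fps)


if __name__ == "__main__":
    for lo, hi in ((30, 60), (60, 120), (120, 240)):
        print(f"{lo} to {hi}fps: {frame_time_ms(lo):.1f}ms becomes {frame_time_ms(hi):.1f}ms, "
              f"saving {ms_saved(lo, hi):.1f}ms per frame")
    # Equal steps of +10fps also shrink quickly: 20 to 30 saves ~16.7ms, 60 to 70 only ~2.4ms
    for lo in (20, 30, 40, 50, 60):
        print(f"{lo} to {lo + 10}fps saves {ms_saved(lo, lo + 10):.1f}ms per frame")
```

Going from 30 to 60fps saves about 16.7ms per frame, 60 to 120 about 8.3ms, and 120 to 240 about 4.2ms.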

'''Are you saying 30fps is enough for everything?'''

It's enough information for most everyday tasks, and certainly for dealing with slower motion, yes.

It's not hard to construct an example that looks smoother at 60fps, though, particularly with fast-moving, high-contrast things, which certainly includes some game scenes.

So aiming for 60fps can't hurt.

It may not always let you play much better, but it still feels a bit smoother.

'''Smoothness'''

When focusing on individual details, things look recognisably smoother when stepping up to 20, 30, 40fps. Steps up to 50, 60, 70fps are subtler, but still perceivable.

Movies are often 24fps (historically as low as half that), and many new movies ''still are'', even though sports broadcasts and home video had been doing 50/60fps for a long time (albeit interlaced).

In fact, for a while moviegoers preferred the calmness of the sluggish 24fps.

One theory is that we came to associate 50/60fps with cheapness, roughly because of early home video. Early home video was also often jerkier, because motion stabilization wasn't a thing in it yet.

'''Reaction time'''

If you include your brain actually parsing what you see, the total visual reaction time for comprehending something new is ~250ms, down to ~200ms at the absolute best of times.

{{comment|(And that's not even counting a decision or moving your hands to click that mouse)}}

If, on the other hand, you are expecting something, the main limit is your retina's signalling.

And if, say, someone is moving in a straight line, you can even estimate ahead of time when they will reach a spot, rather than wait for it to happen.
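To put frame time next to that ~250ms, consider a simple worst case: something new happens just after a frame was shown, so you only see it one frame later. The sketch below computes what fraction of a 250ms reaction that one-frame wait represents. Treating "one frame of extra delay" as the worst case is a simplification that ignores rendering and display latency.

```python
REACTION_MS = 250.0  # typical visual reaction time quoted above


def worst_case_frame_wait_ms(fps: float) -> float:
    """Longest extra wait for a new frame in this simple model: one frame period."""
    return 1000.0 / fps


if __name__ == "__main__":
    for fps in (30, 60, 144):
        wait = worst_case_frame_wait_ms(fps)
        share = 100 * wait / REACTION_MS
        print(f"{fps}fps: up to {wait:.1f}ms, {share:.1f}% of a {REACTION_MS:.0f}ms reaction")
```

In this model the wait is up to ~33ms (~13%) at 30fps, ~17ms (~7%) at 60fps, and under 7ms (under 3%) at 144fps.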

'''Tracking'''

Vision research does tell us that for more complex tasks, even just tracking a single object, the usefulness of extra frames starts dropping above 40fps.

Also, the more complex a scene is, the less of it you will be able to parse, and we effectively start filling in assumptions instead.

And even for simple things, we mostly just assume that they're placed in the intermediate positions (which, as assumptions go, goes a long way). Still, the jumping between positions is noticeable up to a point. For example, something moving at 20cm/s of screen space jumps ~0.8cm each frame at 24fps, and ~0.3cm at 60fps.

Motion blur helps guide us and makes that jump much less noticeable, so that 24fps with blur can look like twice that. It's probably more useful at lower framerates than at higher ones, though.
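The per-frame jump is just speed divided by frame rate. To give a feel for how motion blur fills the gap, the sketch below also assumes a camera shutter that is open for a fraction of each frame. The 0.5 default corresponds to the common 180-degree film shutter, but that parameter is an assumption added here, not something from the text above.

```python
def jump_per_frame_cm(speed_cm_s: float, fps: float) -> float:
    """Distance an object moves between consecutive frames."""
    return speed_cm_s / fps


def blur_length_cm(speed_cm_s: float, fps: float, shutter_fraction: float = 0.5) -> float:
    """Length of the motion-blur smear, if the shutter is open for
    shutter_fraction of each frame (0.5 is roughly a 180-degree shutter)."""
    return jump_per_frame_cm(speed_cm_s, fps) * shutter_fraction


if __name__ == "__main__":
    speed = 20.0  # cm/s of screen space, as in the example above
    for fps in (24, 60):
        print(f"{fps}fps: jumps {jump_per_frame_cm(speed, fps):.2f}cm per frame, "
              f"blur covers {blur_length_cm(speed, fps):.2f}cm of that")
```

At 24fps that is ~0.83cm per frame, with a 180-degree shutter smearing about half of that gap.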

'''What is your tracking task, really?'''

That 20cm/s example is not what many games show.

As a further indication of the limits of "noticing everything": when speed-reading, you move your eyes more often to see more words. Above ten times (or so) per second, however, you can no longer process a fairly full eyeful of information fast enough.

But FPS gaming is usually about either

: noticing a whole new scene (e.g. after a fast, twitchy 180), or

: tracking a slow-moving object for a precisely timed shot, which is roughly the opposite of looking at everything.

The first is largely limited by your brain - you don't really interpret a whole new scene within the ~17ms of a single 60fps frame.

From another angle, we seem to need to see an image for at least ~15ms or so before we can parse what is in it.

While that's for an isolated scene flashed at you (and in games you are usually staring at something smaller), it's still a decent indicator that more than 65fps or so probably doesn't help much.

In a scene that is largely still, or that you are tracking overall, you could likely focus on the important thing, and push the limits of what extra information extra frames might give.

You can make arguments for not only 60fps but a few multiples higher - but only for things so specifically engineered to maximize that figure that it really doesn't apply to games.

You don't really use the extra frames to track (you don't need them to track), but motion looks more pleasing when it seems to be a little more regular.

Notes:

* It seems that around eye [https://en.wikipedia.org/wiki/Saccade saccades] you can perceive ''irregularity'' in flicker a few multiples faster than you would otherwise

-->

===arguments for 60fps / 60Hz in gaming===

<!--

tl;dr:

* a smooth 60fps looks a ''little'' smoother than, say, 30fps

:: and if you can guarantee it, that means "looks good, no worries about it", which is worth some money

* yet a ''stable'' framerate may be more important than the exact figure

* ...particularly with vsync on - a topic still misunderstood

: and why people like me actually complain a little too much

* ...so if you get a stable 60+fps it will feel a little smoother

:: But there is a small bucket of footnotes to that.

: A solid 60 is going to look better than a solid 30

: yet a solid 30 is less annoying than an unpredictable 60.

:: more so with vsync on

::: because that's actually a mix of 30 and 60

::: unless it's always enough and it's actually a solid 30

* the difference in how it plays is tiny for almost all cases

:: but if it's your career, you care.

:: and if it truly affects comfort, you care
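One rough way to put a number on "stable" is to look at the spread of on-screen frame durations rather than their average. The sketch uses a simplified setup: a 60Hz display with vsync, so every frame lasts a whole number of ~16.7ms refreshes. The hold patterns are made-up inputs.

```python
from statistics import mean, pstdev

REFRESH_MS = 1000.0 / 60  # one refresh on a 60Hz display


def pacing_summary(holds: list[int]) -> tuple[float, float]:
    """Average fps and frame-time standard deviation (ms) for a pattern of
    how many refreshes each frame is held on screen."""
    durations_ms = [n * REFRESH_MS for n in holds]
    return 1000.0 / mean(durations_ms), pstdev(durations_ms)


if __name__ == "__main__":
    solid_30 = [2] * 12  # every frame held for two refreshes
    mixed = [1, 2] * 6  # alternating one and two refreshes, averaging 40fps
    for name, pattern in (("solid 30", solid_30), ("60/30 mix", mixed)):
        fps, jitter = pacing_summary(pattern)
        print(f"{name}: {fps:.1f}fps average, frame times vary by {jitter:.1f}ms")
```

The mix has the higher average (40 versus 30fps). However, its frame times swing by about 8.3ms around the mean, while the solid 30 has no variation at all.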

There's a bunch of conflation that actually hinders clear conclusions here. Let's try to minimize that.

To get some things out of the way:

'''60fps and 60Hz are two different things entirely.'''

In the CRT days as well as in the flatscreen days, fps and Hz are not quite tied.

In the CRT days there was an extra reason you might care separately about Hz - see [[#Reproduction that flashes]] above.

'''Why do 24fps movies look okay, and 24fps games look terrible?'''

A number of different things help 24fps movies look okay.

These include, but are not limited to:

: a fully fixed framerate

: optical expectations

: expectation in general

: typical amount of movement - which is ''lower'' in most movies

: the typical amount of visual information

The last two are guided by cinematic language - it suits most storytelling to not have too much happening most of the time, and to have ''mostly'' slow movement.

It helps us parse most of what's happening, which is often the point, at least in most movies.

Sure, this is partly because movies have adapted to this restriction {{comment|(and an old one at that - 24fps was settled on in the late 1920s or so, roughly as an average of the varying speeds of very early film, which had made it harder to show film)}}, but it is partly true regardless.

{{comment|(Frame rate was also once directly proportional to cost - higher speeds meant proportionally more money spent on film stock. In the digital era this is much less relevant.)}}

In particular, a moderately fast pan doesn't look great at 24fps, so it's often either a slow cinematic one, or a fast blur meant to signify a scene change. {{comment|(Side note: Americans watching movies on analog TV had it worse - the way 24fps was turned into the fixed ~30fps of TV (see [[telecining]]) makes pans look more stuttery than in the cinema.)}}
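For NTSC-style TV, the telecine step is usually done with 3:2 pulldown. Film frames are alternately spread over three and two interlaced TV fields, so 24 film frames fill 60 fields (30 TV frames) per second. The sketch below shows that cadence. The function names are hypothetical, and real NTSC runs at 59.94 fields per second rather than exactly 60.

```python
def pulldown_fields(film_frames: list[str], cadence: tuple[int, ...] = (3, 2)) -> list[str]:
    """Repeat film frames as TV fields following the pulldown cadence."""
    fields: list[str] = []
    for i, frame in enumerate(film_frames):
        fields.extend([frame] * cadence[i % len(cadence)])
    return fields


def tv_frames(fields: list[str]) -> list[tuple[str, str]]:
    """Pair consecutive fields into interlaced TV frames (an odd trailing field is dropped)."""
    return [(fields[i], fields[i + 1]) for i in range(0, len(fields) - 1, 2)]


if __name__ == "__main__":
    fields = pulldown_fields(list("ABCD"))
    print("fields:   ", "".join(fields))
    print("TV frames:", tv_frames(fields))
    # Each film frame is on screen for 3 or 2 fields: ~50ms, then ~33ms, and so on
    print("ms per film frame:", [round(n * 1000 / 60, 1) for n in (3, 2, 3, 2)])
```

Two of every five TV frames mix fields from two different film frames, and film frames alternate between ~50ms and ~33ms on screen. That alternation is why a steady pan picks up a judder it didn't have in the cinema.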

And no, movies at 24fps don't always look great - a 24fps action scene does ''not'' look quite as good as a higher-framerate one.