Data logging and graphing


RRDtool notes

Introduction

RRDtool mostly consists of

- tools that store values into a round robin database

- tools to plot that data in images

A 'round robin database' means

- a fixed number of samples is stored

- all the space needed is allocated up-front and never needs to change

- it keeps a pointer to the most recent value, and

- overwrites the oldest value.

It's really just a single array where only the contents change.
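To make the 'single array plus pointer' idea concrete, here is a minimal sketch in Python. It is not how rrdtool lays out its files; the class and method names are made up for illustration.

```python
class RoundRobin:
    """Fixed-size store: allocated once, newest value overwrites the oldest."""

    def __init__(self, size):
        self.slots = [None] * size   # all space allocated up-front
        self.newest = -1             # index of the most recent value

    def add(self, value):
        # advance the pointer, wrapping around, and overwrite what was there
        self.newest = (self.newest + 1) % len(self.slots)
        self.slots[self.newest] = value

    def history(self):
        """Values from oldest to newest, skipping slots never written."""
        size = len(self.slots)
        ordered = [self.slots[(self.newest + 1 + i) % size] for i in range(size)]
        return [v for v in ordered if v is not None]


rr = RoundRobin(4)
for sample in [10, 11, 12, 13, 14, 15]:
    rr.add(sample)
print(rr.history())   # the two oldest samples (10, 11) have been overwritten
```

The list never grows: after six additions to four slots, only the last four values remain.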

It's useful to keep a limited-length history of values (often device health and resource use, like CPU use, free space, network load, number of TCP connections, etc.) in an amount of space that is both compact and never needs to grow.

RRDtool also stores data for strictly regular time intervals, meaning it doesn't need to timestamp each value, saving a little more space.

This makes it great for debugging, particularly on space-limited embedded platforms.

There are packages that build on it, like munin in general, and ganglia on clusters/grids.

Gauges, rates, counts; on summarizing discrete events

More technically

- GAUGE

- For values that can be graphed as they are: instantaneous values, not rates, and probably slow-changing.

- Graphing does not at all involve the concept of rate.

- If you basically want COUNTER, but want resets of your source values to be stored as UNKNOWN rather than possibly being mistaken for counter wraps (and so for very large values), then you may prefer DERIVE with min=0

- COUNTER

- for counters that never decrease, except at wraparound, at which time the difference is calculated assuming a typical wraparound (checking whether it looks like 32-bit or 64-bit integer overflow)

- you enter the raw counter reading; rrdtool takes the difference with the previous reading

- max (and sometimes min) is useful to avoid insertion of values you know are impossible

- stored value: per-second rate

- DERIVE

- Basically COUNTER without the only-positive restriction and without the overflow checks

- min and max are useful to avoid insertion of values you know are impossible

- ABSOLUTE

- like COUNTER, but with the implication that every time you hand a value to rrdtool, the counter you read from is reset.

- Useful to avoid counter overflows, and the trouble of figuring out the right value at wraparounds.
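The four types above can be summarized in a small Python sketch. This is a simplified model, not rrdtool's actual code: it ignores min/max, heartbeat and step alignment, and the function name is made up.

```python
def to_rate(ds_type, prev, cur, seconds):
    """Turn two consecutive readings into the value that would get stored.

    Simplified model: min/max and heartbeat checks are left out.
    """
    if ds_type == 'GAUGE':
        return cur                       # stored as-is, no rate involved
    if ds_type == 'ABSOLUTE':
        return cur / seconds             # source was reset on read, so cur is the delta
    delta = cur - prev
    if ds_type == 'COUNTER' and delta < 0:
        delta += 2**32                   # assume a 32-bit counter wrapped
        if delta < 0:
            delta += 2**64 - 2**32       # still negative: assume a 64-bit wrap instead
    return delta / seconds               # COUNTER and DERIVE: per-second rate


# a 32-bit counter that wrapped between two readings, 60 seconds apart
print(to_rate('COUNTER', 2**32 - 296, 704, 60))
# the same readings as DERIVE give a large negative rate, which min=0 would reject
print(to_rate('DERIVE', 2**32 - 296, 704, 60))
```

This also shows why a counter reset (e.g. a reboot) under COUNTER looks like a wrap and produces a huge bogus rate, while DERIVE with min=0 turns it into an unknown.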

More practically

Use GAUGE for values that are instantaneous values

Ideal collection interval depends a bit on how fast things can change.

Say, room temperature changes slowly, and measuring every minute is fine.

CPU temperature changes quickly, and measuring every minute can completely miss transient high temperature

- ...assuming you cared to find them

Things like disk space or the number of open connections typically change slowly, but might sometimes change quickly.

Unless you can hook into the filesystem/kernel to see every individual change, the best you can do to catch fast changes is poll a bunch faster.

When you fetch data from an incremental counter, use COUNTER/DERIVE/ABSOLUTE

Examples:

- bytes moved by disk

- bytes transferred by network

- (often available as both total and a recent per-second rate)

- blink-per-kWh counter

- liter-of-water tick counter

- webserver visits (depending on how you count/extract that count)

Discrete events and the rate you care to sample them at

Things that count quickly work more or less how you would think.

There is little point to sampling faster than your typical event interval.

- And, for a lot of lazy monitoring, you'll be fine with an order of magnitude slower

- that is: if we sample faster than events typically happen, we may have only pretense of time resolution, which makes rate-per-interval graphs become less meaningful

Consolidation interval still matters in how much you want to pinpoint peaks.

E.g. with network transfer, a 1-minute interval means you cannot tell the difference between [100MB/s for 1sec + 59 seconds of nothing] and [a precise sustained ~1.7MB/s for 1 minute]. And if you just care about daily plots, you won't care.
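The arithmetic behind that, as a quick Python check (the two traffic patterns are made-up illustration data):

```python
burst = [100.0] + [0.0] * 59        # 100MB in the first second, then nothing
steady = [100.0 / 60] * 60          # the same total, spread evenly over the minute

for name, per_second in (('burst', burst), ('steady', steady)):
    average = sum(per_second) / len(per_second)
    print('%-6s  1-minute average: %.2f MB/s   peak: %.2f MB/s'
          % (name, average, max(per_second)))
```

Both lines show the same 1-minute average; only the peak, which a 1-minute consolidation does not keep, tells them apart.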

Yet the slower the counts arrive, the more awkward it gets.

For example, I once put a sensor on a water meter. One of those 'dial goes round' things, and I just detected it passing, meaning it only really counted whole liters.

But water isn't used steadily, water is often drawn in small bursts.

A liter-of-water tick covers all use since the previous tick, which may have been long ago if you typically draw tiny amounts.

When you fill a bucket, you'll get a series of ticks, which is fine. (You can even start considering that taps, showers, and such are often limited to ~3 liters/minute)

But consider that you might have used 0.9 liters this morning to make a dozen espressos, and now get a tick for a small splash on your face. According to the report, you used a liter at splash time.

You can smooth the plot over a longer time, or consolidate over a longer time, to basically the same effect.

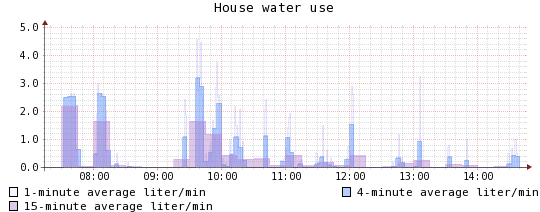

This plot shows exactly the same data, smeared out in three ways:

The "within 1 minute" series roughly shows the ticks themselves, and you can see how they blur into the last five or fifteen minutes. It's not pure nonsense, and zoomed out it even helps you grasp rough use, but the more you zoom in, the more misleading this is.
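One possible way to do that kind of smearing, as a Python sketch. This is not necessarily how the plot above was made; the tick times and the function name are hypothetical.

```python
def smear(tick_minutes, window, total_minutes):
    """Spread each 1-liter tick evenly over the `window` minutes leading up to it.

    Returns liters-per-minute for each minute from 0 to total_minutes-1.
    """
    flow = [0.0] * total_minutes
    for tick in tick_minutes:
        start = max(0, tick - window + 1)
        share = 1.0 / (tick - start + 1)
        for minute in range(start, tick + 1):
            flow[minute] += share
    return flow


ticks = [30, 31, 32, 70]   # hypothetical: a bucket being filled, then a lone tick much later
for window in (1, 5, 15):
    flow = smear(ticks, window, 80)
    print('within %2d min: peak %.2f l/min, total %.1f l' % (window, max(flow), sum(flow)))
```

The total stays the same whatever the window; only the apparent peak flow and the apparent timing change, which is exactly the part that gets misleading when zoomed in.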

Notes on RRD, DS, RRA

An RRD (round robin database) refers to a .rrd file.

A DS (data source) is the named thing that you send values into (using rrdtool update)

An RRD can contain more than one DS.

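An RRA is basically a consolidation log with given settings, and one DS can have multiple RRAs. This is typically used to keep recent data at fine resolution and older data at coarser resolution, or to keep e.g. both an average and a maximum.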

For example, you could do:

DS:cpuload:GAUGE:90:0:100 \
RRA:AVERAGE:0.5:1:1440 \
RRA:AVERAGE:0.5:7:1440 \
RRA:AVERAGE:0.5:30:1440 \
RRA:AVERAGE:0.5:360:1440

The --step value, combined with each RRA's amount-of-samples-to-consolidate value determines how many recent pre-consolidation samples are also kept in the .rrd file. (verify)

In simpler cases you can have one RRA per DS. Usually there are multiple DSes per RRD (different time series, e.g. disk space for each of your disks, the various types of CPU use side by side).
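To see what the four RRAs in the cpuload example amount to, here is a small Python sketch that works out the resolution and time span of each. The 60-second step is an assumption (the example does not state one), and the function name is made up.

```python
STEP = 60   # assumption: --step 60; the example above does not say


def rra_coverage(rra, step=STEP):
    """For an 'RRA:CF:xff:steps:rows' string, return (seconds per row, seconds covered)."""
    _, cf, xff, steps, rows = rra.split(':')
    per_row = int(steps) * step
    return per_row, per_row * int(rows)


for rra in ('RRA:AVERAGE:0.5:1:1440', 'RRA:AVERAGE:0.5:7:1440',
            'RRA:AVERAGE:0.5:30:1440', 'RRA:AVERAGE:0.5:360:1440'):
    per_row, covered = rra_coverage(rra)
    print('%-26s one value per %5d s, covering %5.1f days'
          % (rra, per_row, covered / 86400.0))
```

Under that assumption each RRA stores the same number of rows (1440) but covers a day, a week, a month and roughly a year respectively, which is the usual reason to have several.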

Creating RRDs - 'rrdtool create'

Creates a round robin archive in the named file(==round robin database).

Actually, a single .rrd can contain multiple data sources, and this can be handy if you have several directly related types of data or exact timing is important. I personally prefer a single data source per file, purely because this is slightly easier to manage.

A data source consists of:

- an identifying name

- a type (note: mostly has effect on graphing)

- gauge value (e.g. for temperature; not time-divided when graphed): GAUGE

- incremental (e.g. 'bytes moved by network interface', 'clock ticks spent idling'): COUNTER

- rate (e.g. 'bytes moved the last minute'; assumed to be reset on reading): ABSOLUTE

- the heartbeat, which is the maximum time between incoming values. If exceeded, the value will be stored as 'unknown'.

- a minimum and maximum value, as protection against stray erroneous values; if exceeded, 'unknown' will be stored

The round robin archive the data source(s) get recorded in also has to be specified. The information it wants consists of:

- the 'consolidation function' : Essentially a function applied to the bracket of collected data when it is consolidated. This can be used to store several types of statistics on one data source, for example the minimum, maximum, and average, useful e.g. when measuring temperature. On simple counters, just 'average' is enough.

- the 'x files factor' : The idea is that if too many of the data points in a consolidation interval are unknown, the consolidated value should be unknown too (e.g. to avoid spikes because you're averaging over too few values). The value, between 0 and 1, is the fraction of data points in an interval that may be unknown while the result is still considered known. The value you should use here depends on what you're recording.
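How the consolidation function and the x files factor interact can be sketched in Python as follows. This is a simplified model rather than rrdtool's implementation; in particular, treating 'exactly at the limit' as still known is an assumption.

```python
def consolidate(values, cf='AVERAGE', xff=0.5):
    """Consolidate one bracket of samples; None stands for an unknown sample.

    Returns None (unknown) when more than the xff fraction of samples is unknown.
    """
    known = [v for v in values if v is not None]
    unknown_count = len(values) - len(known)
    if not known or unknown_count > xff * len(values):
        return None
    if cf == 'AVERAGE':
        return sum(known) / len(known)
    if cf == 'MIN':
        return min(known)
    if cf == 'MAX':
        return max(known)
    raise ValueError('unsupported consolidation function %r' % cf)


print(consolidate([40, None, 42, None]))        # half unknown: still averaged
print(consolidate([40, None, None, None]))      # three quarters unknown: None
print(consolidate([40, 45, 42, 41], cf='MAX'))  # keeps the peak instead of the average
```

Storing both an AVERAGE and a MAX archive for the same data source is how you keep short peaks visible after consolidation.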

Example: hard drive temperature

While rrdtool itself likes to think in amounts, I like to think about it from the other direction, considering:

- how often will I update (15 sec)

- how big is the interval that will be consolidated into (30 sec) - has to be a multiple of the update interval

- how big a backlog do I want, say, 1 week

Say you

- take a value every fifteen seconds (note: so from your own loop or daemon, not via cron, which cannot run more often than once a minute)

- want to store a value for every thirty seconds, and

- want to keep a week's worth of data.

You calculate

- how many data points go into a consolidation

- here 2 (two 15-second values into one consolidated 30-second value)

- how many past consolidated values to store

- 2880 half-minutes/day times 7 days/week is 20160 data points

So for example:

rrdtool create test.rrd --step 15 DS:test:GAUGE:25:U:U RRA:AVERAGE:0.5:2:20160

The most important numbers in there are the 15, the 2, and the 20160.
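The same calculation as a small Python helper, going from 'how I think about it' to the numbers rrdtool wants. The function name and parameter names are made up for illustration.

```python
def rra_numbers(update_every, consolidate_to, keep_seconds):
    """Return (steps per consolidated value, rows to keep) for an RRA definition."""
    if consolidate_to % update_every != 0:
        raise ValueError('consolidation interval must be a multiple of the update interval')
    steps = consolidate_to // update_every
    rows = keep_seconds // consolidate_to
    return steps, rows


steps, rows = rra_numbers(update_every=15, consolidate_to=30, keep_seconds=7 * 24 * 3600)
print('--step 15  RRA:AVERAGE:0.5:%d:%d' % (steps, rows))
```

For the values used here this gives 2 steps and 20160 rows, matching the example command.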

In more detail...

- RRA:AVERAGE:0.5:2:20160 means:

- RRA ('round robin archive')

- AVERAGE - The aggregate function, to use in consolidation.

- 0.5 - x files factor

- 2 - steps; how many samples to consolidate

- 20160 - amount of samples

- DS:test:GAUGE:25:U:U means:

- DS ('data source')

- test - the name within the archive. You can create more than one DS in a .rrd archive.

- GAUGE - type; see above. Temperature is a typical gauge value.

- 25 - the heartbeat. I like to have this a bunch of seconds larger than the interval, to avoid UNKNOWNs purely because a script that does a lot of rrdtool updates takes a while. Not strictly the most accurate, but hey.

- U:U - the minimum and maximum values - an attempt to add a value outside this range is considered invalid. Sometimes this is useful to filter out nonsense values that could be generated by a plugin. I tend not to use it.

Graphing - 'rrdtool graph'

Example:

rrdtool graph /var/stat/img/load.png \
  -w 200 --title "Load average" -E -l 0 -u 1 -s -86400 \
  DEF:o=/var/stat/data/load1.rrd:load1:AVERAGE \
  DEF:t=/var/stat/data/load15.rrd:load15:AVERAGE \
  CDEF:od=o,100,/ \
  CDEF:td=t,100,/ \
  AREA:td#99999977:"15-minute" \
  LINE1:od#664444:"1-minute"

This shows loading data sources, basic calculating, and different draw types:

- DEF loads a data source from a file, and assigns it a name.

- CDEF is somewhat advanced, but necessary in this case: the data here was stored as integers, namely 100*(the real load factor), and here's where you can divide it back. This creates an extra graphable variable. (The expression syntax is reverse polish)

- AREA and LINE are two common ways to graph data. You usually specify what named variable to graph, the color, and the title. The color is hex, and can be RGB or RGBA. The example AREA is somewhat transparent.
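If reverse polish notation is unfamiliar: operands are pushed onto a stack, and each operator consumes the top two. A tiny Python evaluator for just the arithmetic subset illustrates how o,100,/ is read. This only mimics the idea; rrdtool's RPN has many more operators and works on whole series.

```python
def rpn(expression, variables):
    """Evaluate a tiny subset of rrdtool-style RPN: numbers, names, and + - * /."""
    operators = {
        '+': lambda a, b: a + b,
        '-': lambda a, b: a - b,
        '*': lambda a, b: a * b,
        '/': lambda a, b: a / b,
    }
    stack = []
    for token in expression.split(','):
        if token in operators:
            b = stack.pop()          # the operand pushed last is the right-hand side
            a = stack.pop()
            stack.append(operators[token](a, b))
        elif token in variables:
            stack.append(variables[token])
        else:
            stack.append(float(token))
    return stack.pop()


print(rpn('o,100,/', {'o': 57.0}))   # 57 was stored as 100*load, so this prints 0.57
```

So CDEF:od=o,100,/ reads as od = o / 100.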

Options used here:

- --title "foo" sets the title of the graph

- -s -86400 sets how many seconds back to graph from (-86400 is a day)

- -l 0 and -u 1 set (non-rigid) lower and upper bounds on the y scale. Zero and one will be useful for e.g. load average: the axis will scale up to larger values, and will not sometimes show e.g. 200m, 400m, 600m (as in milli) when the largest value is rather small. This can also be useful to e.g. set a memory graph's upper bound to how much RAM you have. Note that to force this range rather than to make it only work when the data lies within that range and not beyond, you also need -r (--rigid). There are other autoscaling alternatives that may fit other uses better.

- -c: specify overall colors, such as -c BACK#ffffff -c SHADEA#eeeeee (see docs for those names)

- -w and -h make the graph a specific pixel width and height. (By default this is the size of the plot area, not of the whole image; see --full-size-mode)

- -E: gives prettier lines instead of the truer-to-data blocky ones.

- -b 1024 to make 'kilo' and 'mega' and such be binary-based, not decimal-based, which is useful for accurately showing drive space and such.

Styling

Semi-sorted

Munin notes

Munin wraps a bunch of convenience around rrdtool, and makes it easier to aggregate from many hosts.

A munin setup has one master, and one or more nodes.

- Nodes report data when asked by a master.

- A master asks the nodes for data and creates graphs and such.

In a single-node setup, a computer reports on itself, so that host is node and master. You will probably not care about the fancy networked options in this case.

(A node could also be reporting to multiple masters. This could in theory be useful if you want to generate a report on a workstation, and have a centralized overview of workstations.)

On using plugins

There is a "plugins to actively use" directory (usually /etc/munin/plugins).

Its entries are usually symlinks into a larger "plugins you have installed and could use" directory. (location varies, e.g. /usr/share/munin/plugins/ or /usr/libexec/munin/plugins/)

Plugins are picked up after you restart munin-node. (sometimes earlier?(verify))

- Manual setup

If I wanted to use the ntp_offset plugin, I might do:

cd /etc/munin/plugins
ln -s /usr/libexec/munin/plugins/ntp_offset ntp_offset

When a plugin name ends with an underscore, the text that follows is an argument.

This is often done to ease running separate instances of the same plugin script, for example:

cd /etc/munin/plugins
ln -s /usr/libexec/munin/plugins/ip_ ip_eth0
ln -s /usr/libexec/munin/plugins/ip_ ip_eth1
ln -s /usr/libexec/munin/plugins/ping_ ping_192.168.0.1
ln -s /usr/libexec/munin/plugins/ntp_ ntp_192.168.0.1

Note that other configuration (e.g. database logins) still goes into munin configuration files.

- Semi-automatic setup

You can ask munin to suggest plugins that will probably work. You can get a summary with:

munin-node-configure --suggest | less

I prefer to get this in a more succinct form -- namely the commands that activate the plugin:

munin-node-configure --suggest --shell | less

If you want your own plugin to report itself here, look at the autoconf and suggest parameters. (and magic markers)

Testing and troubleshooting

If running a plugin fails but you don't know why, look at the logs:

tail -F /var/log/munin/*.log

...and see what errors scroll past

- you can wait for the next system run

- or invoke it yourself, something like sudo -u munin munin-cron (probably varies somewhat between systems, and this messes with the sampling somewhat so see below for a subtler variant)

- For example, my apache_volume was broken because I'd set a wrong host in the configuration, and the log showed the timeouts.

If there's no error in logs, you may want to edit the plugin script.

- For example, the hddtemp script does a 2>/dev/null, meaning silent failure on e.g. permission errors.

Sometimes it helps to run a specific plugin.

While you can run it directly, it's more representative if munin runs it, particularly if the problem is permissions or environment, but also because some plugins receive some config from munin itself.

It seems the best check is to ask the munin daemon directly (config and/or fetch), e.g.

echo "config mysql_queries" | netcat localhost 4949
echo "fetch mysql_queries" | netcat localhost 4949

You should see the plugin's config or value output, e.g.:

delete.value 16827
insert.value 15415
replace.value 1201
select.value 1594114
update.value 144234
cache_hits.value 1017162
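If you want to do that check from a script rather than netcat, a rough Python equivalent could look like this. It assumes the node listens on localhost:4949 as above, greets with a one-line banner, and ends config/fetch replies with a line containing only a dot; the function names are made up.

```python
import socket


def ask_node(command, host='localhost', port=4949, timeout=5.0):
    """Send one command (e.g. 'fetch mysql_queries') to munin-node, return the reply lines."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        stream = sock.makefile('rw', encoding='utf-8', newline='\n')
        stream.readline()                 # skip the '# munin node at ...' banner
        stream.write(command + '\n')
        stream.flush()
        lines = []
        for line in stream:
            line = line.rstrip('\n')
            if line == '.':               # a lone dot ends config/fetch replies
                break
            lines.append(line)
        return lines


def parse_values(lines):
    """Turn ['delete.value 16827', ...] into {'delete': '16827', ...}."""
    values = {}
    for line in lines:
        key, _, value = line.partition(' ')
        if key.endswith('.value'):
            values[key[:-len('.value')]] = value
    return values


# usage, on a host that runs munin-node:
#   print(parse_values(ask_node('fetch mysql_queries')))
```

Values are kept as strings here, because a plugin may also report U for unknown.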

A decent second choice is to make munin itself run the plugin

sudo -u munin munin-run mysql_queries

If there is any difference between using munin-run and netcat, that points to environment stuff, e.g. PATH or suid or whatnot. Look at munin's central plugin config file(s).

logs say "sudo: no tty present and no askpass program specified"

See Linux_admin_notes_-_users_and_permissions#no_tty_present_and_no_askpass_program_specified for the general reason, and generally the better fixes.

One way around the issue is to not use sudo, but configure munin to run the plugin as root.

In /etc/munin/plugin-conf.d/munin-node add something like:

[<plugin name>]
user <user>
group <group>

Not seeing a graph

Usually because not a single datapoint has been stored yet. (logs will say something like rrdtool graph did not generate the image (make sure there are data to graph))

If it doesn't show up after a couple of minutes, the plugin is probably failing - look at your munin-update.log

Can't exec, permission denied

Possibilities:

- directory not world accessible (by munin)

- lost execute bit

- TODO

On intervals

It seems like:

- the storage granularity is 5 minutes

- the cronjob updating values typically runs every 5 minutes because of it

Recent munin (master since 2.0.0) has a per-plugin(-only) update_rate (in seconds), the interval at which it expects values

- note that changing it effectively asks to (delete and) recreate the RRD files.(verify)

Sub-minute is possible but probably means you need something other than cron for the updating.

On writing plugins

A plugin is any executable that reacts at least to being run in the following two ways:

- without argument - the response should be values for the keys that the configuration mentions to exist, like:

total.value 4
other.value 8

- With one argument, config, in which case it should mention how to store and how to graph the series. A simple example:

graph_title Counting things
graph_vlabel Amount
total.type GAUGE
total.label Total
other.type GAUGE
other.label Other
other.info Things that do not fall into other categories
other.draw AREA
other.colour 336655

The names of series (e.g. the other and total above) must conform to, basically, the regexp [A-Za-z_][A-Za-z0-9_]*.
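When series names come from data (hostnames, usernames, mount points), you need some way to force them into that shape. A minimal Python sketch, assuming a leading underscore is acceptable (the example plugin on this page relies on that); the function name is made up:

```python
import re


def clean_fieldname(name):
    """Map an arbitrary string onto a name made of letters, digits and underscores only."""
    cleaned = re.sub(r'[^A-Za-z0-9_]', '_', name)
    if not cleaned or cleaned[0].isdigit():
        cleaned = '_' + cleaned      # names may not be empty or start with a digit
    return cleaned


for raw in ('eth0', '192.168.0.1', '/var/log'):
    print('%-12s -> %s' % (raw, clean_fieldname(raw)))
```

Note that this can map different inputs to the same name (a-b and a.b both become a_b). Hex-encoding the name, as the example plugin does, avoids such collisions at the cost of readability.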

Example plugin I wrote some time ago:

#!/usr/bin/env python3
""" Reports the amount of logins per user, based on the 'w' command.

    Always reports all seemingly-real users, so that history won't fall away when they are not logged in.
"""
import sys
import os
import subprocess


def user_count():
    " returns a sorted list of (username, login count) tuples "
    counts = {}

    # figure out users to always mention (as 0)
    with open('/etc/passwd') as passwd:
        for line in passwd:
            if line.count(':') != 6:
                continue
            user, _, uid, gid, name, homedir, shell = line.split(':')
            if user in ('nobody',):  # often useful to leave in here
                counts[user] = 0
                continue
            if 'false' in shell or 'nologin' in shell:
                continue  # skip, not a user who can log in
            if not os.access(homedir, os.F_OK):
                continue  # skip, homedir does not exist
            if '/var' in homedir or '/bin' in homedir or '/usr' in homedir or '/dev' in homedir:
                continue  # skip, mostly daemons
            counts[user] = 0

    # count current logins: 'w' prints one line per login session
    proc = subprocess.Popen('w --no-header -sui', stdout=subprocess.PIPE,
                            shell=True, universal_newlines=True)
    out, _ = proc.communicate()
    for line in out.splitlines():
        if not line.strip():
            continue
        username = line.split()[0]
        counts[username] = counts.get(username, 0) + 1

    return sorted(counts.items())


def fieldname(user):
    " hex-encode the username so that any username becomes a valid series name "
    return '_' + user.encode('utf-8').hex()


if len(sys.argv) == 2 and sys.argv[1] == "autoconf":
    print("yes")
elif len(sys.argv) == 2 and sys.argv[1] == "config":
    print('graph_title Active logins per user')
    print('graph_vlabel count')
    print('graph_category system')
    print('graph_args -l 0')
    for user, count in user_count():
        safename = fieldname(user)
        print('%s.label %s' % (safename, user))
        print('%s.draw LINE1' % safename)  # could do AREA/STACK instead, but this works...
        print('%s.type GAUGE' % safename)
else:  # not config: current values
    for user, count in user_count():
        print('%s.value %s' % (fieldname(user), count))

Some of the things you can use:

- graph_title

- graph_args - (user-determined) arguments to pass to rrdtool graph - say, --base 1024 -l 0

- graph_vlabel - y-axis label

- graph_scale - (yes or no) Basically, whether to use m, k, M, G prefixes, or just the numbers as-is

- graph_info - basic description

- graph_category - If you can fit into an existing category, use that. Otherwise use other (which is the default if you don't mention it). At least in older versions you can't use arbitrary strings (see the notes on the report below).

- seriesname.type - one of the rrdtool types (GAUGE, ABSOLUTE, COUNTER, DERIVE)

- seriesname.label - label used in graph legend. Seems to be more or less required.

- seriesname.draw - how to draw the series (think LINE, AREA, STACK). Defaults to LINE2(verify)

- seriesname.info - description of series (shown in reports)

- seriesname.colour - what colour to draw it with. By default this is automatic

- seriesname.min - minimum allowed value (insert-time)

- seriesname.max - maximum allowed value (insert-time)

- seriesname.warning - value above which a value should generate a warning

- seriesname.critical - value above which a value is considered critical

- seriesname.cdef - lets you add rrdtool cdefs

- seriesname.negative - (cdef-based?) quick hack to easily mirror a series on the negative side of the y axis

- seriesname.graph - whether to graph it, yes or no. Can be useful in multigraph.

Notes:

- if you want something to show up in the legend but not the graph, seriesname.draw LINE0 may be simplest

- it looks like values for unknown variables are ignored (verify)

- for multiple graphs from one plugin, basically just mention

multigraph name

as a separator between complete configs, and likewise between the generated value sets.

- When debugging drawing details and you don't want to wait five minutes for the graph to update, run something like: (full path because it's probably not in your PATH. It may be somewhere else.)

sudo -u munin /usr/share/munin/munin-graph

- The introduction mentions that config is read when the plugin is first started.

- It also does so when you tell the service to reload(verify), and it looks like munin-update may do so at every run(verify)

- You can change the config later, adding series (munin will automatically create storage for any new series it sees) and removing series (backing storage will stick around unused), though you probably want to be consistent about the names.

- Note: If series appear and disappear in your config, their history will appear or not depending on whether they were mentioned the last time the images were generated -- which can be confusing.

- For example, I wrote a 'show me the largest directories under a path' plugin, but that meant that any directory that moved would be removed from all graphed history (which also shows more free past space than there really was).

- For other cases you can fix this easily enough, e.g. 'login count per user' can look at /etc/passwd and list everyone who seemingly can log in.

- there are other possible arguments to the plugin executable, such as autoconf and suggest for the "suggest what plugins to install" feature. So don't assume a single argument is config
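As an illustration of the multigraph note above, here is a Python sketch of a plugin that emits two graphs. The graph names, series names and values are all hypothetical, and it leaves out whatever capability negotiation your munin version may require for multigraph plugins.

```python
import sys

GRAPHS = {   # hypothetical graph names, titles, and current values
    'things_count': {'title': 'Counting things', 'series': {'total': 4, 'other': 8}},
    'things_size':  {'title': 'Size of things',  'series': {'bytes': 1024}},
}


def output(mode):
    """Return the lines to print for 'config', or for a value fetch otherwise."""
    lines = []
    for graph, spec in sorted(GRAPHS.items()):
        lines.append('multigraph %s' % graph)      # separator: starts the next graph
        if mode == 'config':
            lines.append('graph_title %s' % spec['title'])
            for name in sorted(spec['series']):
                lines.append('%s.label %s' % (name, name))
        else:
            for name, value in sorted(spec['series'].items()):
                lines.append('%s.value %s' % (name, value))
    return lines


mode = sys.argv[1] if len(sys.argv) > 1 else 'fetch'
print('\n'.join(output(mode)))
```

The same multigraph line appears in both the config output and the value output, so munin can tell which graph each block belongs to.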

On keeping some state

On config and environment

Munin's plugin-conf.d/ (usually at /etc/munin/plugin-conf.d/) sets up any necessary environment for plugins, and is often primarily used for centralized configuration.

(note: files are read alphabetically. In case that matters, which it generally doesn't)

It's not unusual to see a lot of stuff in a single file (often called munin-node), and a few not-so-core plugins having their own files.

For syntax, see [1]

The most interesting are probably:

- user (and group): run plugin itself as this user/group

- easy for an admin to change this structurally

- env.something sets that variable in the process's environment

- For example default warning/error levels that apply to all instances

- For example database password - good for multiple instances, and can be useful permission-wise
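On the plugin side, env.something values simply show up as environment variables. A minimal Python sketch of reading them with fallbacks; the setting names dbhost and warning are hypothetical:

```python
import os


def plugin_setting(name, default=None):
    """Read a value that plugin-conf.d set via an 'env.<name> <value>' line."""
    return os.environ.get(name, default)


# hypothetical names: these would come from 'env.dbhost ...' and 'env.warning ...'
# lines in the plugin's section of the config
dbhost = plugin_setting('dbhost', 'localhost')
warning = float(plugin_setting('warning', '90'))
print('would connect to %s, warn above %s' % (dbhost, warning))
```

Having defaults in the plugin means it still runs by hand, outside munin, which helps when troubleshooting.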

On the report

To change the categorisation of the graphs, you're probably looking for graph_category

A plugin often doesn't explicitly have it; you can add one. It used to be a fixed set, but since a few years ago you can invent your own categories (verify)

http://munin-monitoring.org/wiki/graph_category_list

The CGI zoom graph will require you to hook in the munin-cgi-graph CGI script.

Assuming

- that script is in /usr/lib/munin/cgi/

- you put munin in its own vhost (relevant to where to mount it, path-wise, below it's on /)

- you have either the FastCGI or more basic CGI module

Then you'll need to add something like

ScriptAlias /munin-cgi/ /usr/lib/munin/cgi/

<Directory "/usr/lib/munin/cgi">
    Options +ExecCGI
    <IfModule mod_fcgid.c>
        SetHandler fcgid-script
    </IfModule>
    <IfModule !mod_fcgid.c>
        SetHandler cgi-script
    </IfModule>
</Directory>

<Directory /usr/lib/munin/cgi>
    Satisfy Any
</Directory>

On the CGI (manual-input) graph

If it doesn't work, you've probably done the same thing I did: just placed the html output somewhere it is served.

The CGI graph (munin-cgi-graph), well, needs CGI. Look at: http://munin-monitoring.org/wiki/WorkingApacheCGIConf

The URL path seems to default to /munin-cgi/somethingorother

Ganglia notes

Made to monitor the same things on many compute nodes in a cluster. Built on RRDtool.

http://ganglia.sourceforge.net/

Nagios notes

Grafana notes

Grafana is a web frontend that displays dashboards, mainly geared towards timeseries.

Nice features

- redraws live (5s is fastest by default but you can change that)

- various widget types

- more available via plugins

- can easily vary time aggregation

- easy to change dashboard layout, time, labels, colors, etc.

- It can take data from a dozen different types of stores (e.g. timeseries databases, general-purpose databases)

Limitations:

- not the fastest way of drawing lots of data

- though properly aggregating data and playing with the aggregation intervals works around that, and generally, showing visually simpler data is what you want anyway

- whether fetching over large timespans is fast enough depends on the backend's speed for the given query

- widgets that take something other than time-based data may be more finicky, or not exist

- you can't wildcard-select to create many series (e.g. ping_for_host_* so it'd pick up things as you add them) -- you have to add each manually

- to be fair, in most cases that's a handful and it's not hard to be complete

- but when e.g. you're monitoring hundreds of disks you want shown individually, you need to manually insert them all, and hope you don't miss any

- there are semi-workarounds:

- you can select an aggregate over many fields via regex

- separately auto-generating the .json file, then use import. That's a semi-automated solution, though itself slightly awkward.

- the webpage seems a bit memory-leaky in some browsers(verify)

- enterprise/free versions always make me nervous

Queries

Basic pokery and issues

Graphite notes

Graphite can be seen as an easier and more scalable alternative to rrdtool. (in that it consists largely of round-robin time-series and mostly line graphs)

Written in python. Consists of:

- data collection

- scripts

- can feed in over network with simple syntax

- carbon, which aggregates data

- data collection scripts send their data here

- carbon is responsible for storing it in whisper

- will cache data not yet written (fetchable by the webapp, to help the real-time aspect)

- whisper, a round-robin database

- Much like a rrdtool database, but slightly more flexible, and its IO scales a little better when you want to record a lot of different things (even though it uses a little more CPU time)

- webapp

- Served via Django (which the creator runs under mod_python, but you may like your own way)

- fetches from whisper, and carbon-cache

- calls graphing via Cairo (pycairo)

- user interface with ExtJS

- can use memcached
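The 'simple syntax' for feeding data over the network is, as far as I know, one line of text per sample: metric path, value, and Unix timestamp. A Python sketch, assuming carbon's plaintext listener is on localhost port 2003 (the usual default, but check your setup); the metric name is made up:

```python
import socket
import time


def carbon_line(path, value, timestamp=None):
    """Format one sample in carbon's plaintext form: 'path value timestamp'."""
    if timestamp is None:
        timestamp = int(time.time())
    return '%s %s %d\n' % (path, value, timestamp)


def send_to_carbon(lines, host='localhost', port=2003):
    """Send already-formatted lines; host and port are assumptions about your setup."""
    with socket.create_connection((host, port), timeout=5.0) as sock:
        sock.sendall(''.join(lines).encode('ascii'))


print(carbon_line('servers.myhost.loadavg.1min', 0.57, 1700000000), end='')
# usage, with a carbon instance running:
#   send_to_carbon([carbon_line('servers.myhost.loadavg.1min', 0.57)])
```

The dots in the metric path are what the webapp uses to build its tree of series.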

See also: