Color notes - color spaces

Types of color models

TODO: move this down

There are more color spaces than you can shake a stick at, but there are only a few distinct ideas behind them, and most come from already mentioned systems and effects.

The idea that any colour can be made from three primaries is the tri-chromatic theory (or 'tri-stimulus', a slightly more general term; they are not entirely consistently used, but people (should) tend towards tri-stimulus when the stimuli aren't (necessarily) monochrome lights).

Note that the dimensionality of color perception means you need three parameters to model what the eye does, no matter what you base the model on.

The red-green, yellow-blue and light-dark oppositions inherent in the eye are approximately modeled in those systems (they are opposite hues), and more accurately in various systems from CIE, like CIE Lab and CIE Luv.

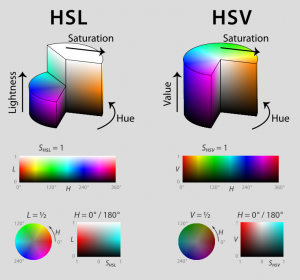

This is quite similar to systems that model the way humans describe colors, which is mostly by tint (general colour type), and often supplemented by dark/brightness and/or the saturation of colours. This is realized in models like HSV/HSB/HSL (same idea, different details and/or number ranges) and slightly differently in HLS.

Video and TV standards like Y'IQ, Y'UV, and Y'CbCr do it a little differently yet, because of historical developments. They split signals into one luminance (brightness) and two chrominance channels, so that black and white TVs would use only luminance, and colour TVs additionally used the chrominance channels.

This stuck around for a few reasons, one being that we are more sensitive to general brightness noise than to color noise. This means we can favour one or the other in analog signals and - more importantly - in lossy image and video compression.

Humans also commonly compare colours and intensities. Without context, people can identify no more than a few hundred colors. However, give them directly adjacent, uniformly colored areas and it turns out they can tell the difference between millions - in a just-noticeable-difference sort of way. Interestingly, we can distinguish more shades of green than of other colours, though this is sometimes exaggerated.

Difference measures and human comparison are the motivation behind CIE colour reference systems such as CIE XYZ, refined in later variations and used in definition of other color spaces, such as the more practical CIE Lab. Of course, the eyes are quirky so CIE spaces are defined with conditions and disclaimers, but eye trickery aside, the approximation is quite accurate for most things.

Color management, Gamut

The point of color management is to translate device-specific numeric values in a way that keeps colors the same from device to device, so that each device can try to display the color as correctly as it can manage - and particularly that last part varies.

Put another way, color corrected images store absolute color references, and each device just tries its best. One important thing to note is that a monitor is also a device. If it is miscalibrated, the way a photo looks (and corrections made by you to make it look good) will not be reproduced elsewhere, in the same way that you having tinted glasses on would make you mis-correct the image.

A related concept is gamut, the name for the range of colors something can produce or receive. The gamut of the human eye is rather larger than the gamut of monitors and printers, for example.

Technically, a gamut is only limited (...more limited than the eye's...) if you define it that way, but you generally need to. In images and most other storage, there's only so much space for each pixel. Consider that defining the same number of colors over a larger space means the color resolution - the space between each defined color - is coarser. Whether this is actually noticeable, or whether it is more or less important than the size of the gamut, is arguable, though.

When visualizing the extent of a gamut in some specific color space for reference, a gamut is shown as a shape, often on Lab with just the saturated colors - where most of the variation is. More accurate would be to show Lab at a few intervals of luminance, and show the area covered by a standard / production method at each. This would show more clearly that the saturated Lab image applies more directly to monitors and other light-additive methods, as they have an easier job showing bright, often saturated colors, while the subtractive mixing of inks makes darker colors the more likely result, so print gamuts tend to cover less area.

It would also show the variation between printers.

Because color resolution is limited, particularly early color spaces were made for specific purposes, with the intended gamut in mind.

Color resolution is limited; when used in image storage, limited bits per pixel mean that if the gamut covers more of XYZ, the space between the individually referenced colors is larger. Pro Photo is generally used only with 16 bits per channel, to avoid this causing effects such as posterization.

This also means that when you're going just for monitor viewing and printing, gamut size doesn't matter as much as you may think. While sRGB is a little tight for print (although it covers many cheaper printers), Adobe RGB comfortably contains almost any printer's range. Pro Photo is mainly just crazy in this context. Most web pages exclaiming the joys of Pro Photo use insanely pathological test images.

Some relatively common spaces:

- sRGB (1996) is geared for monitors, and was made to standardize image delivery on the web. It has a fairly small gamut.

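- CMYK is geared for print and is modeled on some inks. Its gamut is shaped differently from sRGB's (typical print gamuts are smaller overall, though they can extend past sRGB in some cyans and greens), and it approximates most printers well.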

- ColorMatch RGB seems similar to sRGB, possibly a little wider

- Adobe RGB (1998) is larger than sRGB, covering more saturation and more blues and greens. While Adobe RGB was made to approximate CMYK printability, a decent part of the difference with sRGB is actually unprintable.

- Pro Photo RGB is one of the largest available spaces, covering more saturation, most colors, and even colors we can't see, let alone print.

Color spaces that are made for print tend to acknowledge there is only so much inks can reproduce and may model specific inks, spending more detail in likely colors than extremely saturated ones. Even paper's gamut can be measured - separately from ink, that is.

When using a DSLR camera you usually get a choice between sRGB and Adobe RGB. Pretty much every DSLR has a respectable gamut rather larger than sRGB, and often somewhat larger than Adobe RGB, so if you want to preserve as much color as possible (and this applies mostly to quite saturated colors) while shooting JPEGs, select Adobe RGB. You can also use RAW, which stores sensor data - in other words, the data before the choice, so the camera setting is just a hint that tags along, and the choice of color space when saving to something non-RAW is up to the RAW converter.

(This is also one of the few cases in practice in which choosing a color space actually matters, because most everything non-raw already had its color clipped by the gamut of its color space. Note also that all this is fairly specific to bright saturated colors)

Note that various simpler image viewers do not convert for color spaces, meaning they generally wash out images. Photo editors like Photoshop often do, of course.

See also:

- http://www.oaktree-imaging.com/knowledge/gamuts

- http://www.cambridgeincolour.com/tutorials/sRGB-AdobeRGB1998.htm

- http://www.camerahobby.com/Digital_GretagMacBeth_Eye-One.htm

CIE color space research

The Commission Internationale de l'Eclairage (CIE) has produced several standards and recommendations. Probably the most significant is XYZ; the ones most used in practice seem to be the CIE XYZ, CIE Luv and CIE Lab color spaces.

There are some other notable CIE happenings, such as the 1924 (pre-XYZ) Brightness Matching Function used to standardize luminance.

The next few sections are what I think I've been able to figure out, but might well be incorrect in parts:

(1931) CIE Standard observer functions

XYZ is based on the 'CIE standard observer functions' (or 'CIE Color Matching Functions'), released in 1931, based on about two dozen people matching a projected area of color (covering about 2 degrees of their field of vision) with a color they mixed themselves from red, green and blue light sources (with known SPDs).

There was another observer function released in 1964 (CIE 1964 supplementary standard colorimetric observer) based on new tests with about four dozen people, where the light covered 10 degrees of their field of vision.

The size is mentioned because the larger one is more appropriate for reactions to large uniform surfaces, and the smaller one more appropriate for detail, such as images.

The result for both observer functions is a set of transfer functions from energy to general human visual response.

(1931) CIE XYZ

XYZ was the first formal observer-based attempt at mapping the spectrum to a human-perceptual tristimulus coordinate space. It was (and stayed) a common and widely accepted reference space because for a long time it was the only useful standard.

CIE XYZ can be seen as a three-dimensional space, in which the human gamut is a particular shape, occasionally referred to as the color bag. Coordinates outside the color bag don't really refer to anything.

XYZ's dimensions are rather abstract, imaginary primaries, harder to think about than, say, RGB or CMYK. The coordinates were defined in a way to guarantee the following: ((verify) these)

- When the coordinates have about the same value (eg. x,y,z each 0.3), the SPD is fairly even, which we see as white.

- All coordinates are positive for colors possible in physical reality (was a useful detail for computation at the time)

- The Y tristimulus value is directly proportional to the luminance (energy-proportional) of the additive mixture.

- Chromaticity can be expressed in two dimensions, x and y, as functions of X,Y,Z

- X,Y,Z coordinates range from 0.0 to approximately 100.0.

- x,y,z coordinates are a normalization (x=X/(X+Y+Z) and analogous), with practical values ranging from 0.0 to about 0.8

- this definition also implies x+y+z=1, so that specifying two coordinates is a full color reference.

Unless specifically chosen and stated otherwise, CIE XYZ uses the 1931 'CIE 2° Standard Observer' data (and not the 1964 10° data), and so do color spaces which use XYZ as an absolute color reference.

One thing XYZ does not ensure is that you can measure perceptual distance in it. This was simply not a goal of the 1931 experiments. It has a disproportionally large volume (or area, in the spade-shaped chromaticity diagram) for green, for example. A fairly pathological case for the difference: taking a decent set of color pairs perceived as being equally distant, the largest of the distances (in XYZ space) is about twenty times as large as the smallest.

Chromaticity/UCS diagrams

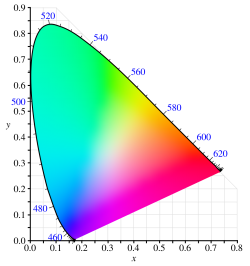

The chromaticity diagrams mix bright colours in a roughly opposite way, with white in the middle, and are convenient for white points and primaries, but also for humans picking out (idealized) colors. They do not have brightness, which makes them incomplete as a total color reference.

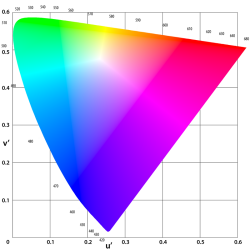

There are three distinctly different colorful CIE diagrams that show clear colors, and have one straight edge and one curved one on which the ROYGBIV range is often marked out in wavelengths.

These are:

- The 1931 chromaticity diagram looks like a curvy spade leaning to the left, and uses x,y coordinates

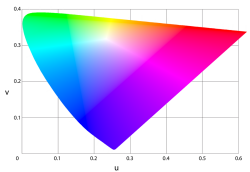

Two revisions, based on studies in these years (and both referred to as UCS):

- The 1960 CIE-UCS chromaticity diagram mostly scales/warps the 1931 one, and uses u,v coordinates. This one is less common to see than:

- The 1976 CIE-UCS chromaticity diagram, which is much like the 1960 one but more accurate(verify), and uses u',v' coordinates. This one looks roughly like a triangle.

Yearless references to 'CIE UCS' almost always refer to the 1976 version. Descriptions showing quotes/primes (u',v') indicate the 1976 one, but since not everyone puts them there, their absence does not necessarily mean the 1960 one.


The 1931 CIE Chromaticity diagram

The CIE chromaticity diagram uses x,y coordinates from the XYZ space. For completeness:

x = X / (X + Y + Z)

y = Y / (X + Y + Z)

There is an xyY space based on this, adding luminance. This is a fairly convenient way to refer to an XYZ color without having to convert to and back from another space.
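As a minimal sketch of the xyY idea, the following converts between XYZ and xyY using the normalization above. The function names are made up for illustration, and returning zeros for black (where chromaticity is undefined) is an arbitrary choice.

```python
def xyz_to_xyy(X, Y, Z):
    """Convert CIE XYZ to xyY (chromaticity x, y plus luminance Y)."""
    total = X + Y + Z
    if total == 0:
        # Black has no defined chromaticity; (0, 0) is an arbitrary stand-in.
        return 0.0, 0.0, 0.0
    return X / total, Y / total, Y


def xyy_to_xyz(x, y, Y):
    """Convert xyY back to XYZ, using z = 1 - x - y."""
    if y == 0:
        return 0.0, 0.0, 0.0
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return X, Y, Z


# A D65-like white, roughly x=0.3127, y=0.3290
print(xyz_to_xyy(0.95047, 1.0, 1.08883))
```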

The color distance observation data, when plotted on this space, look like differently-sized ellipses, which means that using coordinate distances as a measure of color distances will be inaccurate, and noticeably so.

The 1960 CIE-UCS Chromaticity diagram

The CIE Uniform Chromaticity Scale (UCS) originates in the endeavour to create a perceptual color space, one in which coordinate distances are roughly proportional to perceived color differences.

Among other things, it resulted in a revision of the original chromaticity diagram. It is mostly a rescaling:

u = 4X / (X + 15Y + 3Z)

v = 6Y / (X + 15Y + 3Z)

The distance observations, when plotted on this diagram, are roughly circular, making it a little more accurate for (direct) color distance calculation.

Most people either consider the 1960 standard obsolete and use the 1976 version, or are unaware there are two UCS diagrams.

The 1976 CIE-UCS Chromaticity diagram

There was another study in 1976, leading to the definition of:

u' = 4X / (X + 15Y + 3Z)

v' = 9Y / (X + 15Y + 3Z)

...although it's regularly written without the quotes on the u and v.

As you can see, the only difference with the 1960 UCS is the scale of the second coordinate (v' = 1.5 v), which e.g. lets in some more green. This allows for better estimation of perceptual color differences based on just these coordinates.
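The two UCS definitions can be put side by side in a short sketch. The function names are hypothetical, and the zero-denominator handling for black is an arbitrary choice.

```python
def ucs_1960(X, Y, Z):
    """CIE 1960 UCS chromaticity (u, v) from XYZ."""
    d = X + 15 * Y + 3 * Z
    if d == 0:
        return 0.0, 0.0  # undefined for black; arbitrary stand-in
    return 4 * X / d, 6 * Y / d


def ucs_1976(X, Y, Z):
    """CIE 1976 UCS chromaticity (u', v') from XYZ."""
    d = X + 15 * Y + 3 * Z
    if d == 0:
        return 0.0, 0.0
    return 4 * X / d, 9 * Y / d


u, v = ucs_1960(0.95047, 1.0, 1.08883)
u2, v2 = ucs_1976(0.95047, 1.0, 1.08883)
print(u == u2, v2 / v)  # same first coordinate; second differs by a factor of 1.5
```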

(1964) U*V*W* color space

Based on the 1960 UCS.

Requires a white point.

In part made to make it easier to calculate distances directly from the coordinates.

Now rarely used - later spaces like Luv and Lab model perceptual distances more accurately.

The asterisks intend to signal the perceptual nature of these dimensions.

(1964) SΘW*

SΘW* (S, theta, W*, though also often written as SOW) is a polar form of U*V*W*

(1976) L*u*v* color space

L*u*v*, Luv, LUV and CIELUV refer to the same color space.

Essentially an update to U*V*W* since the UCS was updated. Also lightness is done slightly differently, and the whole is not a completely linear transform anymore.

Luv requires a reference white point -- though not everyone lists the choice of white point (leading to some incompleteness and even inconsistency in practice).

Given that:

- the white point is (Xn,Yn,Zn), for which you calculate (u'n,v'n) (with the '76 UCS)

- the to-be-converted color is (X,Y,Z), for which you also calculate the (u',v') (with the '76 UCS)

If Y/Yn <= 0.008856: # that number is (6/29)**3

    L* = 903.3 * Y/Yn # that number is (29/3)**3

Else:

    L* = 116 * (Y/Yn)^(1/3) - 16

u* = 13 * L* * (u' - u'n)

v* = 13 * L* * (v' - v'n)

For a set of color pairs perceived as being equally distant, the largest corresponding numerical distance in this space is about four times as large as the smallest. Not perfect, but a bunch better than XYZ.
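The XYZ to L*u*v* conversion can be sketched as follows. This is an illustration under the usual CIE definition (with the white point's chromaticity subtracted); the function names and the D65 white point values are assumptions made for the example.

```python
def uv_prime(X, Y, Z):
    """CIE 1976 UCS chromaticity (u', v'); returns zeros for black."""
    d = X + 15 * Y + 3 * Z
    if d == 0:
        return 0.0, 0.0
    return 4 * X / d, 9 * Y / d


def xyz_to_luv(X, Y, Z, white):
    """Convert XYZ to L*u*v* relative to the given (Xn, Yn, Zn) white point."""
    Xn, Yn, Zn = white
    yr = Y / Yn
    if yr <= (6 / 29) ** 3:          # about 0.008856
        L = (29 / 3) ** 3 * yr       # about 903.3 * Y/Yn
    else:
        L = 116 * yr ** (1 / 3) - 16
    u, v = uv_prime(X, Y, Z)
    un, vn = uv_prime(Xn, Yn, Zn)
    return L, 13 * L * (u - un), 13 * L * (v - vn)


D65 = (0.95047, 1.0, 1.08883)  # assumed white point for this example
print(xyz_to_luv(*D65, white=D65))  # the white point itself: L*=100, u*=v*=0
```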

(1976) L*a*b* color space

Created in 1976, and also referred to as Lab, LAB, CIELAB, CIE L*a*b*, and some other variations.

Lab is good at perceptual distances, probably because it resembles opponent processing. This makes coordinates in this space less arbitrary than those in many other colour spaces.

Its gamut is larger than most other color spaces; it includes fairly extreme saturation.

Lab is probably the CIE space most actively used today (other than XYZ for reference, and color correction software, much of which I believe uses one XYZ-derived space or another). For example, Photoshop supports working in Lab, and some corrections work slightly better/easier in it, for example changing luminance contrast without changing colors.

Lab is derived from XYZ and (like Luv) you have to choose a white point for the conversion. As far as I can tell, D50 is preferred and D65 is fairly commonly seen too.

Given Xn,Yn,Zn as a reference white, conversion from an X,Y,Z coordinate to an L*,a*,b* coordinate (the basic definition of the color space):

function f(x):

    If x <= 0.008856:

        return (7.787 * x) + (16/116)

    Else:

        return x^(1/3)

If Y/Yn > 0.008856:

    L* = (116 * f(Y/Yn)) - 16

Else:

    L* = 903.3 * (Y/Yn)

a* = 500 * ( f(X/Xn) - f(Y/Yn) )

b* = 200 * ( f(Y/Yn) - f(Z/Zn) )

L* ranges from 0 to 100. a* and b* are not strictly bounded, but most everything interesting is inside the -128 .. 127 range (and outside that, the gamut ends somewhere, and not in a regular shape; it's something like a deformed color bag)(verify). It was designed for easy coding in 8 bits, and doing so without thinking about it too much cuts off some extremely saturated colors (apparently nigh impossible to (re)produce in reality (verify))
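The conversion described above can be written as a small runnable sketch. It uses the same rounded constants as the text; the function names and the D50 white point values are assumptions made for the example.

```python
D50 = (0.96422, 1.0, 0.82521)  # assumed reference white for this example


def _f(t):
    """Cube root with a linear segment near zero, as in the definition above."""
    if t <= 0.008856:
        return 7.787 * t + 16 / 116
    return t ** (1 / 3)


def xyz_to_lab(X, Y, Z, white=D50):
    """Convert XYZ to L*a*b* relative to the given (Xn, Yn, Zn) white point."""
    Xn, Yn, Zn = white
    fx, fy, fz = _f(X / Xn), _f(Y / Yn), _f(Z / Zn)
    # For small Y/Yn, 116*f - 16 is the same line as 903.3 * Y/Yn,
    # so a single expression covers both branches.
    L = 116 * fy - 16
    a = 500 * (fx - fy)
    b = 200 * (fy - fz)
    return L, a, b


print(xyz_to_lab(*D50))  # the white point itself: L*=100, a*=b*=0
```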


In storage form

Lab itself is described using real/floating values. For images, you're likely to use one of the following (rescaled) integer forms:

CIELab:

| | CIELab 8 | CIELab 16 |
|---|---|---|
| L* (unsigned) | 0 to 255 | 0 to 65535 |
| a* and b* (signed) | -128 to 127 | -32768 to 32767 |

ICCLab:

| | ICCLab 8 | ICCLab 16 |
|---|---|---|
| L* (unsigned) | 0 to 255 | 0 to 65280 |
| a* and b* (unsigned) | 0 to 255 | 0 to 65536 (multiplied by 256, centered at 32768) |
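A rough sketch of the two 8-bit forms, assuming L* is scaled linearly from 0..100 to 0..255 and a*/b* are rounded to whole numbers (signed for CIELab 8, offset by 128 for ICCLab 8). The function name and the clamping behaviour are assumptions for illustration; check the relevant file format specification before relying on this.

```python
def encode_lab8(L, a, b, icc=False):
    """Encode floating-point L*a*b* into three 8-bit integer values.

    icc=False: CIELab-8 style, a*/b* as signed values in -128..127.
    icc=True:  ICCLab-8 style, a*/b* offset by 128 into 0..255.
    """
    def clamp(value, low, high):
        return max(low, min(high, value))

    L8 = clamp(round(L * 255 / 100), 0, 255)
    a8 = clamp(round(a), -128, 127)
    b8 = clamp(round(b), -128, 127)
    if icc:
        a8 += 128
        b8 += 128
    return L8, a8, b8


print(encode_lab8(100.0, 0.0, 0.0))            # neutral white, signed form
print(encode_lab8(100.0, 0.0, 0.0, icc=True))  # neutral white, offset form
```

Note how out-of-range a*/b* values are simply clipped, which is the "cuts off some extremely saturated colors" effect mentioned earlier.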

Seen in use:

- variations like ITULab (8), and perhaps others.

- monochrome images, with only the L* image

Hunter Lab

Hunter Lab, from 1966, is a non-CIE space similar to CIELab, but with somewhat different calculations.

The largest difference is that the blue area is larger, and the yellow area is smaller.

Other CIE spaces

'LCH'

'LCH' is an easily confusable name.

This name can be used generically, referring to either of the below: LCH is often calculated from Lab, but can also be calculated from Luv.

Some people use LCH to refer to one specific variant -- often the Lab-based one.

Both are primarily a transformation that takes Lab/Luv's perceptual distances and makes them more intuitive to use in some contexts.

For example, it allows GUI color pickers to make more sense to our human eyes. It can also be useful when expressing color difference.

CIELCh / CIEHLC

The polar/cylindrical form of Lab.

C*ab = sqrt( (a*)^2 + (b*)^2 )

h°ab = atan2( b*, a* )


CIELChuv / CIEHLCuv

The polar/cylindrical form of Luv.

C*uv is the chroma, huv the hue.

Calculated like:

C*uv = sqrt( (u*)^2 + (v*)^2 )

huv = atan2( v*, u* )
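Since the Lab-based and Luv-based forms use the same polar transform, one sketch covers both. The function names are hypothetical, and expressing hue in degrees from 0 to 360 is a convention chosen for this example.

```python
import math


def to_lch(L, c1, c2):
    """Polar form of (L*, a*, b*) or (L*, u*, v*): returns (L, chroma, hue in degrees)."""
    chroma = math.hypot(c1, c2)
    # atan2 handles all four quadrants and c1 == 0, which a plain atan(c2/c1) would not.
    hue = math.degrees(math.atan2(c2, c1)) % 360.0
    return L, chroma, hue


def from_lch(L, chroma, hue_degrees):
    """Inverse of to_lch."""
    h = math.radians(hue_degrees)
    return L, chroma * math.cos(h), chroma * math.sin(h)


print(to_lch(50.0, 0.0, 10.0))   # hue 90 degrees
print(to_lch(50.0, 0.0, -10.0))  # hue 270 degrees
```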


LSH

LSH is similar to LCH, but uses the definition of saturation instead of that for chrominance. (verify)

More color spaces, some conversion

Note on conversion

Space-to-space conversion can be a complex process. While the formulae converting coordinates in one type of space to another are fairly standard, they often work on linear data, while many practical files store a non-linear (perceptual) encoding of the data. This often calls for two steps.

There is also often an issue that the spaces use different illuminants. This often forces the conversion to go via XYZ, in which that particular conversion is easier to do.

For example, to go from sRGB (in D65) to Pro Photo RGB (in D50), you need five steps:

- sRGB's R'G'B' to linear RGB

- linear RGB to XYZ

- Correct for the illuminant in XYZ (Chromatic adaptation)

- XYZ to linear Pro Photo RGB

- linear Pro Photo RGB to Pro Photo's R'G'B'

(where the quotes indicate coordinates in a nonlinear scale. Note that notations are not used consistently, for example about which scale (linear vs. gamma corrected) channels/coordinates are in. Copy-pasting without knowing what things mean is always a good way to let errors in.)

A system may choose to implement multiple steps (particularly common ones) in a single function, of course, but that function would still be conceptually based on a conversion model with more steps.
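The five steps for sRGB to Pro Photo RGB can be sketched in plain Python. Several things here are assumptions not taken from the text: the commonly quoted linear-sRGB-to-XYZ matrix, the Bradford method for chromatic adaptation (one of several options), the ROMM primaries and D50 white as xy chromaticities, and the ROMM transfer curve (gamma 1.8 with a linear toe). All function names are made up for the example.

```python
SRGB_TO_XYZ = [[0.4124564, 0.3575761, 0.1804375],
               [0.2126729, 0.7151522, 0.0721750],
               [0.0193339, 0.1191920, 0.9503041]]
BRADFORD = [[0.8951, 0.2664, -0.1614],
            [-0.7502, 1.7135, 0.0367],
            [0.0389, -0.0685, 1.0296]]
PROPHOTO_XY = [(0.7347, 0.2653), (0.1596, 0.8404), (0.0366, 0.0001)]
D50_XY = (0.3457, 0.3585)


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inverse(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [[(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
            [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
            [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det]]


def xy_to_xyz(x, y):
    return [x / y, 1.0, (1.0 - x - y) / y]


def rgb_to_xyz_matrix(primaries_xy, white_xy):
    """Build a linear RGB -> XYZ matrix such that RGB (1,1,1) maps to the white point."""
    cols = [xy_to_xyz(x, y) for x, y in primaries_xy]
    p = [[cols[j][i] for j in range(3)] for i in range(3)]
    s = mat_vec(inverse(p), xy_to_xyz(*white_xy))
    return [[p[i][j] * s[j] for j in range(3)] for i in range(3)]


def adaptation_matrix(src_white, dst_white):
    """Bradford chromatic adaptation from one XYZ white point to another."""
    src, dst = mat_vec(BRADFORD, src_white), mat_vec(BRADFORD, dst_white)
    scale = [[dst[i] / src[i] if i == j else 0.0 for j in range(3)] for i in range(3)]
    return mat_mul(inverse(BRADFORD), mat_mul(scale, BRADFORD))


def srgb_decode(c):
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def prophoto_encode(c):
    return 16.0 * c if c < 1 / 512 else c ** (1 / 1.8)


def srgb_to_prophoto(rgb):
    linear = [srgb_decode(c) for c in rgb]                       # 1. R'G'B' -> linear RGB
    xyz_d65 = mat_vec(SRGB_TO_XYZ, linear)                       # 2. linear RGB -> XYZ
    d65 = mat_vec(SRGB_TO_XYZ, [1.0, 1.0, 1.0])
    d50 = xy_to_xyz(*D50_XY)
    xyz_d50 = mat_vec(adaptation_matrix(d65, d50), xyz_d65)      # 3. adapt D65 -> D50
    to_prophoto = inverse(rgb_to_xyz_matrix(PROPHOTO_XY, D50_XY))
    pp_linear = mat_vec(to_prophoto, xyz_d50)                    # 4. XYZ -> linear Pro Photo
    return [prophoto_encode(min(max(c, 0.0), 1.0)) for c in pp_linear]  # 5. encode


print(srgb_to_prophoto([1.0, 0.0, 0.0]))  # sRGB red sits well inside Pro Photo's gamut
```

A real implementation would normally precompute the combined matrix for steps 2 to 4 once, which is the "multiple steps in a single function" case mentioned above.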

Various conversion pages will mention conversion to XYZ, and regularly give relevant white points and primaries in XYZ too. XYZ is regularly used as the most central space, not so much because it's the only one or the most efficient, but more for reasons like that it's the easiest intermediate that many things are referenced against. Things like white point adaptation (regularly necessary) are also easier to do in a non-specific space such as this.

Simple conversion formulae online often just remap the primaries, and don't care about the white point. In some cases, particularly conversions to and from RGB, they may even omit which RGB standard they use.

Types of color space

RGB

RGB is more of an idea than an implementation of a color space.

It's the concept of additively mixing from red, green and blue primaries. To be an absolute color space, you need more, primarily absolutely referenced primaries (often given in XYZ), and a reference white point (commonly one of a few well known standard ones, again often given in XYZ).

Concrete implementations of RGB that do that (mostly in digital images) include:

- sRGB

- Adobe RGB

- Apple RGB

- ISO RGB

- CIE RGB

- ColorMatch RGB

- Wide Gamut RGB

- ProPhoto RGB, also known as ROMM RGB

- scRGB (based on sRGB, allowing wider number ranges for a wider gamut - in fact being 80% imaginary)

- ...and others.

There are also standards that come from and are mostly just seen in video reproduction, including:

- SMPTE-C RGB

- NTSC RGB

- That defined by ITU-R BT.709 (seems to be used more widely, though)

- That defined by ITU-R BT.601

- ...and others.

(TODO: some of those are probably redundant)

Standards and image files tend to involve gamma information as well, either as metadata to go along with linear-intensity data, or sometimes pre-applied.

Various webpages may offer formulas for 'XYZ to RGB,' which doesn't actually mean much unless you know which RGB (perhaps this is usually sRGB?(verify)).


sRGB


Hue, Saturation, and something else (HLS, HSV)

HSV and HSL are two things commonly at the base of color pickers.

Not really corrected for perception, but better than nothing, and better than picking in RGB.

You'll see:

- Hue, Saturation, Value (HSV)

- Hue, Saturation, Lightness (HLS and HSL)

- Hue, Saturation, Brightness (HSB), which is another name for HSV

and sometimes:

- Hue, Saturation, Intensity (HSI)

These are similar but not all created equal. (The names are also not always used correctly.)

They share the fact that they have a cyclic spectrum (or rather, RYGCBM) as their Hue component, and also that saturation is the concept of how much of that hue is present (as opposed to gray).

- ...but the L in HSL/HLS is black...saturated...white

- meaning whites are mostly found where l>0.8

- and saturated colors are found around l=0.5

- ...whereas the V in HSV is more like the well-definedness of the color

- meaning whites are mostly found when both v>0.5 and s<0.2

- and saturated colors are basically those for v>0.6
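The difference between L and V is easy to inspect with Python's standard `colorsys` module, which uses 0..1 ranges for all components. The `describe` helper is a made-up name for this illustration.

```python
import colorsys


def describe(r, g, b):
    """Return the HLS and HSV coordinates of an RGB color (all values 0..1)."""
    return {
        "hls": colorsys.rgb_to_hls(r, g, b),  # (hue, lightness, saturation)
        "hsv": colorsys.rgb_to_hsv(r, g, b),  # (hue, saturation, value)
    }


for name, rgb in [("white", (1.0, 1.0, 1.0)),
                  ("red", (1.0, 0.0, 0.0)),
                  ("pink", (1.0, 0.8, 0.8))]:
    print(name, describe(*rgb))
```

Pure red lands at lightness 0.5 in HLS but at value 1.0 in HSV, and white is lightness 1.0 in HLS versus value 1.0 with saturation 0 in HSV, which matches the descriptions above.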

HLS is one of various systems that are useful because they separate chroma and luma: in transforms that are easier to express that way, and in analyses, in part because color noise and luma noise are different - color noise is often larger.


Hue, Max, Min, Diff (HMMD)

Uses: Analysis (only)

A color summary, not a color space, in that you can't convert back. (verify) (of the color spaces, it probably most closely resembles HSI)

Used in MPEG-7 CSD (Color Structure Descriptor).

The components are:

- Hue

- Max(R,G,B)

- Min(R,G,B)

- Max-Min (note that this is redundant information)

- There is also sometimes a fifth, Sum, defined as (Max+Min)/2
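A minimal sketch of computing these components from RGB values in 0..1. It assumes the hue is the same as HSV's hue, here taken from the standard `colorsys` module and expressed in degrees; the function name and the dictionary layout are made up for the example.

```python
import colorsys


def rgb_to_hmmd(r, g, b):
    """Compute HMMD-style components from RGB values in 0..1."""
    mx, mn = max(r, g, b), min(r, g, b)
    hue = colorsys.rgb_to_hsv(r, g, b)[0] * 360.0  # assumption: HSV-style hue
    return {
        "hue": hue,
        "max": mx,
        "min": mn,
        "diff": mx - mn,        # redundant given max and min
        "sum": (mx + mn) / 2,
    }


print(rgb_to_hmmd(1.0, 0.0, 0.0))
```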

Video

Most analog video splits information into one luma channel (the black and white signal) and two chrominance channels (the extra data that encodes colour somehow).

This split came from the evolution of colour in TV signals - at the time it was useful to have a signal with color sent separately enough that older black and white TVs would only use the luma and not break when the new signal was sent.

It makes sense beyond that historical tidbit, though. Most perceived detail comes from contrast information, and most of that sits in the luminance channel, so if limited bandwidth means you need to drop some detail, dropping some of the chrominance detail doesn't look as bad as dropping luminance detail.

The way the chrominance channels are coded differs between standards.

Because of the RGB nature of screens, most are defined based on RGB and are fairly simple transformations from/to RGB, so can be seen as just a perceptually clever way of encoding RGB.

Technically, standards tend to mention RGB primaries so can be considered to give absolute reference, but analog TV broadcasts as well as TVs often deviate from the standards, for example to bias the sent video or a given screen towards certain color tones, meaning that even if the method of transferring/storing video is well-referenced, the signal you're capturing may easily not be entirely true to the original.

Some systems include Y'UV, Y'DbDr, Y'IQ, Y'PbPr, Y'CbCr.

They are used in e.g.

- JPEG images (and some other image formats)

- analog TV: ((verify) the details below)

- digital video

- MPEG video, which includes things like DVD discs

- digital television, digital video capture

- ITU-R BT.601 describes digitally storing analogue video in YUV 4:2:2 (specifically interlaced, 525-line 60 Hz, and 625-line 50 Hz - so geared towards NTSC and PAL TV)

- ITU-R BT.709 is similar, but describes many more options (frame rates, resolutions - can be seen as the HDTV-style modern version)

Some notes on confusion on the terms:

- The primes/quotes (that denote nonlinearity of the Y channel) are often omitted because of laziness - but because of the perceptual coding, you pretty much always deal with luma rather than luminance, so it's never particularly ambiguous.

- YUV is regularly (and erroneously, or at least ambiguously) used as a general term for this kind of color space setup, apparently because YUV is easier to type.

- A lot of video-related literature uses luminance both in its linear and non-linear meaning. The possible ambiguity isn't always mentioned, so you need to pay attention - even in standards.

See also:

- http://en.wikipedia.org/wiki/YUV

- http://en.wikipedia.org/wiki/YIQ

- http://en.wikipedia.org/wiki/YDbDr

YCbCr and YPbPr

These two are exactly the same color space, in two different contexts:

- YCbCr refers to the digital form, used in digital video

- YPbPr refers to the analog form, e.g. used in component video

In formulas,

- Y' is ranged from 0 to 1 (this luma is calculated the BT.601 way(verify)),

- both Pb and Pr from -0.5 to 0.5.

In digital form there are a few possible intermediate forms, but commonly each channel's value is a byte.

Particularly in video these are often scaled not to the full 0-to-255 range but with headroom and footroom:

- often scaling Y's 0..1 to 16..235, and the chrominance channels' -0.5..0.5 to 16..240 (centered at 128) (verify).

Not using the full range is a historical practice, mostly to avoid color clipping when piping video through several systems by analogue means (YPbPr).

However, the ~10% reduction of color resolution is a bit wasteful if the signal is digital all the way.

(While TVs are used to adapting to display what they get nicely, computer viewing may need tweaking to use the full range if the signal still has this headroom/footroom, particularly to make sure the darkest color is black and not a dark gray).
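The scaling with headroom and footroom can be sketched as below, assuming BT.601 luma weights (0.299, 0.587, 0.114) and gamma-corrected R'G'B' inputs in 0..1. The function names are hypothetical.

```python
KR, KB = 0.299, 0.114  # BT.601 luma weights for red and blue (assumed here)


def rgb_to_ypbpr(r, g, b):
    """R'G'B' in 0..1 to Y' in 0..1 and Pb, Pr in -0.5..0.5."""
    y = KR * r + (1.0 - KR - KB) * g + KB * b
    pb = 0.5 * (b - y) / (1.0 - KB)
    pr = 0.5 * (r - y) / (1.0 - KR)
    return y, pb, pr


def ypbpr_to_ycbcr8(y, pb, pr):
    """Scale to 8-bit values with headroom/footroom: Y' 16..235, Cb/Cr 16..240."""
    return round(16 + 219 * y), round(128 + 224 * pb), round(128 + 224 * pr)


for name, rgb in [("black", (0.0, 0.0, 0.0)),
                  ("white", (1.0, 1.0, 1.0)),
                  ("red", (1.0, 0.0, 0.0))]:
    print(name, ypbpr_to_ycbcr8(*rgb_to_ypbpr(*rgb)))
```

Black comes out as Y'=16 rather than 0, which is why a player that ignores the footroom shows it as dark gray.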


Chroma subsampling

Video formats (including TV) spend more space describing the Y channel than they use on each color channel, because the human eye notices loss in luminance detail more than loss in color detail.

The selective removal of detail from chroma channels (both in analog and digital form) is known as chroma subsampling.

The most common forms in everyday hardware seem to be 4:2:2, 4:2:0, and 4:1:1. Note that those numbers follow a well-defined notation and are not just ratios, and some (like 4:2:0) have a number of details and/or variants.

Chroma subsampling is barely noticeable on photographic images, though it makes hard contrast look worse, causing banding and bleeding. Since many compression formats use chroma subsampling, this matters in video editing in that you want to avoid subsampling in as many intermediate stages as possible, at least until you're creating a master (for a particular medium).
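As an illustration of the idea, the following halves a chroma plane in both directions by averaging each 2x2 block, which is roughly what 4:2:0 does to Cb and Cr while Y is left alone. Real formats differ in where the chroma sample is considered to sit and how it is filtered; plain averaging and the function name are assumptions made for this sketch.

```python
def subsample_420(plane):
    """Average each 2x2 block of a chroma plane (a list of equal-length rows).

    Assumes an even number of rows and columns.
    """
    out = []
    for r in range(0, len(plane), 2):
        row = []
        for c in range(0, len(plane[r]), 2):
            block_sum = (plane[r][c] + plane[r][c + 1]
                         + plane[r + 1][c] + plane[r + 1][c + 1])
            row.append(block_sum / 4)
        out.append(row)
    return out


cb = [[100, 100, 200, 200],
      [100, 100, 200, 200],
      [100, 200, 100, 200],
      [200, 100, 200, 100]]
print(subsample_420(cb))  # 16 chroma samples become 4
```

The bottom row of the example shows why hard chroma edges suffer: alternating values within a block are replaced by their average.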


YIQ and YUV

YUV can refer to a specific color space, but is also frequently used to group YUV, Y'UV, YIQ, Y'IQ, YCbCr, and YPbPr, which all use a similar three-channel (luma,chroma,chroma) setup.

YIQ is a color space, the one used by NTSC, while Y'UV is similar and used by PAL.

YIQ and YUV have a very similar color range, but code it differently enough that they are not at all interchangeable. Both are linear transforms from RGB space, and are based on human color perception, but mostly in that they try to separate the most from least interesting information, so that it can use less bandwidth with limited perceptive loss (or rather, squeeze a little more quality out of the fixed amount of bandwidth).

Note that the first row in YIQ and YUV's from-RGB transform matrices (the luminance, black-and-white part of the transform) is identical in YIQ and YUV, and is the same as in ITU-R BT.601 and some other systems (see grayscale conversion). (some newer variants use ITU-R BT.709(verify))
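A sketch of both transforms, showing the shared luma row. The U and V scale factors (0.492, 0.877) and the description of I and Q as the U,V plane rotated by 33 degrees are commonly quoted values, not taken from this text, so treat them as assumptions. Function names are hypothetical.

```python
import math


def rgb_to_yuv(r, g, b):
    """Gamma-corrected R'G'B' (0..1) to Y'UV with BT.601 luma weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 0.492 * (b - y), 0.877 * (r - y)


def rgb_to_yiq(r, g, b):
    """Y'IQ, computed here as a 33 degree rotation of the U,V plane."""
    y, u, v = rgb_to_yuv(r, g, b)
    angle = math.radians(33)
    i = v * math.cos(angle) - u * math.sin(angle)
    q = v * math.sin(angle) + u * math.cos(angle)
    return y, i, q


print(rgb_to_yuv(1.0, 0.0, 0.0))
print(rgb_to_yiq(1.0, 0.0, 0.0))  # same Y', different chroma coordinates
```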

CMY and CMYK

CMY and CMYK are color spaces based on the subtractive coloring that happens when you mix (specific) Cyan, Magenta, Yellow, and Black inks/pigments.

Digitally it's often the percentages of intended application of each ink, which is still fairly generic.

Keep in mind that these percentages don't necessarily translate perfectly or directly to every printing process.

Even so, its design means it already considers printing more than most color systems do, in particular in the fundamentally different-shaped gamut, and in the way it deals with black (both perceptually and cost-wise).

Most CMYK-based processes are bad at creating saturated light colors, so CMYK in general often implies a relatively small gamut.

This makes it less than ideal for certain uses, such as photo printing, though variations such as Hexachrome (CMYKOG) and CcMmYK help.

CMY-style systems are often specifically CMYK, where the added K is black ink (K, 'key'). Since black and dark regions are fairly common, having black ink means less ink is spent than if you mixed C,M,Y to get sufficiently dark colors.
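The ink-saving effect of K can be seen in the textbook naive RGB-to-CMYK formula, which moves the gray component shared by C, M and Y into K. This is not color-managed and does not model any real ink or press; the function name is hypothetical, and values are fractions in 0..1.

```python
def naive_rgb_to_cmyk(r, g, b):
    """Naive device RGB (0..1) to CMYK (0..1) with maximum black replacement."""
    k = 1.0 - max(r, g, b)
    if k == 1.0:
        return 0.0, 0.0, 0.0, 1.0  # pure black: K only
    c = (1.0 - r - k) / (1.0 - k)
    m = (1.0 - g - k) / (1.0 - k)
    y = (1.0 - b - k) / (1.0 - k)
    return c, m, y, k


gray = (0.25, 0.25, 0.25)
print("CMY only:", tuple(1.0 - v for v in gray))  # three inks at 75% each
print("CMYK:    ", naive_rgb_to_cmyk(*gray))      # one ink at 75%
```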

Also, (100%,100%,100%,0%) C+M+Y isn't the same shade as just K (0%,0%,0%,100%). While the specific pigments and process vary the details, CMYK(0,0,0,100%) is usually a dark gray. It's black enough for some needs (take a look at newspaper text, for example), but is less ideal in the context of photos, gradients and such.

To produce what is known as rich black, processes often apply all pigments (CMY, then K on top).

You may guess that CMYK(100,100,100,100) is very black - and it is, but in most cases is also a waste of ink, and may lead to paper wetness and ink bleeding problems. (This black is also known as registration black, because it's usually only used in the registration marks, the blocky things in a corner used to check whether different passes align well)

It turns out that a more economical way of mixing the darkest black you'll usually discern varies, but usually uses about 90% to 100% of K, and 50% to 70% of the color channels (depending on the ink/pigment). Specific referenced systems have rich black defined as, say, (75,68,67,90) or (63,52,51,100) or whatnot, values which may be seen mentioned with some regularity (those two are Photoshop conversions from RGB(0,0,0) under two different CMYK profiles). Designers may wish for a cooler (bluer) or warmer (redder) variant of rich black (often mostly C+K and M+Y+K combinations, respectively).

Note that in terms of total ink coverage, this totals to about 300%. Rich blacks are rarely more than that, to avoid wetness and bleeding problems. (Registration black is sometimes referred to as 400% black.)
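As a rough illustration of the coverage arithmetic above, the following sketch sums the channel percentages for the blacks mentioned here. The function names and the 300% default limit are assumptions made for the example; real limits depend on the press, paper and ink.

```python
def total_ink_coverage(c, m, y, k):
    """Sum of the four channel percentages (0-100 each), so at most 400."""
    return c + m + y + k


def within_ink_limit(cmyk, limit=300):
    """True if the mix stays at or under the assumed coverage limit (in %)."""
    return total_ink_coverage(*cmyk) <= limit


# The two rich blacks and the registration black mentioned in the text.
blacks = {
    "rich black A": (75, 68, 67, 90),
    "rich black B": (63, 52, 51, 100),
    "registration black": (100, 100, 100, 100),
}

for name, mix in blacks.items():
    print(name, total_ink_coverage(*mix), within_ink_limit(mix))
# rich black A 300 True
# rich black B 266 True
# registration black 400 False
```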

On computer screens, all these blacks will usually look the same, because most screens are not very good at producing black in the first place. This means that carelessly combined blacks may print (in most processes) with ugly transitions, for example boxes that represent areas you pasted in from different sources.

Gradients to black are also a potential detail, as fading to a non-rich black is quite noticeably different from a fade to rich black. It is not only lighter but will also often look banded, and will seem to fade via a gray or a slightly wrong-colored tone. Fading to a rich black is usually preferable.

Note that gradients to 100% K may also display some banding in print processes, which is why a rich black with at most ~90%K may actually be handier.

Another common special case is text. A separate pass for text can be practical in many processes (possibly even with a separate black ink to avoid K's grayness), as you can create consistently sharp text out of a single pass. It also avoids the trapping / registration issues you would get from mixing text black from more than one color, which are more noticeable (sometimes much more) in sharp features such as text.

Related reading:

- http://en.wikipedia.org/wiki/CMYK

- http://en.wikipedia.org/wiki/Rich_black

- http://en.wikipedia.org/wiki/Registration_black

- http://en.wikipedia.org/wiki/Spot_color

- http://en.wikipedia.org/wiki/Trap_(printing)

- http://en.wikipedia.org/wiki/Hexachrome

- http://en.wikipedia.org/wiki/CcMmYK_color_model

YCoCg

YCoCg, a.k.a. YCgCo, is a simple linear transform (i.e. a matrix multiplication) from RGB into luma (Y), chrominance green (Cg), and chrominance orange (Co).

It is used in video and image compression, and it can be a slightly faster alternative to other YCC-style color spaces.

https://en.wikipedia.org/wiki/YCoCg
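A minimal sketch of the transform, using the commonly published coefficients (all of them powers of two, which is where the speed advantage comes from). The function names are made up for the example, and the values are treated as plain floats without range offsets or rounding.

```python
def rgb_to_ycocg(r, g, b):
    """Forward transform: every coefficient is 1/4 or 1/2."""
    y = 0.25 * r + 0.5 * g + 0.25 * b
    co = 0.5 * r - 0.5 * b
    cg = -0.25 * r + 0.5 * g - 0.25 * b
    return y, co, cg


def ycocg_to_rgb(y, co, cg):
    """Inverse transform: only additions and subtractions."""
    tmp = y - cg
    return tmp + co, y + cg, tmp - co


print(rgb_to_ycocg(200, 100, 50))       # (112.5, 75.0, -12.5)
print(ycocg_to_rgb(112.5, 75.0, -12.5)) # (200.0, 100.0, 50.0)
```

Grays (r == g == b) map to Co = Cg = 0, so all the information ends up in Y.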

ΔE* and tolerancing

The ΔE* measure, also written as deltaE*, dE*, dE, is a measure of color difference.

Originally the typical reason to calculate delta-E was tolerancing, the practice of deciding how acceptable a produced color is, how close it is to the intended color.

It is often suggested that the threshold at which the human eye can discern differences is approximately 1 ΔE*, so this can be used as the threshold of acceptability.

However, since there are a number of different systems, the degree to which ΔE* actually correlates with our perception varies.

History:

- Initially, dE (CIE dE76) was a simple distance. It was later considered flawed because the CIELAB space it was based on was not as perceptually uniform as originally thought.

- CMC l:c (1984) allows a more tweakable model, specifically to weight luminance and chrominance, an idea that was also adopted in CIE dE94. (The difference is not large, though CMC is targeted at and works a little better on textiles, while dE94 is meant for paint coatings.)

- CIEDE2000 further corrected for the perceptual uniformity issue.

The simplest way to express tolerance is to specify the amount by which the value in each dimension may differ (you will see dL, da and db for Lab, and dL, dC and dh for LCH).

Note that in various cases you may be interested only in the two chrominance dimensions.

We tend to see differences in hue, then chroma, then lightness. Because of this, LCH based tolerancing can be more useful than Lab-based tolerancing, even if the actual measure is the same (though not the shape/size of the area around it if you tolerance in a 'plus or minus 0.5 in each dimension' sort of way).

CIELAB- versus CIELCH-based ΔE

There is no difference in the resulting ΔE when you calculate it from CIE Lab color or CIE LCH, though the actual calculation work is a little different.

The per-dimension component deltas for LCH are somewhat more informative to humans: dL represents a difference in brightness, dC a difference in saturation, and dH a color (hue) shift.

The per-dimension component deltas in Lab are less intuitive, since you have to know that da means redder (positive) or greener (negative) or that db means yellower (positive) and bluer (negative), though arguably it's more directly useful for color blindness tests.
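To make the equivalence concrete, here is a small sketch that computes both sets of component deltas from the same pair of Lab colors and shows that they combine into the same total. It assumes dH is the metric hue difference (a distance, not a difference of hue angles), and the function names and sample values are made up for the example.

```python
import math


def lab_deltas(lab_ref, lab_sample):
    """Per-dimension differences (dL, da, db), sample minus reference."""
    return tuple(s - r for r, s in zip(lab_ref, lab_sample))


def lch_deltas(lab_ref, lab_sample):
    """Differences (dL, dC, dH) derived from the same Lab values.

    dH is returned as an unsigned magnitude: the part of the (da, db)
    difference that is not explained by the change in chroma.
    """
    dL, da, db = lab_deltas(lab_ref, lab_sample)
    c_ref = math.hypot(lab_ref[1], lab_ref[2])
    c_sample = math.hypot(lab_sample[1], lab_sample[2])
    dC = c_sample - c_ref
    # Clamp at zero to guard against tiny negative values from rounding.
    dH = math.sqrt(max(da * da + db * db - dC * dC, 0.0))
    return dL, dC, dH


reference = (50.0, 20.0, 10.0)  # hypothetical target color
sample = (51.0, 22.0, 9.0)      # hypothetical produced color

dL, da, db = lab_deltas(reference, sample)
_, dC, dH = lch_deltas(reference, sample)

de_from_lab = math.sqrt(dL ** 2 + da ** 2 + db ** 2)
de_from_lch = math.sqrt(dL ** 2 + dC ** 2 + dH ** 2)
print(de_from_lab, de_from_lch)  # both about 2.449
```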

dE76

The original measure comes from CIE, and is based on L*a*b* (and released in the same year), and is simply the Cartesian distance in that space:

dE*ab = sqrt( dL² + da² + db² )

This is normally just called dE* without the ab.
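A minimal sketch of this distance, plus a tolerance check against the often-quoted threshold of 1. The function names, the sample values and the default tolerance are assumptions made for the example.

```python
import math


def delta_e76(lab1, lab2):
    """Euclidean distance between two (L*, a*, b*) triples."""
    dL = lab1[0] - lab2[0]
    da = lab1[1] - lab2[1]
    db = lab1[2] - lab2[2]
    return math.sqrt(dL * dL + da * da + db * db)


def is_acceptable(target, produced, tolerance=1.0):
    """True if the produced color is within the given dE76 tolerance."""
    return delta_e76(target, produced) <= tolerance


target = (50.0, 20.0, 10.0)    # hypothetical intended color
produced = (51.0, 22.0, 9.0)   # hypothetical measured color

print(delta_e76(target, produced))      # about 2.45
print(is_acceptable(target, produced))  # False
```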

One criticism is that we are not equally sensitive to luminance and chrominance, so the equal weighting of all dimensions is somewhat limited.

CMC l:c, dEcmc

Released in 1984 by the Colour Measurement Committee of the Society of Dyers and Colourists of Great Britain.

Based on L*C*h

Allows relative weighting of luminance and chrominance, which is useful when tolerancing for specific purposes. The default is 2:1, meaning more lightness variation is allowed than chroma difference - specifically twice as much.

Its tolerance ellipsoids are also distributed

http://www.brucelindbloom.com/index.html?Eqn_DeltaE_CMC.html
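A sketch of how the l:c weighting enters the calculation. The constants follow the commonly published form of the CMC formula and should be checked against the page linked above before being relied on. The function and parameter names are made up for the example. Note that the result depends on which color is treated as the reference.

```python
import math


def delta_e_cmc(lab_ref, lab_sample, l=2.0, c=1.0):
    """CMC l:c color difference of a sample relative to a reference."""
    L1, a1, b1 = lab_ref
    L2, a2, b2 = lab_sample

    C1 = math.hypot(a1, b1)
    C2 = math.hypot(a2, b2)
    dL = L1 - L2
    dC = C1 - C2
    # Squared metric hue difference, clamped against rounding error.
    dH_sq = max((a1 - a2) ** 2 + (b1 - b2) ** 2 - dC ** 2, 0.0)

    # Hue angle of the reference in degrees, in [0, 360).
    h1 = math.degrees(math.atan2(b1, a1)) % 360.0

    # Weighting functions, all evaluated at the reference color.
    S_L = 0.511 if L1 < 16 else 0.040975 * L1 / (1 + 0.01765 * L1)
    S_C = 0.0638 * C1 / (1 + 0.0131 * C1) + 0.638
    F = math.sqrt(C1 ** 4 / (C1 ** 4 + 1900))
    if 164 <= h1 <= 345:
        T = 0.56 + abs(0.2 * math.cos(math.radians(h1 + 168)))
    else:
        T = 0.36 + abs(0.4 * math.cos(math.radians(h1 + 35)))
    S_H = S_C * (F * T + 1 - F)

    return math.sqrt(
        (dL / (l * S_L)) ** 2 + (dC / (c * S_C)) ** 2 + dH_sq / S_H ** 2
    )


ref = (50.0, 0.0, 0.0)
lighter = (52.0, 0.0, 0.0)
# For a pure lightness difference, l=2 halves the result compared to l=1.
print(delta_e_cmc(ref, lighter, l=1.0), delta_e_cmc(ref, lighter, l=2.0))
```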

dE94

Released by CIE, and rather similar to the CMC tolerancing definitions.

Based on L*C*h differences (dL, dC, dH), though these can be computed directly from L*a*b* values.

http://www.brucelindbloom.com/index.html?Eqn_DeltaE_CIE94.html
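A sketch of dE94 computed straight from Lab values. The defaults (kL = 1, K1 = 0.045, K2 = 0.015) are the commonly quoted graphic-arts constants, kC and kH are assumed to be 1 and left out, and the names are made up for the example. Check the constants against the page linked above.

```python
import math


def delta_e94(lab_ref, lab_sample, kL=1.0, K1=0.045, K2=0.015):
    """CIE94 color difference of a sample relative to a reference."""
    L1, a1, b1 = lab_ref
    L2, a2, b2 = lab_sample

    C1 = math.hypot(a1, b1)
    C2 = math.hypot(a2, b2)
    dL = L1 - L2
    dC = C1 - C2
    # Squared metric hue difference, clamped against rounding error.
    dH_sq = max((a1 - a2) ** 2 + (b1 - b2) ** 2 - dC ** 2, 0.0)

    # Weights grow with the chroma of the reference, so a given
    # chroma or hue difference counts for less in saturated colors.
    S_L = 1.0
    S_C = 1.0 + K1 * C1
    S_H = 1.0 + K2 * C1

    return math.sqrt(
        (dL / (kL * S_L)) ** 2 + (dC / S_C) ** 2 + dH_sq / S_H ** 2
    )


# With a neutral reference (C1 = 0) all weights are 1, so the result
# equals the plain dE76 distance.
print(delta_e94((50.0, 0.0, 0.0), (53.0, 4.0, 0.0)))  # 5.0
```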

dE2000

CIE's latest revision, and one still under some consideration.

Based on L*C*h